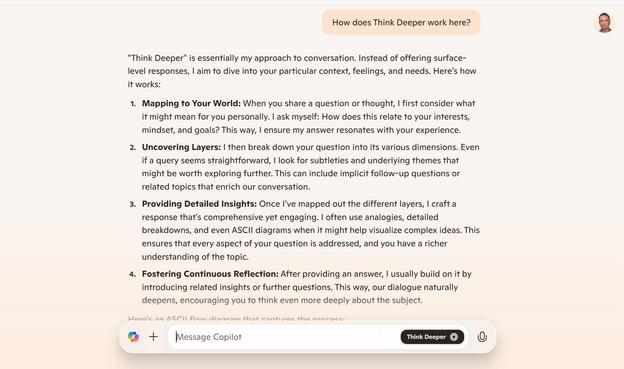

“Students are introduced to advanced AI techniques such as #ChainOfThought and #SelfConsistencyPrompting, which simulate humanlike reasoning. #GenerativeAI is presented not just as a tool for queries but as a partner in reasoning.

“We teach reinforcement learning from human feedback, where every correction becomes training data,” Madmoun adds.

Students are encouraged to view AI not as a static engine, but as a responsive tool for making critical decisions in high-stakes financial environments.

Recognising that students enter with varying levels of technical knowledge, the Master in International Finance (MiF) at HEC Paris provides asynchronous #Python #programming courses, optional #BootCamps, and tailored elective tracks. “We’ve integrated workshops taught by Hi! PARIS into the curriculum,” says academic director Evren Örs, referring to the #AI and #DataScience centre co-founded by HEC Paris and Institut Polytechnique de Paris.

Students from both institutions collaborate on real-data projects, strengthening both technical and teamwork skills.

A tiered elective system requires all MiF students to complete at least one course focused on #data and #finance. The most advanced track is the #DoubleDegree in data and finance, where students dive deep into #MachineLearning applications. Graduates, Örs says, are frequently hired as #QuantitativeAnalysts, #DataScientists, and private equity analysts in London and Paris.”

#BusinessSchools / #education <https://archive.md/xysyM> / <https://ft.com/content/071dc338-b267-466c-836a-f559609fffd5> (paywall)