#PaperThread

#paperThread #auditory #neuroscience

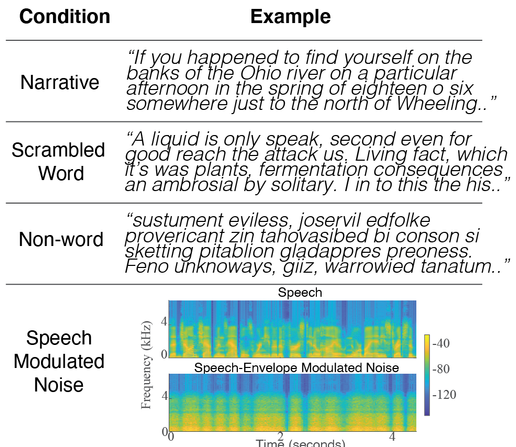

Our latest paper just came out in the Journal of Neuroscience: “Neural Dynamics of the Processing of Speech Features: Evidence for a Progression of Features from Acoustic to Sentential Processing.” We follow the cortical processing of four different speech-like stimuli (https://dushk88.github.io/progression-of-neural-features) through the brain, using MEG, from early auditory cortex to areas processing semantic-level information. The results show that each language-sensitive processing stage has both an early (bottom-up-like) cortical contribution and a late (top-down-like) cortical contribution, consistent with predictive coding. https://www.jneurosci.org/content/45/11/e1143242025

https://fediscience.org/@jzsimon/111865220831291708

#neuroscience #paperThread A new #preprint by Dushyanthi Karunathilake https://doi.org/10.1101/2024.02.02.578603

Language has a hierarchical structure, and some neural processing stages seem to align with these levels. Here we record MEG responses from subjects listening to a progression of speech/speech-like passages: speech-modulated noise; non-words with well-formed phonemes; shuffled words; and true narrative. We can then trace the hierarchy of neural processing stages, from acoustical to full language. 1/7

#neuroscience #paperThread New paper in PNAS by recent PhD Dushyanthi Karunathilake! https://doi.org/10.1073/pnas.2309166120

MEG responses from continuous speech listening lock to various stimulus features: acoustic, phonemic, lexical, & semantic. Could they provide an objective measure of when degraded speech is perceived as actually intelligible? This would give insight as to how the brain turns speech into language, and be a gold mine for clinical populations ill-suited for behavioral testing. 1/5

Interesting developments in subquadratic alternatives to self-attention-based transformers for long-sequence modeling (32k tokens and more).

Hyena Hierarchy: Towards Larger Convolutional Language Models

https://arxiv.org/abs/2302.10866

They propose replacing the quadratic self-attention layers with an operator built from implicitly parametrized long-kernel 1D convolutions.

#DeepLearning #LLMs #PaperThread

1/4
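A long convolution over a sequence of length L can be evaluated with the FFT in O(L log L) time instead of O(L^2), which is where the subquadratic cost comes from. The sketch below is a toy illustration in plain Python, not the Hyena implementation: `implicit_kernel` is a hypothetical stand-in (a decaying cosine with two parameters) for the small network that generates the filter in the paper, and the gating and projections of the full operator are left out.

```python
import cmath
import math


def fft(a, invert=False):
    """Recursive radix-2 FFT (unnormalized); len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        w = cmath.exp(sign * 2j * math.pi * k / n)
        out[k] = even[k] + w * odd[k]
        out[k + n // 2] = even[k] - w * odd[k]
    return out


def implicit_kernel(length, decay=0.05, freq=0.3):
    """Hypothetical implicit filter: every tap is a function of its position,
    so the kernel can be as long as the input with only two parameters."""
    return [math.exp(-decay * t) * math.cos(freq * t) for t in range(length)]


def causal_conv_direct(u, h):
    """Reference O(L^2) causal convolution: y[t] = sum_{s<=t} h[s] * u[t-s]."""
    return [
        sum(h[s] * u[t - s] for s in range(min(t + 1, len(h))))
        for t in range(len(u))
    ]


def causal_conv_fft(u, h):
    """Same result in O(L log L); assumes len(h) <= len(u)."""
    length = len(u)
    n = 1
    while n < 2 * length:  # zero-pad so circular wrap-around cannot occur
        n *= 2
    U = fft([complex(x) for x in u] + [0j] * (n - length))
    H = fft([complex(x) for x in h] + [0j] * (n - len(h)))
    y = fft([a * b for a, b in zip(U, H)], invert=True)
    return [v.real / n for v in y[:length]]  # divide by n to undo the FFT scaling


u = [math.sin(0.1 * t) for t in range(64)]
h = implicit_kernel(len(u))
max_err = max(abs(a - b) for a, b in zip(causal_conv_fft(u, h), causal_conv_direct(u, h)))
```

Here `max_err` compares the FFT path against the direct sum; the two should agree up to floating-point rounding.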

It's not the first time! A dream team of Eve Fleisig (human eval), Adam Lopez (remembers the Stat MT era), Kyunghyun Cho (helped end it), and me (pun in title) are here to teach you the history of scale crises and what lessons we can take from them. https://arxiv.org/abs/2311.05020 🧵 #paperthread #LLMs

5/5

Our dataset also comprises CT and MRI scans with patients' lesions segmented by an expert.

This allowed us to look at the distribution of lesions cluster-wise, and validate the associations between symptoms and lesions.

Check out our pre-print and comment, ask questions, offer suggestions!

Although it is not simple to share data, we will release code soon, as a means to replicate the approach on similar data and more.

The link is already in the paper!

And let us know if you have data you'd like to share and analyse with our developing methods👨🏾💻

We are deciding on the best match for a journal to review and possibly publish this work, of which I am super proud and thankful to co-authors Andrea Zanola, Antonio Bisogno, Silvia Facchini, Lorenzo Pini, Manfredo Atzori, and Maurizio Corbetta!

#scicomm #paperthread #preprints #neuroscience #machinelearning #mri #stroke #clustering

1/n

Our pre-print is finally out!

Here's my first #paperthread 🧵

In this work, my co-authors and I clustered ischaemic stroke patients' profiles and recovered common patterns of cognitive and sensorimotor damage.

...Historically, focal lesions to specific cortical areas were associated with specific deficits, but most strokes involve subcortical regions and bring multivariate patterns of deficits.

To characterize those patterns, many studies have turned to correlation analysis, factor analysis, and PCA, focusing on the relations among variables (i.e., domains of impairment)...

📝 Now reading: "From empirical problem-solving to theoretical problem-finding perspectives on the cognitive sciences" by @fedeadolfi, #LauraVandeBraak, and @mariekewoe (2023, PsyArXiv) #PaperThread 🧵

@jkanev I follow #NewPaper OR #preprint OR #PaperThread and it's *very* quiet.

DINOv2: Learning Robust Visual Features without Supervision

Tricks applied to DINO and iBOT to learn robust/generic features for many downstream tasks

My summary on HFPapers: https://huggingface.co/papers/2304.07193#64f81a7f35a0a9fc54363373

arXiv: https://arxiv.org/abs/2304.07193

Demo: https://dinov2.metademolab.com/

LidarCLIP or: How I Learned to Talk to Point Clouds

Align LiDAR encoder to CLIP image encoder and you can query LiDAR through image similarity or even text.

My summary on HFPapers: https://huggingface.co/papers/2212.06858#64f6f05910a91217c38874b7

arXiv: https://arxiv.org/abs/2212.06858

PWC: https://paperswithcode.com/paper/lidarclip-or-how-i-learned-to-talk-to-point
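Once LiDAR embeddings live in the same space as CLIP image and text embeddings, querying reduces to nearest-neighbour search by cosine similarity. The snippet below is a minimal sketch under that assumption: the vectors are made-up toy values standing in for encoder outputs, and `cosine` and `retrieve` are hypothetical helper names, not part of LidarCLIP or CLIP.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_emb, lidar_embs, top_k=1):
    """Return indices of the top_k LiDAR embeddings most similar to the query."""
    scored = sorted(
        ((cosine(query_emb, emb), idx) for idx, emb in enumerate(lidar_embs)),
        reverse=True,
    )
    return [idx for _, idx in scored[:top_k]]


# Toy stand-ins for embeddings of three point clouds (assumed values).
lidar_embs = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.7, 0.7, 0.0],
]
# Toy stand-in for the embedding of a text or image query (assumed values).
query_emb = [0.1, 0.9, 0.0]

best = retrieve(query_emb, lidar_embs, top_k=2)
```

With these toy values the query is closest to the second point cloud, then the third, so `best` is `[1, 2]`.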

IT'S PAPER DAY!

Seidel & Prinoth et al. has just been accepted for publication in A&A and you can find it on arXiv already today: https://arxiv.org/abs/2308.13622 ✨

Let us tell you a bit more 👇🏼🧵

Hello, world!

Look, it's me 👀 My second first-author paper has just been accepted for publication in A&A and you can find it on arXiv already today ✨

Let me tell you a bit about it 👇🏼🧵

(okay a lot, it's a big kid)

SNAP: Self-Supervised Neural Maps for Visual Positioning and Semantic Understanding

Top-view and ground-view images can make neural maps that aid visual positioning.

My summary on HFPapers: https://huggingface.co/papers/2306.05407#64c3fcb171947b03ffcf314c

arXiv: https://arxiv.org/abs/2306.05407

PapersWithCode: https://paperswithcode.com/paper/snap-self-supervised-neural-maps-for-visual

Diffusion Models Beat GANs on Image Classification

Extracting features (activations) from a block at a given diffusion time step gives a decent classifier.

My summary on HFPapers: https://huggingface.co/papers/2307.08702#64c3fb7ebf1103bae84618a6

arXiv: https://arxiv.org/abs/2307.08702

Links: [PapersWithCode](https://paperswithcode.com/paper/diffusion-models-beat-gans-on-image)

How is ChatGPT's behavior changing over time?

Monitors trends in performance of GPT-4 and GPT-3.5 (backend LLMs of ChatGPT) from March 2023 to June 2023 on diverse tasks.

My summary on HFPapers: https://huggingface.co/papers/2307.09009#64c3f9c2979493279b41df28

arXiv: https://arxiv.org/abs/2307.09009

GitHub: https://github.com/lchen001/LLMDrift

LightGlue: Local Feature Matching at Light Speed

Improving SuperGlue with changes to transformer (GNN matching). Iterative design gives speed boost.

My summary on HFPapers: https://huggingface.co/papers/2306.13643#64ba93f75e13c0d8659b4ce8

Links: [PapersWithCode](https://paperswithcode.com/paper/lightglue-local-feature-matching-at-light), [GitHub](https://github.com/cvg/lightglue), [arxiv](https://arxiv.org/abs/2306.13643)

Llama 2: Open Foundation and Fine-Tuned Chat Models

Improving LLaMA with hacks.

Gotta admit (being a critic of Meta) they have tested it well.

HFPapers: https://huggingface.co/papers/2307.09288

My summary: https://huggingface.co/papers/2307.09288#64b79ed1104e7af01c0c6425

Links: [website](https://ai.meta.com/llama/), [Meta AI blog post](https://ai.meta.com/research/publications/llama-2-open-foundation-and-fine-tuned-chat-models/), [arxiv](https://arxiv.org/abs/2307.09288), [Meta news](https://about.fb.com/news/2023/07/llama-2/), [GitHub](https://github.com/facebookresearch/llama) ([Older LLaMA - v1](https://github.com/facebookresearch/llama/tree/llama_v1))

Diffusion Hyperfeatures: Searching Through Time and Space for Semantic Correspondence

Fusing diffusion features into per-pixel image features (specific for downstream tasks)

@ducha_aiki's tweet: https://twitter.com/ducha_aiki/status/1661310529846013955

arXiv: https://arxiv.org/abs/2305.14334

website: https://diffusion-hyperfeatures.github.io/

GitHub: https://github.com/diffusion-hyperfeatures/diffusion_hyperfeatures

My summary on HFPapers: https://huggingface.co/papers/2305.14334#64b79bcafe6a108d0308f9b5