I'm using Gemini AI to help me debug the Python code for building a local cybersecurity RAG vector database for a local DeepSeek AI, and it feels very ouroboros

#chromadb

From #FreeCodeCamp: "How to Build a Local #RAG App with #Ollama and #ChromaDB in the R Programming Language"

https://www.freecodecamp.org/news/build-a-local-rag-app-with-ollama-and-chromadb-in-r/

Security-RAG, or how to knock together an AI security tool on the cheap

LLMs and LLM agents keep making noise. Articles on how to build yet another RAG or agent keep getting churned out (heck, even we are one of those articles), huggingface releases smolagents, and quantization brings LLMs within reach of ordinary working folks and laypeople, letting them poke around in LM Studio or other apps. While businesses rush to roll out yet another virtual assistant, it often turns into a clumsy salesperson who not only keeps customers from reaching support, but also... Surprise me

https://habr.com/ru/articles/874820/

#llm #llmattack #llmприложения #ml #langchain #langfuse #chromadb #промптинъекции #искусственный_интеллект

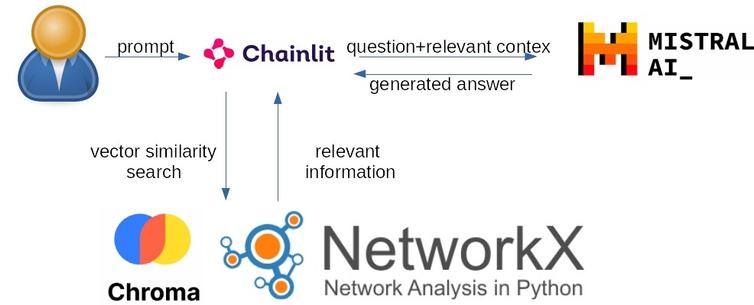

I had some fun putting together #KnowledgeGraph and RAG for #LLMs

The idea is that the vector embeddings used to extend the knowledge of an LLM are reinforced by a knowledge graph.

Here is the full explanation and code, including a GUI, permanent storage with #Chromadb, etc.:

https://medium.com/towards-artificial-intelligence/how-i-built-an-llm-app-based-on-graph-rag-system-with-chromadb-and-chainlit-f70c9cca4400

it seems even more cumbersome as they say in Matsumoto et al. https://academic.oup.com/bioinformatics/article/40/6/btae353/7687047

or maybe it has to do with the size of the graph, as suggested here https://arxiv.org/abs/2404.16130

Let me know what you think about it

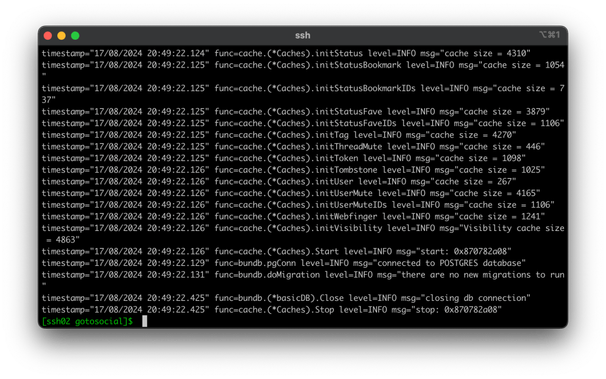

In June, I wrote about creating a private fediverse instance. For JWS Social, I am running GoToSocial hosted on K&T Host. GoToSocial supports decentralized social networking by adhering to ActivityPub, a protocol that enables interoperability across platforms like Mastodon, Flipboard, Pixelfed, and Threads. This compatibility allows users on different platforms to communicate and share content seamlessly, promoting a more connected and diverse social media ecosystem. So, you don’t need to use JWS Social to interact with a user on JWS Social. Up until now, there has only been one account on JWS Social. Today, we are going to create a second user.

Creating the account

K&T has a “Get Started” guide that includes how to SSH into your server. GoToSocial is a bit more bare-bones than Mastodon, so you need to SSH in to create a new user. K&T stores GoToSocial in /apps/gotosocial.

You can run …

./gotosocial --config-path config.yaml \
admin account create \
--username some_username \
--email some_email@whatever.org \
--password 'SOME_PASSWORD'

… from that folder to create a new GTS account. The result should look something like this …

In this case, we are creating the user Mr. Scoops. At this point, you can log in to the account using the username and password that you set above.

How to generate a GoToSocial access token for the account

If you are using Mastodon to create a bot, you can generate an access token from the development section of the settings page, but it is a little more complicated with GoToSocial. Luckily, @takahashim came up with a little bit of a cheat. They created an Access Token Generator that you can also download from GitHub.

If you fill out the form, click “Publish access_token”, and then authenticate, the access_token will appear on the right side of the page.

So, now that you have an access token, how do you test it? You can make a curl request like …

curl -X GET https://<your_instance>/api/v1/accounts/verify_credentials \
-H "Authorization: Bearer <access_token>"

If your instance is hosted on K&T, you might also need to specify a user agent.

How to post to the account from curl

To post a status using curl, you can run …

curl -X POST https://jws.social/api/v1/statuses \
-H "Authorization: Bearer YOUR_ACCESS_TOKEN" \
-H "User-Agent: Test Script" \
-d "status=Your message here" \
-d "visibility=unlisted"

The result should be an unlisted update to the account.
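For scripting, the same request can be sketched in Python using only the standard library. This is a minimal sketch rather than the original post's code: the function names build_status_request and post_status are hypothetical, and the token is the same placeholder as in the curl example.

```python
import json
import urllib.parse
import urllib.request

INSTANCE = "https://jws.social"  # same instance as in the curl example


def build_status_request(token, status, visibility="unlisted"):
    """Build (but do not send) the POST request that the curl command makes."""
    body = urllib.parse.urlencode({"status": status, "visibility": visibility})
    return urllib.request.Request(
        f"{INSTANCE}/api/v1/statuses",
        data=body.encode("utf-8"),  # form-encoded body, like curl -d
        headers={
            "Authorization": f"Bearer {token}",
            "User-Agent": "Test Script",
        },
        method="POST",
    )


def post_status(token, status, visibility="unlisted"):
    """Send the request and return the decoded JSON response."""
    request = build_status_request(token, status, visibility)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling post_status("YOUR_ACCESS_TOKEN", "Your message here") would send the request; keeping the request builder separate makes it possible to inspect the headers and body without touching the network.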

How to read mentions to the account from curl

Now that we can post to the account, we need a way to see which toots require replies.

curl -X GET https://jws.social/api/v1/notifications \
-H "Authorization: Bearer YOUR_ACCESS_TOKEN" \
-H "User-Agent: Test Script" \
| jq '.[] | select(.type == "mention") | {from: .account.acct, message: .status.content, responses: .status.replies_count}'

This should return a JSON object that looks like this …
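The same selection can also be done in Python once the notifications JSON has been parsed. This is a minimal sketch, assuming each mention notification carries the type, account.acct, status.content, and status.replies_count fields that the jq filter reads; the function name summarize_mentions is hypothetical and the sample data is made up.

```python
def summarize_mentions(notifications):
    """Keep only mentions and project the same three fields as the jq filter."""
    return [
        {
            "from": item["account"]["acct"],
            "message": item["status"]["content"],
            "responses": item["status"]["replies_count"],
        }
        for item in notifications
        if item.get("type") == "mention"
    ]


# Made-up sample data, shaped like a parsed /api/v1/notifications response.
sample = [
    {"type": "follow", "account": {"acct": "someone@example.org"}},
    {
        "type": "mention",
        "account": {"acct": "someone@example.org"},
        "status": {"content": "<p>Hello there</p>", "replies_count": 0},
    },
]

for mention in summarize_mentions(sample):
    print(mention)
```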

A simple reply bot in Node.js

The next natural step is to pull it all together into a script that watches for mentions and replies to them. For this example, we are going to use Node.js and check for mentions of Mr Scoops once every 60 seconds.

The result looks like this …

An AI-driven reply bot in Python

Last month, we looked at how to use Python, Ollama, and two models to build a RAG app that runs from the CLI. Using that as a guide, we could connect the RAG app to a GTS account. Let’s take a look.

So, what is happening here?

- The script fetches the flavors of the day from the API

- It creates the ChromaDB database (using the documents from above)

- It checks the GTS account for any new mentions once every 60 seconds

If it finds a GTS mention that was posted since the script was started and hasn’t been replied to yet:

- It generates an embedding for the prompt and retrieves the most relevant document

- It uses the response text to reply to the toot
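The "posted since the script was started and not yet replied to" check could be sketched as below. This is an assumption about how such a filter might look, not the original script: the names parse_timestamp and pending_mentions are hypothetical, and tracking answered status IDs in a set is one possible way to avoid replying twice.

```python
from datetime import datetime, timezone


def parse_timestamp(value):
    """Parse an ISO 8601 timestamp such as 2024-08-01T12:00:00.000Z."""
    # Older Python versions do not accept a trailing "Z" in fromisoformat().
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def pending_mentions(notifications, started_at, replied_ids):
    """Return mentions created after started_at whose status ID is not in replied_ids."""
    pending = []
    for item in notifications:
        if item.get("type") != "mention":
            continue  # ignore follows, favourites, and so on
        status = item["status"]
        if status["id"] in replied_ids:
            continue  # already answered in an earlier polling pass
        if parse_timestamp(status["created_at"]) <= started_at:
            continue  # posted before the script started
        pending.append(item)
    return pending


# Record the start time once, then reuse it on every polling pass.
started_at = datetime.now(timezone.utc)
replied_ids = set()
```

A polling loop would call pending_mentions once every 60 seconds, reply to each returned mention, and add its status ID to replied_ids.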

The result looks like this…

For this demo, I gave the model a custom prompt in an attempt to give it a little extra personality.

A few caveats

The Python example above assumes that Ollama is running on the same machine as the script. Before this goes into production, you would need to tweak it so that the script can at least be in a container. That is the reason why Mr Scoops is likely going to live on my laptop and only be turned on now and then.

Have any questions, comments, etc? Please feel free to drop a comment below.

https://jws.news/2024/lets-build-an-ai-driven-social-media-bot/

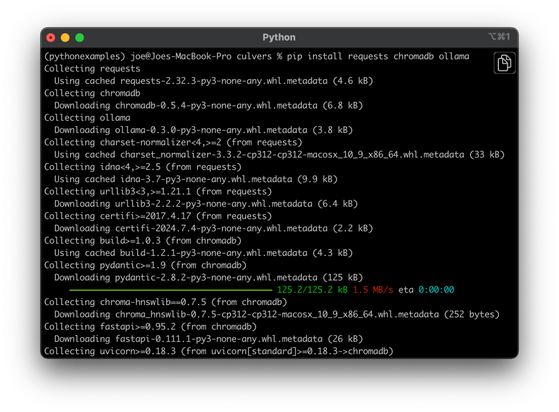

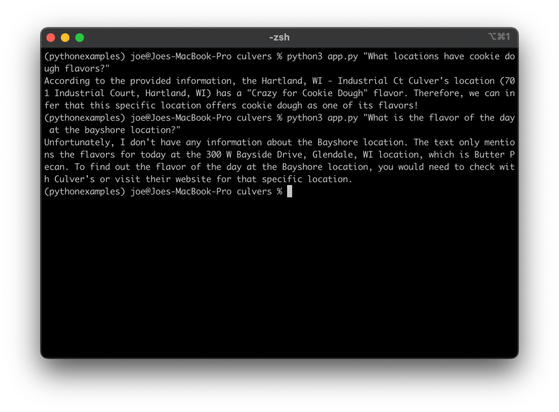

Earlier this week, we used two non-public APIs to pull in the flavor of the day data for Culver’s and Kopp’s. Back in May, we looked at How to get AI to tell you the flavor of the day at Kopp’s and I figured that the next natural step would be to do the same for Culver’s. Much like the last time, we will use Ollama, Python, and Chroma DB. This time, we are also going to be using our Culver’s Flavor of the Day API, though. Let’s start by installing the necessary modules.

Using the Kopp’s demo as a guide, we can easily build a Culver’s variant.

So, what is happening above?

- The script starts by importing a few required modules

- It fetches the flavor and location data from the API

- It creates a “docs” collection in the Chroma DB database

- It verifies that the user is asking a question

- It passes the prompt into the embedding model and retrieves the most relevant documents

- It uses the main model to generate an actual final response

- It outputs the response.

The result looks like this:

Since the new dataset covers just today’s flavors but covers the flavors for multiple locations, what you can do with the model is slightly different.

So, what do you think? How do you think that you could use this?

https://jws.news/2024/lets-play-more-with-milwaukee-custard-data/

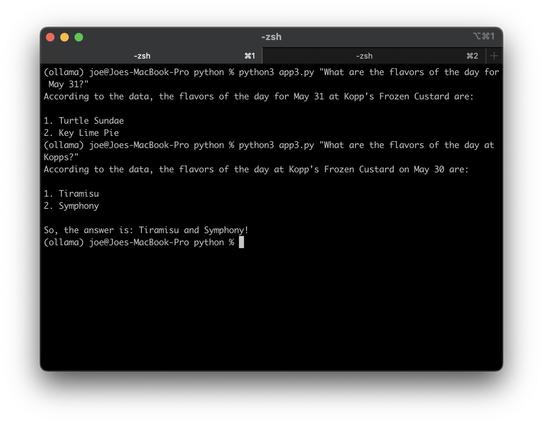

Previously, we looked at how to build a retrieval-augmented generation system using LangChain. As of last month, you can do the same thing with just the Ollama Python Library that we used in last month’s How to Write a Python App that uses Ollama. In today’s post, I want to use the Ollama Python Library, Chroma DB, and the JSON API for Kopp’s Frozen Custard to embed the flavor of the day for today and tomorrow. Let’s start with a very basic embedding example.

In the above example, we start by building an array of things that we want to embed, embed them using nomic-embed-text and Chroma DB, and then use llama3:8b for the main model.
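For readers without the original listing, the flow described here can be sketched roughly as follows. This is a minimal sketch, not the author's script: it assumes the ollama and chromadb packages are installed, that an Ollama server is running locally with nomic-embed-text and llama3:8b pulled, and the function names and prompt wording are hypothetical.

```python
def build_prompt(context, question):
    """Combine the retrieved document with the user's question."""
    return f"Using this data: {context}. Respond to this prompt: {question}"


def answer(question, documents):
    """Embed the documents, retrieve the closest one, and ask the main model."""
    # Third-party packages (pip install ollama chromadb), imported here so that
    # build_prompt() above can be used without them.
    import chromadb
    import ollama

    client = chromadb.Client()  # in-memory database
    collection = client.create_collection(name="docs")

    # Embed each document and store it alongside its vector.
    for i, doc in enumerate(documents):
        embedding = ollama.embeddings(model="nomic-embed-text", prompt=doc)["embedding"]
        collection.add(ids=[str(i)], embeddings=[embedding], documents=[doc])

    # Embed the question and retrieve the single closest document.
    query = ollama.embeddings(model="nomic-embed-text", prompt=question)["embedding"]
    results = collection.query(query_embeddings=[query], n_results=1)
    context = results["documents"][0][0]

    # Ask the main model to answer using the retrieved document.
    output = ollama.generate(model="llama3:8b", prompt=build_prompt(context, question))
    return output["response"]
```

Calling answer("What is today's flavor?", ["...", "..."]) with a list of short sentences would return the model's reply as a string.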

So, how do you get the live data for the flavors of the day? The API, of course!

This simple script gets the flavor of the day from a JSON API, builds an array of embeddable strings, and prints the result.

The next step is to combine the two scripts.

Two big differences that you will notice between the other two examples and this one are that the date no longer contains the year and that I added a statement of what today’s date is, so that you can ask for “Today’s flavors”.
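Building those strings might look something like the sketch below. The shape of the feed (a list of entries with "date" and "flavors" keys) is an assumption made for illustration, not the real Kopp's API, and the flavor names are placeholders.

```python
from datetime import date


def format_day(day):
    """Format a date without the year, e.g. 'Monday, May 20'."""
    return f"{day:%A, %B} {day.day}"


def build_documents(feed, today):
    """Turn feed entries into embeddable sentences, led by a statement of today's date."""
    docs = [f"Today is {format_day(today)}."]
    for entry in feed:
        day = date.fromisoformat(entry["date"])
        flavors = " and ".join(entry["flavors"])
        docs.append(f"The flavors of the day on {format_day(day)} are {flavors}.")
    return docs


# Hypothetical feed with placeholder flavor names.
feed = [
    {"date": "2024-05-20", "flavors": ["Flavor A", "Flavor B"]},
    {"date": "2024-05-21", "flavors": ["Flavor C", "Flavor D"]},
]

for doc in build_documents(feed, date(2024, 5, 20)):
    print(doc)
```

Because the first document states today's date, a question about "today" has something concrete to match against during retrieval.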

If you have any questions on how this works, later on today I am hosting a live webinar on Crafting Intelligent Python Apps with Retrieval-Augmented Generation. Feel free to stop by and see how to build a RAG system.

https://jws.news/2024/how-to-get-ai-to-tell-you-the-flavor-of-the-day-at-kopps/

@grimmy Every time you run the script, it gets the JSON feed from the Kopp’s website, builds a collection in a #ChromaDB vector database, populates it with what today is, what today’s flavor is, and what tomorrow’s flavor is, and then uses that for the embedding. I wasn’t expecting to need to tell it what today’s date is.

Back in January, we started looking at AI and how to run a large language model (LLM) locally (instead of just using something like ChatGPT or Gemini). A tool like Ollama is great for building a system that uses AI without dependence on OpenAI. Today, we will look at creating a Retrieval-augmented generation (RAG) application, using Python, LangChain, Chroma DB, and Ollama. Retrieval-augmented generation is the process of optimizing the output of a large language model, so it references an authoritative knowledge base outside of its training data sources before generating a response. If you have a source of truth that isn’t in the training data, it is a good way to get the model to know about it. Let’s get started!

Your RAG will need a model (like llama3 or mistral), an embedding model (like mxbai-embed-large), and a vector database. The vector database contains relevant documentation to help the model answer specific questions better. For this demo, our vector database is going to be Chroma DB. You will need to “chunk” the text you are feeding into the database. Let’s start there.

Chunking

There are many ways of choosing the right chunk size and overlap, but for this demo, I am just going to use a chunk size of 7500 characters and an overlap of 100 characters. I am also going to use LangChain’s CharacterTextSplitter to do the chunking. The overlap means that the last 100 characters of one chunk are duplicated at the start of the next database record.
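To make the overlap concrete, here is a simplified sliding-window chunker in plain Python. It is only an illustration of the idea: LangChain's CharacterTextSplitter splits on a separator and merges the pieces, so its chunk boundaries will differ, and the function name chunk_text is hypothetical.

```python
def chunk_text(text, chunk_size=7500, overlap=100):
    """Split text into chunks of chunk_size characters that share overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")

    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reached the end of the text
        start += step
    return chunks


# Small numbers make the overlap easy to see.
print(chunk_text("abcdefghij", chunk_size=4, overlap=1))
# → ['abcd', 'defg', 'ghij']
```

Each chunk starts with the last character of the previous one, which is the same effect the 100-character overlap has at full size.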

The Vector Database

A vector database is a type of database designed to store, manage, and manipulate vector embeddings. Vector embeddings are representations of data (such as text, images, or sounds) in a high-dimensional space, where each data item is represented as a dense vector of real numbers. When you query a vector database, your query is transformed into a vector of real numbers. The database then uses this vector to perform similarity searches.

You can think of it as being like a two-dimensional chart with points on it. One of those points is your query. The rest are your database records. What are the points that are closest to the query point?
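The two-dimensional picture can be sketched in a few lines of Python. This is a toy illustration with made-up points and a hypothetical nearest function; real vector databases work with hundreds of dimensions and often use cosine similarity or approximate search instead of exact Euclidean distance.

```python
import math


def nearest(query, records, k=1):
    """Return the names of the k records closest to the query point."""
    ranked = sorted(records, key=lambda name: math.dist(query, records[name]))
    return ranked[:k]


# Made-up database records as points on a 2D chart.
records = {
    "a": (0.0, 0.0),
    "b": (2.0, 2.0),
    "c": (5.0, 1.0),
}

print(nearest((1.8, 2.2), records, k=2))
# → ['b', 'a']
```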

Embedding Model

To do this, you can’t just use an Ollama model. You need to also use an embedding model. There are three that are available to pull from the Ollama library as of the writing of this. For this demo, we are going to be using nomic-embed-text.

Main Model

Our main model for this demo is going to be phi3. It is a 3.8B-parameter model that was trained by Microsoft.

LangChain

You will notice that today’s demo is heavily using LangChain. LangChain is an open-source framework designed for developing applications that use LLMs. It provides tools and structures that enhance the customization, accuracy, and relevance of the outputs produced by these models. Developers can leverage LangChain to create new prompt chains or modify existing ones. LangChain pretty much has APIs for everything that we need to do in this app.

The Actual App

Before we start, you are going to want to pip install tiktoken langchain langchain-community langchain-core. You are also going to want to ollama pull phi3 and ollama pull nomic-embed-text. This is going to be a CLI app. You can run it from the terminal like python3 app.py "<Question Here>".

You also need a sources.txt file containing the URLs of things that you want to have in your vector database.

So, what is happening here? Our app.py file is reading sources.txt to get a list of URLs for news stories from Tuesday’s Apple event. It then uses WebBaseLoader to download the pages behind those URLs, uses CharacterTextSplitter to chunk the data, and creates the vectorstore using Chroma. It then creates and invokes rag_chain.

Here is what the output looks like:

The May 7th event is too recent to be in the model’s training data. This makes sure that the model knows about it. You could also feed the model company policy documents, the rules to a board game, or your diary and it will magically know that information. Since you are running the model locally in Ollama, that information also stays on your machine. It is pretty awesome.

Have any questions, comments, etc? Feel free to drop a comment below.

https://jws.news/2024/how-to-build-a-rag-system-using-python-ollama-langchain-and-chroma-db/

#AI #ChromaDB #Chunking #LangChain #LLM #Ollama #Python #RAG

Vector DBs vs. Accuracy: Part 1

How I tried to "quickly" put together a local RAG (retrieval-augmented generation) that would find terms from Ozhegov's dictionary, and decided to share the experience here. Out on the internet it all looks simple. In practice, for my task, it turned out not to be. Accuracy...

https://habr.com/ru/articles/807957/

#llm #векторные_базы_данных #retrieval_augmented_generation #chromadb

[ Implementing semantic cache to improve a RAG system with FAISS. - Hugging Face Open-Source AI Cookbook ]

https://huggingface.co/learn/cookbook/semantic_cache_chroma_vector_database #RAG #ChromaDB #FAISS #Cache

#vectordatabases are amazing. Created a Q&A #ai running completely locally. And it is shockingly good whilst being shockingly easy to implement...

I just dump a folder of PDFs into #apache #tika. Concat them, split them by \n\n to get paragraphs. Yoink them into #chromadb. Done

Now I can pose a question that will query chroma to return 20 semantically similar documents. Those documents are dumped into a mixtral-instruct in combination with the original question.
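The split-and-prompt part of that pipeline is small enough to sketch in plain Python. This is only an illustration under assumptions: the Tika extraction and the chroma query are left out, the function names are hypothetical, and the prompt wording is made up rather than taken from the post.

```python
def split_paragraphs(text):
    """Split extracted text on blank lines and drop empty paragraphs."""
    return [part.strip() for part in text.split("\n\n") if part.strip()]


def build_rag_prompt(question, paragraphs):
    """Combine the retrieved paragraphs and the original question into one prompt."""
    context = "\n\n".join(paragraphs)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Made-up text standing in for the concatenated PDF extraction.
text = "First paragraph.\n\nSecond paragraph.\n\n\n\nThird paragraph."
paragraphs = split_paragraphs(text)
print(build_rag_prompt("What does the second paragraph say?", paragraphs[:2]))
```

In the setup described above, the list passed to build_rag_prompt would be the 20 paragraphs returned by the chroma query rather than a slice of the full list.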

The results are nearly perfect!

Retrieval-Augmented Generation in tech support, based on YandexGPT

The article walks through building a system that generates technical support answers. It uses a retrieval-enhanced approach known as Retrieval-Augmented Generation (RAG). The process is based on templates and real support question-and-answer pairs. The main tools are YandexGPT and ChromaDB.

https://habr.com/ru/articles/784298/

#yandexcloud #yandexgpt_для_бизнеса #chromadb #эмбеддинги #ai #python

#Chroma is hiring. It was founded recently by Jeff Huber and Anton Troynikov in #SanFrancisco. Their product #ChromaDB is a #VectorDatabase for storing and retrieving vector embeddings.

https://trychroma.notion.site/careers-chroma-9d017c3007c7478ebd85bad854101497