Бэктестинг торговых стратегий на Python с помощью Numba. Когда перевод расчетов на GPU действительно оправдан?

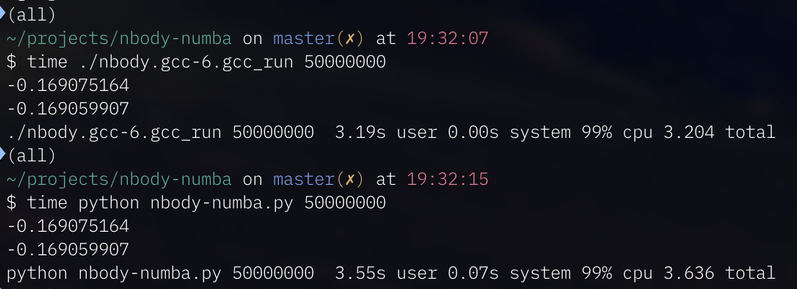

Бэктестинг — ключевой процесс в алгоритмической торговле. Он позволяет проверить стратегию на исторических данных, прежде чем запускать её в реальной торговле. Однако, чем больше данных и сложнее логика стратегии, тем дольше времени занимают вычисления. Особенно если стратегия анализирует тиковые данные и требуется протестировать множество комбинаций гиперпараметров стратегии, время вычислений может расти экспоненциально. В этой статье мы разберем, как реализовать бэктестинг на чистом Python, посмотрим сколько времени могут занимать вычисления, а также попробуем найти разные способы оптимизации. Python, как известно — это интерпретируемый язык, что означает, что код выполняется построчно во время исполнения программы, а не компилируется в машинный код заранее, как это происходит, например, в C или C++. Это делает разработку быстрее и удобнее, так как можно сразу видеть результаты выполнения кода и легко отлаживать программы. Но этот же факт, в свою очередь, приводит к тому, что Python заметно уступает в скорости более низкоуровневым языкам. К тому же Python использует динамическую типизацию, что требует дополнительных проверок и снижает производительность и если данных очень много, это может приводить к значительным сложностям, связанным с увеличением времени вычислений. Как же использовать ту легкость и скорость разработки Python и при этом сохранить адекватное время вычислений на больших объемах данных? В этой статье мы увидим, насколько перенос вычислений на GPU может увеличить производительность вычислений.