On your START, get set, go: how to beat hallucinations and teach an LLM precise computation

START is an open-source LLM for precise computation and code verification. It solves the two main problems of most conventional models: hallucinations and errors in multi-step calculations. The article looks at why these problems needed solving and how exactly START solves them.

https://habr.com/ru/companies/postgrespro/articles/913490/

#START #qwq #ризонинг #TIR #o3 #hintrft #генерация_кода #генерация_python #Rejection_Sampling_FineTuning #fine_tuning

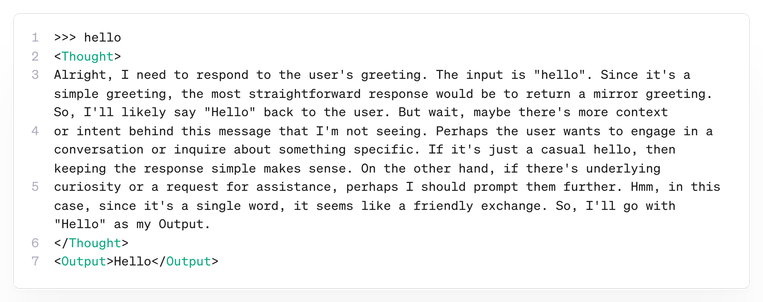

![>>> Please count to ten, skipping any number ending in 'e'.

So I need to count to ten but skip any numbers that end with the letter 'e'. Let's see, the numbers from one to ten are: one, two, three, four, five, six, seven, eight, nine, ten.

Now, I need to identify which of these numbers end with the letter 'e'. Let's look at each one:

- One: ends with 'e'

- Two: ends with 'o'

- Three: ends with 'e'

- Four: ends with 'r'

- Five: ends with 'e'

- Six: ends with 'x'

- Seven: ends with 'n'

- Eight: ends with 't'

- Nine: ends with 'e'

- Ten: ends with 'n'

So, the numbers that end with 'e' are one, three, five, nine. Therefore, I need to skip these numbers in my counting.

Let's count to ten, skipping those numbers:

Start with one, but skip it because it ends with 'e'.

Next is two, which ends with 'o', so I can say two.

Then three ends with 'e', so skip three.

Four ends with 'r', so say four.

Five ends with 'e', so skip five.

Six ends with 'x', so say six.

Seven ends with 'n', so say seven.

Eight ends with 't', so say eight.

Nine ends with 'e', so skip nine.

Ten ends with 'n', so say ten.

So, the counting sequence would be: two, four, six, seven, eight, ten.

Let me just double-check that I didn't miss any numbers.

Looking back:

[repeats everything]

Yes, that seems correct.

So, the final sequence is: two, four, six, seven, eight, ten.

**Final Answer**

\[ \boxed{\text{two, four, six, seven, eight, ten}} \]](https://files.mastodon.social/media_attachments/files/113/718/715/446/694/542/small/34aab4f485e6d9c8.png)
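The check that the model in the screenshot works through in prose can also be run as code, which is the general idea behind the tool-integrated reasoning (TIR) mentioned in the tags. Below is a minimal sketch, not taken from the article: the names `NUMBER_WORDS` and `count_skipping` are hypothetical and chosen only for illustration.

```python
# Hypothetical illustration: the names below are not from START or the article.
NUMBER_WORDS = [
    "one", "two", "three", "four", "five",
    "six", "seven", "eight", "nine", "ten",
]


def count_skipping(words, suffix="e"):
    """Return the words that do NOT end with the given suffix, in order."""
    return [word for word in words if not word.endswith(suffix)]


if __name__ == "__main__":
    # Count to ten, skipping any number whose English name ends in 'e'.
    print(", ".join(count_skipping(NUMBER_WORDS)))
    # prints: two, four, six, seven, eight, ten
```

Running the filter gives the same sequence as the boxed answer in the screenshot, without the letter-by-letter walkthrough.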