An OpenAI co-founder wants to build a bunker before releasing AGI. Ilya Sutskever publicly admitted he was terrified of what’s coming next.

https://www.news-cafe.eu/?go=news&n=13666

#AI #sutskever #openai #altman #technology #artificialintelligence #chatgpt #AGI

#sutskever

“We’ve achieved peak data and there’ll be no more.”

OpenAI’s cofounder and former chief scientist,

#Ilya #Sutskever, made headlines earlier this year after he left to start his own AI lab called

Safe Superintelligence Inc.

He has avoided the limelight since his departure but made a rare public appearance in Vancouver on Friday at the

Conference on Neural Information Processing Systems (NeurIPS).

“Pre-training as we know it will unquestionably end,” Sutskever said onstage.

This refers to the first phase of AI model development,

when a large language model learns patterns from vast amounts of unlabeled data

— typically text from the internet, books, and other sources.

During his NeurIPS talk, Sutskever said that,

while he believes existing data can still take AI development farther,

the industry is tapping out on new data to train on.

This dynamic will, he said, eventually force a shift away from the way models are trained today.

He compared the situation to fossil fuels:

just as oil is a finite resource,

the internet contains a finite amount of human-generated content.

“We’ve achieved peak data and there’ll be no more,” according to Sutskever.

“We have to deal with the data that we have. There’s only one internet.”

Next-generation models, he predicted, are going to “be agentic in real ways.”

Agents have become a real buzzword in the AI field.

While Sutskever didn’t define them during his talk, they are commonly understood to be autonomous AI systems that perform tasks, make decisions,

and interact with software on their own.

Along with being “agentic,” he said future systems will also be able to reason.

Unlike today’s AI, which mostly pattern-matches based on what a model has seen before,

future AI systems will be able to work things out step-by-step in a way that is more comparable to thinking.

The more a system reasons, “the more unpredictable it becomes,” according to Sutskever.

He compared the unpredictability of “truly reasoning systems” to how advanced AIs that play chess “are unpredictable to the best human chess players.”

“They will understand things from limited data,” he said.

“They will not get confused.”

On stage, he drew a comparison between the scaling of AI systems and evolutionary biology,

citing research that shows the relationship between brain and body mass across species.

He noted that while most mammals follow one scaling pattern, hominids (human ancestors) show a distinctly different slope in their brain-to-body mass ratio on logarithmic scales.

He suggested that, just as evolution found a new scaling pattern for hominid brains,

AI might similarly discover new approaches to scaling beyond how pre-training works today.

https://www.theverge.com/2024/12/13/24320811/what-ilya-sutskever-sees-openai-model-data-training

$5 billion for a 3-month-old #startup: the #SafeSuperIntelligence record ⤵️⤵️

https://innovationisland.it/safe-superintelligence-5-miliardi-dollari-startup/

Beyond #OpenAI, #Sutskever takes up the challenge of creating an #AI that surpasses humans... safely ⤵️⤵️

https://innovationisland.it/openai-sutskever-intelligenza-artificiale/

Ilya Sutskever forms new AI startup to pursue 'safe superintelligence'

Former OpenAI chief scientist promises efforts will be insulated from commercial pressures

#ai #ssi #ilyasutskever #sutskever #openai #superintelligence #technews

💡Ilya Sutskever, co-founder of OpenAI, founds Safe Superintelligence Inc. ( @ssi )

https://gomoot.com/ilya-sutskever-co-fondatore-di-openai-fonda-safe-superintelligence-inc-ssi

#AI #blog #ia #news #OpenAI #ssi #Sutskever #tech #tecnologia @ilyasut

Former OpenAI chief scientist: Ilya Sutskever founds AI startup for safe superintelligence https://www.computerbase.de/2024-06/ex-openai-chefwissenschaftler-ilya-sutskever-gruendet-ai-startup-fuer-sichere-superintelligenz/ #OpenAI #Sutskever

OpenAI has appointed Paul M. Nakasone,

a retired general of the US Army and a former head of the National Security Agency ( #NSA ),

to its board of directors, the company announced on Thursday.

OpenAI says Nakasone will join its Safety and Security Committee, which was announced in May and is led by CEO Sam Altman, “as a first priority.”

Nakasone will “also contribute to OpenAI’s efforts to better understand how AI can be used to strengthen cybersecurity by quickly detecting and responding to cybersecurity threats.”

#Nakasone was nominated to lead the NSA by former President Donald Trump, and directed the agency from 2018 until February of this year.

Before Nakasone left the NSA, he wrote an op-ed supporting the renewal of Section 702 of the Foreign Intelligence Surveillance Act, the surveillance program that was ultimately reauthorized by Congress in April.

OpenAI board chair Bret Taylor said in a statement: “General Nakasone’s unparalleled experience in areas like cybersecurity will help guide OpenAI in achieving its mission of ensuring artificial general intelligence benefits all of humanity.”

Recent departures tied to safety at OpenAI include co-founder and chief scientist Ilya #Sutskever, who played a key role in Sam Altman’s November firing and eventual un-firing,

and Jan #Leike, who said on X that “safety culture and processes have taken a backseat to shiny products.”

https://www.theverge.com/2024/6/13/24178079/openai-board-paul-nakasone-nsa-safety

Chief Scientist and superalignment lead Ilya Sutskever parts ways with OpenAI

Superalignment co-lead Jan Leike follows hours later

https://www.computing.co.uk/news/4208378/chief-scientist-superalignment-lead-ilya-sutskever-openai

After firing drama: Chief scientist Ilya Sutskever leaves OpenAI https://www.computerbase.de/2024-05/nach-entlassungsdrama-chefwissenschaftler-ilya-sutskever-verlaesst-openai/ #OpenAI #Sutskever

#Sutskever played a key role in #Altman’s dramatic firing and rehiring in November last year. At the time, Sutskever was on the board of #OpenAI and helped to orchestrate Altman’s firing.

Sutskever has long been a prominent researcher in the #AI field. He started his career working with #GeoffreyHinton, one of the so-called “godfathers of AI”.

OpenAI cofounder Ilya Sutskever departs #ChatGPT maker

https://www.rappler.com/technology/openai-ilya-sutskever-departs-chatgpt-maker/

Lichess' puzzle database (CC0 licensed! see: https://database.lichess.org/#puzzles) has a "cameo" in a pre-print (https://arxiv.org/pdf/2312.09390.pdf) about supervising stronger LLMs with weaker ones.

The pre-print is authored by #OpenAI 's Ilya #Sutskever and his superalignment team.

#SamAltman has passed the #BaptismofFire. :birdsite: He is now stronger than ever. The #OpenAiBoard has kicked itself out of the game. It shot itself in the foot. What about #Sutskever? Is he leaving the firm?

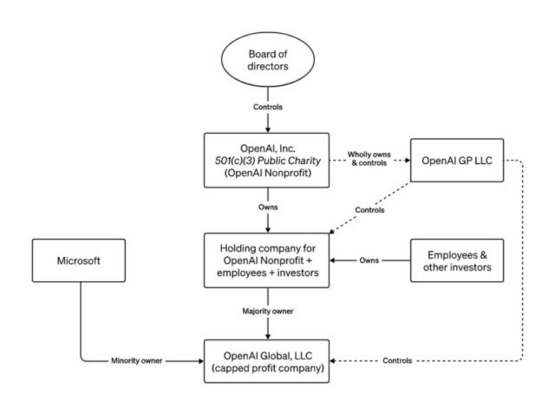

Trouble is brewing on the board: the CEO is fired, comes back after four days, and the board is restaffed. Who actually decides what there? What function does the supervisory board have, and who appoints it? Does anyone here know more about this?

#OpenAI #Sutskever #Altman #ChatGPT

#Sutskever evidently did not realize what he would set off with his #OpenAI coup. What will remain is a skeleton of a company that can no longer find investors and will at most do a little research. The rest are sitting in a new subsidiary at Microsoft, celebrating with #SamAltman and #GregBrockman.

(4/n)

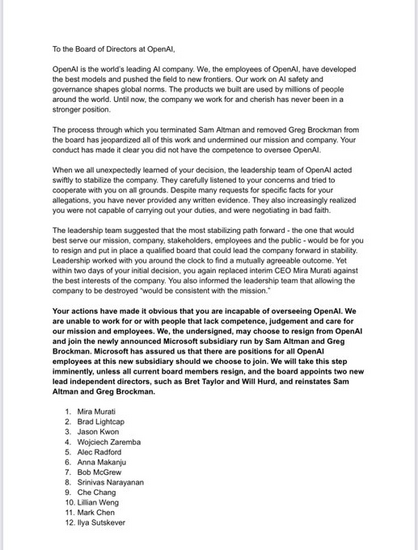

...letter’s signatories are “unable to work for or with people that lack competence, judgment and care for our mission and employees.”

Technology journalist #KaraSwisher has posted the letter on X (formerly Twitter) – and points out that OpenAI’s chief scientist, #IlyaSutskever, has signed it, even though he is a member of the board that fired Altman.

As flagged earlier, #Sutskever has posted on #X today that “I deeply regret...

There has been a hiatus in my Sentient Syllabus Writing, while I was lecturing and thinking through things.

But I have just posted an analysis on the #Sutskever / #Altman schism at #OpenAI and hope you find it enlightening.

Enjoy!

#ChatGPT #GPT4 #HigherEd #AI #AGI #ASI #ChatGPT #generativeAI #Bostrom #AI-ethics #Education #University #Academia

🌗 Chat highlights with Ilya Sutskever and Jensen Huang: AI today and the vision of the future - YouTube

➤ The state of AI today and the vision of the future

✤ https://www.youtube.com/watch?v=GI4Tpi48DlA

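This is a condensed version of “Chat Highlights: Ilya Sutskever and Jensen Huang: AI Today and Vision of the Future (March 2023).” In this video, we hear Sutskever and Huang discuss the current state of AI and their vision for the future.

+ Looking forward to hearing Sutskever’s and Huang’s insights.

+ This will be an exciting topic; progress in AI has always been interesting.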

#AI #聊天 #Sutskever #Huang

More details about the departure of #Altman and the role #Sutskever played; he apparently wanted to slow things down. This blog also gives a good explanation of the peculiar company structure.

Interestingly, the Chief Scientist of #OpenAI, Ilya Sutskever, still works at #OpenAI. Some suggest that he is behind Sam Altman’s departure. Read the interesting article by @marcoderksen #AI #Altman #Sutskever

https://koneksa-mondo.nl/2023/11/18/ilya-sutskever-de-ai-wetenschapper-die-de-wereld-vormgeeft/