🕯️ Fresh from the oven, a new book I co-edited with my colleagues and friends Jordi Vallverdú and Vicent Costa.

“There is no such thing as a natural death: nothing that happens to a man is ever natural, since his presence calls the world into question. All men must die: but for every man his death is an accident and, even if he knows it and consents to it, an unjustifiable violation.”

— Simone de Beauvoir, A Very Easy Death (1965)

In the digital age, death feels increasingly strange to us, as if it were moving farther away: abstracted, outsourced.

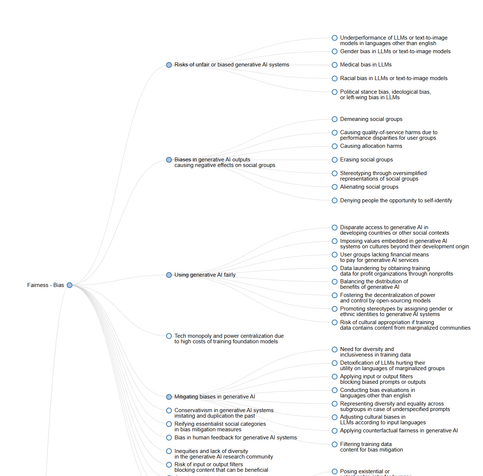

"SecondDeath. Experiences of Death Across Technologies" explores how artificial intelligence, robotics, and digital systems are reshaping our experience of mortality.

In a world mediated by algorithms and cybernetic agents, death is no longer only biological. It becomes symbolic, synthetic, sometimes programmable.

The question is no longer whether machines can die, but what their death reveals about our own: about grief, identity, continuity, and the boundaries of consciousness.

This volume gathers philosophical, cultural, scientific, ethical, psychological, and technological perspectives to rethink one of humanity’s most ancient enigmas — through the lens of our most recent inventions.

To confront death is, once again, to rethink life and reality itself.

https://link.springer.com/book/9783031988073

#SecondDeath #Philosophy #AI #Robotics #DigitalEthics #DeathStudies #Technology #CognitiveScience #Bioethics #Neuroethics #Psychiatry #Psychology #Thanatology #DigitalHumanities #CulturalStudies #Cybernetics #ArtificialLife #HumanMachineInteraction #AIEthics #Transhumanism #Posthumanism #Neuroscience #Existentialism #PhilosophyOfMind #EthicsInAI #ScienceAndSociety #academia