In the fast-moving world of information technology, I find myself increasingly drawn to the 'less glamorous' virtue of stability. A few examples illustrate this preference:

The appeal of a Linux distribution like Debian, which prioritizes core functionality and stability over grabbing headlines with experimental features. It's heartening to see Debian serve as a solid foundation on which others, Ubuntu among them, build and innovate.

The prudence of a six-month release cycle, which leaves room for thorough testing through multiple release candidates before the official launch. Projects that work this way often produce well-architected software even at the 'alpha' stage, while some software labeled 'production-ready' needs a constant stream of bug fixes.

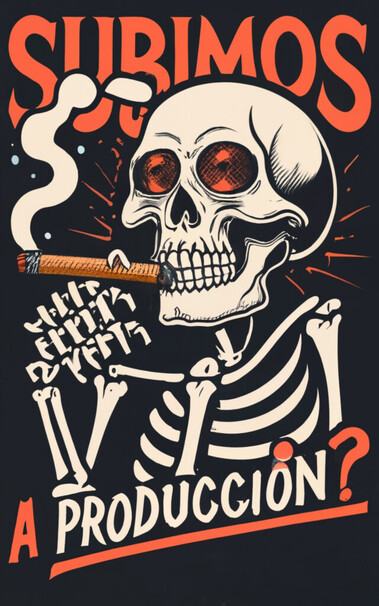

The common narrative equates rapid releases with innovation. The more I look into it, however, the more clearly I see the pitfalls of such a fast-paced cycle. It burdens downstream consumers and sets off a ripple effect across the software supply chain, causing significant problems that reach into our daily lives.

Imagine a near future where daily updates are the norm not just for our computers and mobile phones, but also for our cars 🚗, home appliances 🏡, and even farming equipment 🚜. Even if each update were streamlined to a single minute, the cumulative time and effort could be substantial: ten devices updating daily at one minute each already add up to more than an hour a week. (And what if I told you this future is far closer than you imagine?)

Are we truly prepared for such a hyper-connected, software-driven reality where updates are incessant?

I'd love to hear your thoughts: how might we find the sweet spot between nurturing innovation and maintaining stability in our tech-driven world?

Hashtag soup:

#SoftwareDevelopment #ITIndustry #TechnologyTrends #Innovation #Stability #ReleaseManagement #SoftwareSupplyChain #SoftwareSecurity #InformationSecurity #AppSec #InfoSec