Neuromorphic Chips: How the Brain Inspires the Machines of the Future

Imagine a computer that thinks like you. Not just crunching numbers, but learning, adapting, and solving problems faster than you can blink, all while sipping less power than a fridge light bulb. Sounds like sci-fi? Welcome to neuromorphic chips—tech that mimics the human brain and is set to redefine computing. Let’s dive into this wild ride where science meets nature’s genius and see how these chips are shaping tomorrow.

What Are Neuromorphic Chips?

Forget traditional processors that plod through data like accountants. Neuromorphic chips are the rock stars of tech, inspired by the ultimate supercomputer: your brain. They mimic neurons and synapses, sending info via short electrical “spikes,” just like your brain does. These chips don’t just process—they learn and adapt with incredible efficiency.

“Neuromorphic” combines “neuro” (nerve) and the Greek “morphē” (form). These chips borrow the brain’s wiring: thousands to millions of artificial neurons linked by synapses work in parallel, like a data symphony. The best part? On the workloads they suit, they can run on a fraction of the energy of regular chips.

How Do They Work? A Brain in Silicon

Picture your brain as a party where neurons only speak when they’ve got something juicy to share. Neuromorphic chips roll the same way:

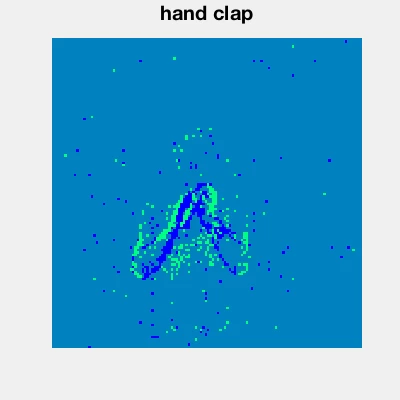

1. Spiking Neural Networks (SNNs): Regular neural nets compute every neuron on every pass. Spiking networks only fire when an input pushes a neuron past its threshold, like texting only big news. Less activity means less energy spent.

2. Parallel Power: These chips juggle data across multiple channels, like a brain planning dinner while grooving to music. Perfect for facial recognition or live video analysis.

3. Memory on Deck: Regular computers shuttle data between a separate processor and memory like rush-hour couriers. Neuromorphic chips keep memory right next to the artificial neurons that use it: far less traffic, far less waiting.

4. Self-Learning: These chips learn on the fly, like a brain memorizing a new route. Think robots that train themselves or cars that react faster than you.
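
Want to see the “only speak when there’s news” idea in action? Here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. It is an illustration only, not how any particular chip is built, and the function name and the threshold, leak, and reset values are assumptions chosen to keep the numbers easy to follow.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list of 0/1 values, one per input sample (1 = spike).
    All parameter values are illustrative assumptions.
    """
    potential = 0.0
    spikes = []
    for current in input_current:
        # Keep a fraction of the stored potential (the "leak"), then add input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)    # the neuron fires a spike
            potential = reset   # and its potential drops back to the reset value
        else:
            spikes.append(0)    # silence: no event is sent downstream
    return spikes


quiet = simulate_lif([0.0] * 10)   # no input at all
busy = simulate_lif([0.5] * 10)    # steady moderate input

print("quiet input:", quiet)
print("busy input: ", busy)
```

With no input the neuron never fires, and with steady input it fires only once its potential has built up past the threshold. Every silent step is work the rest of the network does not have to do.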

Why Are They Awesome?

1. Firefly Energy: Your brain runs on roughly 20 watts, less than a hallway bulb. Neuromorphic chips aim for that kind of efficiency, ideal for drones, smartwatches, or gadgets that need to last months without a charge.

2. Lightning Speed: Parallel, event-driven processing lets these chips react with very little delay on tasks that bog down regular processors. Your phone could spot faces in a crowd in milliseconds.

3. AI on Fire: Built for AI, these chips supercharge neural networks, making AI smarter and cheaper to run.

4. Scalable Future: From tiny sensors to brain-mimicking supercomputers, the designs are meant to scale by linking more cores and chips together.
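
Where could the energy savings come from? A rough way to see it is to count operations. The sketch below compares a layer that processes every input at every time step with one that only does work when an input spikes. It counts operations, not watts, and every number in it (layer sizes, the 5% firing probability, the function names) is an assumption made up for the illustration.

```python
import random


def dense_ops(n_inputs, n_outputs, n_steps):
    """Multiply-accumulates if every input is processed at every time step."""
    return n_inputs * n_outputs * n_steps


def event_driven_ops(spike_trains, n_outputs):
    """Accumulates if work happens only when an input neuron spikes."""
    total_spikes = sum(sum(train) for train in spike_trains)
    return total_spikes * n_outputs


random.seed(0)  # fixed seed so repeated runs give the same spike trains
n_inputs, n_outputs, n_steps = 100, 50, 200
firing_probability = 0.05  # assumed: each input spikes on about 5% of steps

# One list of 0/1 values per input neuron.
spike_trains = [
    [1 if random.random() < firing_probability else 0 for _ in range(n_steps)]
    for _ in range(n_inputs)
]

dense = dense_ops(n_inputs, n_outputs, n_steps)
sparse = event_driven_ops(spike_trains, n_outputs)
print(f"always-on: {dense} ops, event-driven: {sparse} ops "
      f"({sparse / dense:.1%} of the always-on count)")
```

The sparser the spikes, the bigger the gap. Real chips add their own overheads, so treat this as intuition rather than a benchmark.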

Who’s Making the Magic?

Neuromorphic chips aren’t a lab dream—they’re real, thanks to top innovators:

- IBM TrueNorth: Launched in 2014, it rocks a million neurons and 256 million synapses. IBM reported it analyzing video in real time while drawing roughly 70 milliwatts.

- Intel Loihi: Debuted in 2017 and followed by Loihi 2 in 2021, Loihi learns on-device, a good fit for robots or smart cameras.

- BrainChip Akida: Built for offline smart devices, like cameras that recognize faces without the cloud.

- SpiNNaker: A University of Manchester project to simulate large spiking neural networks in real time, built from a massive array of small ARM processor cores.

Where Will We See Them?

Most of this is still in labs and pilot projects, but here is where these chips could show up:

- Self-Driving Cars: Cars that see the road like humans and dodge pedestrians in a flash.

- Smart Robots: Robots learning new tasks solo, from factory work to surgical assists.

- Next-Gen Medicine: Analyzing MRIs or monitoring heartbeats in real time with precision.

- IoT and Smart Homes: Smarter speakers and cameras that work offline, sipping power.

- Near-Human AI: AI that gets context, emotions, and learns like a kid.

The Catch…

Every revolution has bumps:

- Programming’s Tough: Spiking networks need new coding tricks. Your usual deep-learning code won’t run as-is, although Python-based toolkits for spiking networks do exist.

- Not Universal: Great for AI and sensor data, but a poor fit for everyday number-crunching like spreadsheets or taxes.

- Pricey Development: Designing and building these chips takes years and serious money.

- Algorithm Hunt: New algorithms are needed to unlock their full power.
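
To give a flavor of the kind of learning rule these chips call for, here is a minimal sketch of spike-timing-dependent plasticity (STDP), a well-studied rule where a synapse gets stronger if the sending neuron fires just before the receiving one, and weaker if the order is reversed. This is a textbook-style illustration, not the rule any particular chip uses. The function names and constants (a_plus, a_minus, tau, and the weight limits) are assumptions picked for readability.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for one pre/post spike pair (times in milliseconds).

    The constants are illustrative assumptions, not values from real hardware.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Sender fired before receiver: strengthen, more so for short gaps.
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        # Sender fired after receiver: weaken, more so for short gaps.
        return -a_minus * math.exp(dt / tau)
    return 0.0  # simultaneous spikes: leave the weight alone in this sketch


def update_weight(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Apply the STDP change and keep the weight inside [w_min, w_max]."""
    new_weight = weight + stdp_delta(t_pre, t_post)
    return max(w_min, min(w_max, new_weight))


w = 0.5
w_causal = update_weight(w, t_pre=10.0, t_post=15.0)     # pre before post
w_acausal = update_weight(w, t_pre=15.0, t_post=10.0)    # pre after post
print(f"start: {w}, pre-before-post: {w_causal:.4f}, "
      f"pre-after-post: {w_acausal:.4f}")
```

Notice that the update only needs the timing of two spikes at one synapse. That locality is what makes rules of this kind attractive for learning directly on a chip.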

What’s Next?

Neuromorphic chips are like the early space race: early days, big dreams.

- Smart Everything: From AR glasses to medical implants, they’ll weave tech into life.

- Quantum Combo: Pairing with quantum computing is still pure speculation, but it is fun to imagine what the two could do together.

- Soulful AI: We might get AI that’s not just smart but feels almost alive.

In Conclusion

Neuromorphic chips bridge machines and humans, silicon and neurons. They’re not just powerful—they’re smart, efficient, and nature-inspired. We’re entering an era where machines might think, learn, and even dream like us. One day, a neuromorphic chip might ask, “What’s love?”—and we’ll be the ones stumped.

#NeuromorphicComputing #ArtificialIntelligence #BrainInspiredTech #FutureOfComputing #AI #MachineLearning #SpikingNeuralNetworks #TechInnovation #IoT #Robotics #EdgeComputing #EnergyEfficiency #IntelLoihi #IBMTrueNorth #BrainChip #SpiNNaker #SmartDevices #AutonomousSystems