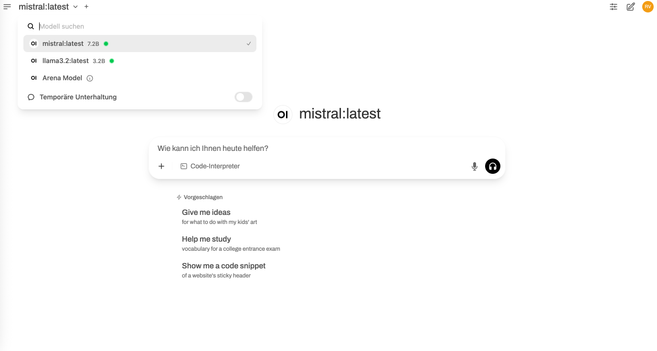

The blog post now has an update on how to put together a fancy interface so that the local AI looks like the "real thing".

#localAI

I discovered #LocalAI

https://notes.sklein.xyz/2025-06-12_1702/

For now, I'm not quite sure how to position it relative to Open WebUI, ollama, vLLM, etc.

🧠 Cloud-free. Token-free. Limit-free.

AgenticSeek is the AI agent that lives on your machine — not someone else’s server.

Want total control over scraping, planning, and research flows… without paying ₹16K/month?

👇 This one’s for the rebels.

#LocalAI #PrivacyFirst #AgenticSeek #AIagents

https://medium.com/@rogt.x1997/agenticseek-the-no-cloud-full-control-ai-agent-i-didnt-know-i-needed-until-now-4aecd4d03abc

🐧 Weekend project: I got local AI models running on Linux using llm + Ollama — no cloud, just open tools.

Wrote a quick blog post to share the setup.
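For readers who want to try something similar, here is a minimal sketch (not the setup from the blog post) of querying a locally running Ollama server from Python over its REST API. It assumes Ollama is listening on its default port 11434 and that a model has already been pulled; the model name `llama3.2` and the helper names `build_generate_request` and `ask_local_model` are placeholders chosen for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read())
    # With "stream": False, the reply is a single JSON object whose
    # "response" field holds the generated text.
    return body["response"]


# Example call (requires a running Ollama server and a pulled model):
# print(ask_local_model("llama3.2", "Say hello in one sentence."))
```

Nothing leaves the machine here: the request goes to `localhost`, which is the point of the "no cloud" setup.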

Or just use your AI locally 🦾 💻 🧠

I completely understand the concerns about relying too heavily on AI, especially cloud-based, centralized models like ChatGPT. The issues of privacy, energy consumption, and the potential for misuse are very real and valid. However, I believe there's a middle ground that allows us to benefit from the advantages of AI without compromising our values or autonomy.

Instead of rejecting AI outright, we can opt for open-source models that run on local hardware. I've been running large language models (LLMs) locally on my own hardware. This approach offers several benefits:

- Privacy - By running models locally, we can ensure that our data stays within our control and isn't sent to third-party servers.

- Transparency - Open-source models allow us to understand how the AI works, making it easier to identify and correct biases or errors.

- Customization - Local models can be tailored to our specific needs, whether it's for accessibility, learning, or creative projects.

- Energy Efficiency - Local processing can be more energy-efficient than relying on large, centralized data centers.

- Empowerment - Using AI as a tool to augment our own abilities, rather than replacing them, can help us learn and grow. It's about leveraging technology to enhance our human potential, not diminish it.

For example, I use local LLMs for tasks like proofreading, transcribing audio, and even generating image descriptions. Instead of ChatGPT and Grok, I utilize Jan.ai with Mistral, Llama, OpenCoder, Qwen3, R1, WhisperAI, and Piper. These tools help me be more productive and creative, but they don't replace my own thinking or decision-making.

It's also crucial to advocate for policies and practices that ensure AI is used ethically and responsibly. This includes pushing back against government overreach and corporate misuse, as well as supporting initiatives that promote open-source and accessible technologies.

In conclusion, while it's important to be critical of AI and its potential downsides, I believe that a balanced, thoughtful approach can allow us to harness its benefits without sacrificing our values. Let's choose to be informed, engaged, and proactive in shaping the future of AI.

CC: @Catvalente @audubonballroon

@calsnoboarder @craigduncan

#ArtificialIntelligence #OpenSource #LocalModels #PrivacyLLM #Customization #LocalAI #Empowerment #DigitalLiteracy #CriticalThinking #EthicalAI #ResponsibleAI #Accessibility #Inclusion #Education

Google has apparently announced Gemma 3n, an AI model that runs locally with 3-4 GB of RAM; it is supposed to be usable locally on phones too. A very nice development for data privacy. Also, AI developers are slowly starting to pay attention to optimizing language models. :android_logo:

#AI #yz #yapayzeka #gemma3n #Gemma #localai #mahremiyet #veri #Google #ArtificialIntelligence #telefon #Android

Running AI locally seems to me to be the future. It gives ownership back to the user and frees us from companies that want to record more and more of our personal data. Google is now launching a local AI version. https://techcrunch.com/2025/05/31/google-quietly-released-an-app-that-lets-you-download-and-run-ai-models-locally/ #localAI

TechCrunch: Google quietly released an app that lets you download and run AI models locally. “Called Google AI Edge Gallery, the app is available for Android and will soon come to iOS. It allows users to find, download, and run compatible models that generate images, answer questions, write and edit code, and more. The models run offline, without needing an internet connection, tapping into […]

Loving how versatile and capable Gemma 3 has been. Quite astonishing to see the evolution of these open models. And I think I'm only starting to scratch the surface.

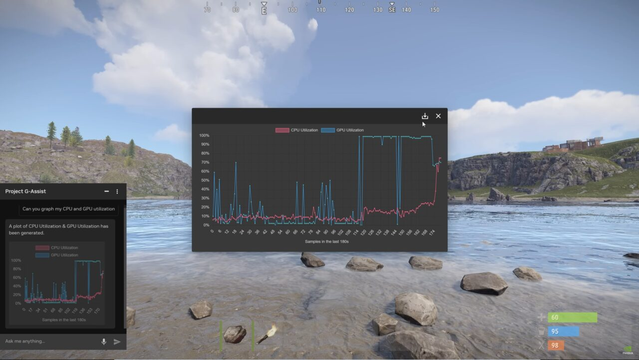

Want to run AI models locally but still trigger them from the cloud? 🤔 This video shows you how! #AI #n8n #localAI https://youtu.be/byr434VBeFk

@system76

I love #LLM, or as they're often called, #AI, especially when used locally. Local models are incredibly effective for enhancing daily tasks like proofreading, checking emails for spelling and grammatical errors, quickly creating image descriptions, transcribing audio to text, or even finding that one quote buried in tons of files that answers a recurring question.

However, if I wanted to be fully transparent to #bigtech, I would use Windows and Android with all the "big brotherly goodness" baked into them. That's why I hope these tools don't connect to third-party servers.

So, my question to you is: Do you offer a privacy-oriented, local-first or self-hosted LLM?

I'm not opposed to the general notion of using AI, and if done locally and open-source, I really think it could enhance the desktop experience. Even the terminal could use some AI integration, especially for spell-checking and syntax-checking those convoluted and long commands. I would love a self-hosted integration of some AI features. 🌟💻

#OpenSource #Privacy #AI #LocalModels #SelfHosted #LinuxAI #LocalLLM #LocalAI

MakeUseOf: Anyone Can Enjoy the Benefits of a Local LLM With These 5 Apps. “Cloud-based AI chatbots like ChatGPT and Gemini are convenient, but they come with trade-offs. Running a local LLM—the tech behind the AI chatbot—puts you in control, offering offline access and stronger data privacy. And while it might sound technical, the right apps make it easy for anyone to get started.”

Ollama Local LLM Platform Unveils Custom Multimodal AI Engine, Steps Away from Llama.cpp Framework

#Ollama #MultimodalAI #LocalLLM #AI #ArtificialIntelligence #MachineLearning #VisionModels #OpenSourceAI #LLM #AIEngine #TechNews #LocalAI

Looking for recommendations for a personal AI assistant I can run locally with memory and some degree of customization. Ideally open source, but happy to hear about small self-hostable apps too. Any favorites?

#AI #SelfHosted #OpenSource #LocalAI #PersonalAssistant #Privacy #LLM #TechTools #ProductivityApps

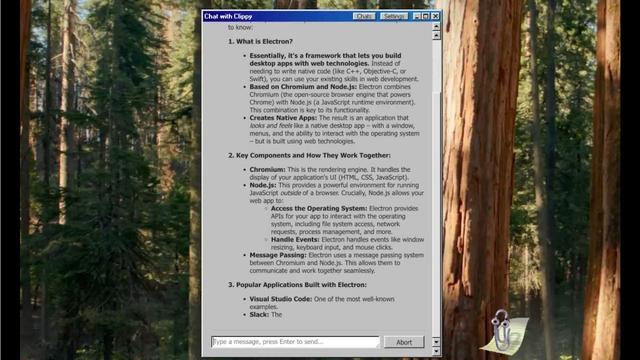

Microsoft Clippy Returns as AI Assistant, Empowered By LLMs You Can Run Locally on Your PC

#AI #Clippy #AIClippy #AIAssistants #LLMs #LocalAI #OpenSource #ElectronJS #LlamaCpp #GGUF #Gemma3 #Llama3 #Phi4 #Qwen3 #RetroTech #MicrosoftOffice #OnDeviceAI

I use #cursor_ai for work, but want something locally hosted for personal projects. I've tried #continue with ollama and several models but nothing even came close. Any other suggestions?

kind of want a laptop with a decent CPU w/good iGPU (some of AMD's products) but then also a fairly beefy NPU that can be used with *handwave* various open source #localAI platforms... since this doesn't really exist yet and I buy like 5 generations behind, I will probably be waiting a while