Findings show distinct brain regions handle individual concepts (frontal/parietal cortex) vs. relations (temporal cortex). LLMs predict brain activity, revealing complex semantic networks.

By @pbogdan.bsky.social

#maps #brain #processes #concepts #semantic #LLMs

www.biorxiv.org/content/10.1...

biorxiv.org/content/10.110...

#semantic

#dans_mon_flux :

Creating a semantic color palette | Go Make Things

https://gomakethings.com/creating-a-semantic-color-palette/

@nickbearded I am trying to stay on the execution front and iterate, but have to get the very basics done first. Let's talk more about this. Having it run over Tor or I2P may be a nice option. Being able to shortcut to specific scripts through bash is good OSINT due diligence; harnessing collective intelligence is really the cornerstone. #tags #semantic search #tagcloud #distributed decentralized federations #devops #bashcore scripting #xml #xslt #pagerank algo

😂 Oh wow, yet another #open-source 'better-than-everything' model! 🌟 This one apparently turns your grocery list into a #markdown #masterpiece 🎨🤖—a must-have for those who enjoy reading their #receipts in semantic markdown. 🚀 Because who doesn't need their shopping lists to be a structured, intelligent document? 🙄

https://huggingface.co/nanonets/Nanonets-OCR-s #grocery-list #semantic-technology #intelligent-shopping #HackerNews #ngated

I'm not saying that semantic versioning solves all your problems, but if an upgrade from 10.2.0.0 to 10.2.0.1 requires migrating databases, maybe you should consider it. It's not that bad.

#semantic #versioning #corporate #craziness #IT #DB #database
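
A minimal sketch of the point made in the versioning post above, assuming standard three-part MAJOR.MINOR.PATCH versions and assuming that a mandatory database migration counts as an incompatible change (the post does not state this; the classification is an assumption here). The helper names `parse`, `bump_kind`, and `bump_is_honest` are hypothetical.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int


def parse(text: str) -> Version:
    """Parse 'MAJOR.MINOR.PATCH'. Four-part strings such as 10.2.0.0 are rejected.

    Pre-release and build metadata suffixes are not handled in this sketch.
    """
    parts = text.split(".")
    if len(parts) != 3 or not all(p.isdigit() for p in parts):
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {text!r}")
    return Version(*(int(p) for p in parts))


def bump_kind(old: Version, new: Version) -> str:
    """Name the most significant component that differs between two versions."""
    if new.major != old.major:
        return "major"
    if new.minor != old.minor:
        return "minor"
    if new.patch != old.patch:
        return "patch"
    return "none"


def bump_is_honest(old: Version, new: Version, needs_migration: bool) -> bool:
    """Assumption: a required migration should be signalled by a major bump."""
    return (not needs_migration) or bump_kind(old, new) == "major"


if __name__ == "__main__":
    old, new = parse("10.2.0"), parse("10.2.1")
    print(bump_kind(old, new), bump_is_honest(old, new, needs_migration=True))
```

Under these assumptions, a release that forces a migration but only changes the last digit is flagged as mislabelled, which is the complaint the post is making.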

An #AI based #semantic search #development #company builds smart search #tools that understand what #users mean. They use #artificial #intelligence to find better, more accurate results. This helps #businesses like online #stores and #websites give users the right information quickly and easily.

@nickbearded I have to build it. There are a few layers here and there; it kind of started out as a generic portal quest... We will see if I can get anything built. I may have to just sell clusters first and then hire a coding genius or two. I do not think this or the 90 pt plan are particularly unique, but I just have to try and follow through: get a working prototype for the RAG pipelines and make it a cohesive dashboard. #osint #comp intel #realtime info #portal #embeddings/token/s #federated distributed inference #semantic search

Maybe we should introduce a button in Mastodon which says:

[X] Fancy Quote

That would render text bigger, smaller, thinner, thicker or whatever makes people use f5g raster images instead.

TOSID Update:

Added formal paper presentation

https://github.com/ha1tch/TOSID/blob/main/tosid-paper.md

As PDF:

https://github.com/ha1tch/TOSID/blob/main/tosid-paper.pdf

The TOSID (Taxonomic Ontological Semantic IDentification System) is a comprehensive taxonomic framework designed to uniquely identify and classify any conceivable entity across the universe. The system uses a structured alphanumeric code that embeds hierarchical information about an entity's fundamental nature, origin, scope, and specific identity.

Latest working (non-paper) version:

https://github.com/ha1tch/TOSID/blob/main/tosid-01.md

Repo:

https://github.com/ha1tch/TOSID

#foss #semantic #taxonomy #compsci #computation #informationtheory #ontology #tosid

I'm using the `:has()` #pseudo-class extensively in my own #css template, e.g.:

```
section:has(section) {
  border-top: 1px solid #ccc;
}
```

I love creating #semantic #html, and slightly tuning any `<section>` that contains nested `<section>`s reflects this semantic markup visually, with no need to add classes.

Something to add to the 'to read' pile! A useful systematic review of #DMP #semantic #annotation techniques -- the context being that such annotation can unlock machine-actionable DMPs.

Automated Semantic Annotation of Data Management Plans: A Systematic Review https://doi.org/10.5334/dsj-2025-016 #ResearchData #OpenData #OpenResearch #OpenScience

Writing #prompts is really the same as writing rules in rule-based #NLP, just at the #semantic level instead of the #syntactic one. #NLU #LLM #Linguistics

Chonky – a neural approach for text semantic chunking

https://github.com/mirth/chonky

#HackerNews #Chonky #Neural #Text #Chunking #Semantic #AI #MachineLearning

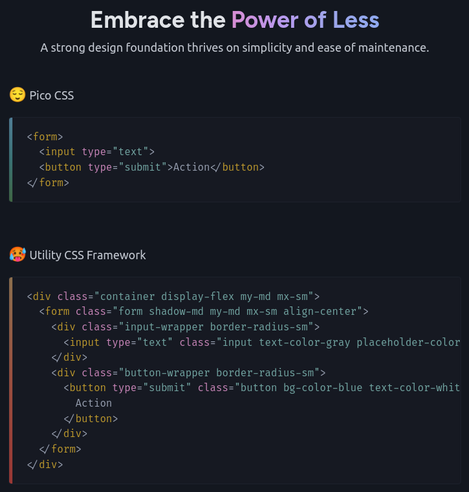

I love the promise of Pico CSS. ✨ 😍 https://picocss.com/ #semantic #html #css #less #lessismore

SciTech Chronicles, April 5th, 2025

#mouse #allergens #inflammation #RORγt+ #pharmacology #Osmanthus #LLM #5-MeO-MiPT #Artemis #regolith #ultrathin #efficiency #breakup #Africa #Angola #cooling #Congo #semantic #structures #multidimensional

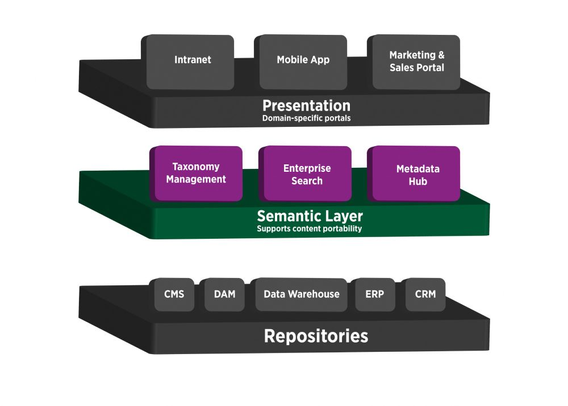

#ITByte: A #Semantic #Data #Layer is a metadata and abstraction layer that manages the relationships between data attributes to create a business view.

A semantic layer can help companies monetize their data and make accurate business decisions by aggregating multiple data sources.

https://knowledgezone.co.in/posts/Semantic-Data-Layer-642a7d8329dfcd4a107ff990
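
As a rough illustration of the semantic data layer described above, the sketch below maps business terms onto source-specific column names and uses that mapping to aggregate two sources into one business view. All data, table names, column names, and helper names here are hypothetical; this is a toy under those assumptions, not how any particular product implements a semantic layer.

```python
from collections import defaultdict

# Hypothetical raw rows from two separate sources, each with its own column names.
ORDERS = [
    {"cust_id": 1, "amt_usd": 120.0},
    {"cust_id": 2, "amt_usd": 80.0},
    {"cust_id": 1, "amt_usd": 50.0},
]
CUSTOMERS = [
    {"id": 1, "region_cd": "EU"},
    {"id": 2, "region_cd": "US"},
]

# The "semantic layer": business terms mapped to physical columns per source.
SEMANTIC_LAYER = {
    "customer": {"orders": "cust_id", "customers": "id"},
    "revenue": {"orders": "amt_usd"},
    "region": {"customers": "region_cd"},
}


def col(term: str, source: str) -> str:
    """Resolve a business term to the physical column name in a given source."""
    return SEMANTIC_LAYER[term][source]


def revenue_by_region(orders: list, customers: list) -> dict:
    """Build the business view 'revenue by region' from both sources."""
    region_of = {
        c[col("customer", "customers")]: c[col("region", "customers")]
        for c in customers
    }
    totals = defaultdict(float)
    for order in orders:
        region = region_of[order[col("customer", "orders")]]
        totals[region] += order[col("revenue", "orders")]
    return dict(totals)


if __name__ == "__main__":
    print(revenue_by_region(ORDERS, CUSTOMERS))  # {'EU': 170.0, 'US': 80.0}
```

The query code only ever refers to business terms such as "revenue" and "region"; renaming a physical column would require changing the mapping, not the query.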