For those who seek the truth, a note. I was born in the USSR. At university I was taught Marxist dialectics, historical #materialism, #economics and scientific #communism. I considered Marx to be the greatest intellectual. But the question of why life in the "backward" West was so much better than in the "progressive" USSR remained. Then I accidentally came across Mises' books...

#Materialism

Possessions

You can’t have everything.

Where would you put it?

slip:4a1349.

#7ForSunday #InspirationalQuotesBookReviewed_ #Materialism #Quotes #Snark #StevenWright

Wohi Sajda Hai Laaeq-E-Ehtamam

Ke Ho Jis Se Har Sajda Tujh Par Haraam

Worth offering is only that prostration

which makes all others forbidden acts.

وہی سجدہ ہے لائقِ اہتمام

کہ ہو جس سے ہر سجدہ تجھ پر حرام

(Bal-e-Jibril-142) Saqi Nama (ساقی نامہ) Sakinama

#Tawheed #lailahaillAllah #Sajda #Worship #FalseGods #ModernShirk #Materialism #GraveWorship #BreakTheIdols #IslamicReminder #BandaSirfAllahKa #ZehniGhulami #Saqinama #Iqbaliyat

Shakespeare: New Voices [BOOK] Deadline: June 30, 2025 #drama #theatre #Shakespeare #performance #adaptation #appropriation #historicism #empire #culture #materialism #TeamEnglish #renaissance #bardolatry #postcolonial #woke #socialjustice #theory #queer call-for-papers.sas.upenn.edu/cfp/2023/05/...

#darkmatter isn't #matter at all! But what #matters is that #science has wasted #decades chasing spooky ghosts of #physics instead of questioning #materialism as the real #problem.

Our small #planet is a #sacred oasis of life in the vastness of the cosmos. Our human life is a gift that we should use for our own development and for the #good of other people and all living beings in #nature.

Our most important #cultural and #civilizational task today is to break down the door to our inner #universe that has been nailed shut by crude, reductionist #materialism. The rest will then follow as if by itself.

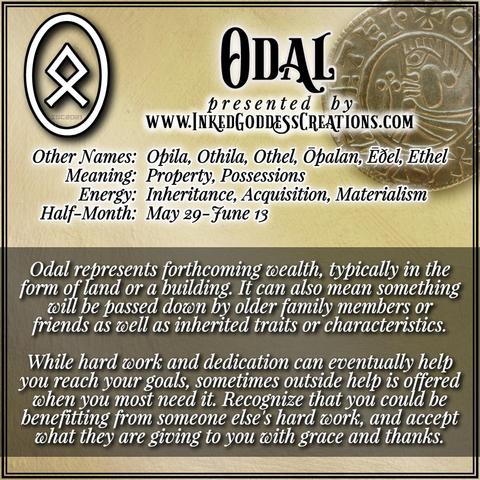

~ Odal ~

When this rune appears in a reading, it usually signifies the imminent arrival of financial or material assistance. It can also sometimes mean the realization of a physical or behavioral trait from your ancestors.

https://www.inkedgoddesscreations.com

#Odal #Posessions #Othila #Property #Inheritance #Materialism #Norse #Rune #Divination #Magick

"[Minimalism is] standing in a collapsing society, surrounded by endless noise and glittery bullshit, and choosing silence - just choosing silence."

"Minimalism is the new “abundance.” What if everything you own is making you miserable? Less is more, more or less. Consumerism and materialism is ruining our world, but simple living is building steam. In this brutally honest video, I tell the story of how I ditched consumerism, gave capitalism the finger, and found unexpected peace in a folding chair and one spoon. Minimalism isn’t aesthetic—it’s survival. If you’ve ever felt suffocated by your stuff, your debt, or your life… this one’s for you."

So many great thoughts - strongly recommend taking your time to watch this video and really LISTEN undistracted!

"The Joy of Less: A Minimalist's Middle Finger To Modern Life"

inv.nadeko.net/watch?v=4eTfNCYhqH4

(youtu.be/4eTfNCYhqH4)

#Minimalism #Minimalismus #TheFunctionalMelancholic #Abundance #Consumerism #Materialism #Capitalism #Peace #Clearity #Survival #MentalHealth

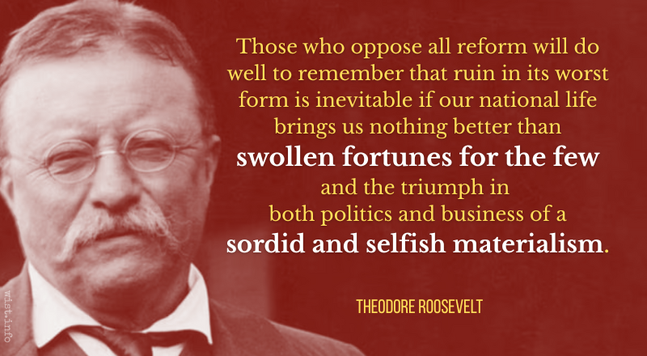

A quotation from Teddy Roosevelt

Those who oppose all reform will do well to remember that ruin in its worst form is inevitable if our national life brings us nothing better than swollen fortunes for the few and the triumph in both politics and business of a sordid and selfish materialism.

Theodore Roosevelt (1858-1919) American politician, statesman, conservationist, writer, US President (1901-1909)

Speech (1910-08-31), “The New Nationalism,” Osawatomie, Kansas

Sourcing, notes: wist.info/roosevelt-theodore/3…

#quote #quotes #quotation #qotd #teddyroosevelt #theodoreroosevelt #materialism #plutocracy #reform #revolution #ruin #wealth #progressivism

An essay from Peter Coffin tackling AI from the creative --- or at least, "artistic" --- side of things:

https://petercoffin.substack.com/p/plato-is-still-a-bitch

>If style becomes proprietary, then aesthetics become real estate. Not just the output, but the process—the brushstroke, the rhythm, the vibe. This is enclosure. It turns shared cultural language into fenced-off property. When you try to copyright a style, you’re not protecting yourself. You’re criminalizing everyone else.

That's certainly the goal with copyright (fenced-off property). Which makes the copyleft approach far more attractive to me: using the system to subvert the system, or at least, to describe a better iteration of it.

>“Plagiarism” becomes the alarm bell—not because something valuable was stolen, but because someone unapproved is now capable of producing something “valuable.” It threatens the scarcity model. It suggests that skill isn’t a divine gift or years of costly training—it’s a pattern, a reproducible technique, or worse: an aesthetic sensibility that was never truly ownable to begin with.

I mean, the training data that generative AI works with often *is* the result of years of costly training. Here, it feels like the creative/artistic world, traditionally protected with copyright law, is merely catching up to the industrial world, traditionally protected with patent law. The industrial world understands perfectly well that the research phase costs significantly more money than the reproduction phase.

In a perfect world, something resembling FRAND agreements would be in place, not for ownership or capital, but for the labourers responsible for the innovations. Perhaps the creative/artistic world could benefit from a similar arrangement. In any event, though I thoroughly dislike IP law in its present form, I suspect that even a socialist economy would have some flavour of it.

=========================

Coffin doesn't speak to the more foundational limitations of generative AI --- or at least of LLMs --- as documented in the Stochastic Parrots paper. Perhaps someone else could chime in from that perspective. It's the one I'm most concerned with as a returning nursing student, surrounded by peers who lean frequently on LLMs via ChatGPT or other models.

There is a similar jumping-off point, I suppose: the notion of who gets to "control" what "the truth" is. Prior to LLMs, fluency was widely used as a proxy for coherence: if it sounds authoritative, then it is. LLMs blow that approach to smithereens. Somewhat ironically, I suspect that a stronger reputation/citation/authorship management system, a more formalized "sign-off" mechanism, may ultimately be crucial in re-establishing some semblance of coherence, of shared reality.

(A short/micro story floating around the fediverse comes to mind, about a no-tech library containing only materials from well before the advent of LLMs, carefully guarded to prevent any form of scraping other than by scribes copying by hand (kinda reminiscent of Dune, honestly). I'd prefer an approach that utilizes PKI to formalize signed chains (or taxonomies, or trees, or webs, or ontologies) of claims.)

#socialism #communism #marxism #materialism #capitalism #ai #llm

🎧What is Environmental Psychology? w/ Linda Steg

University of Groningen

#Interview #Materialism #Science #Psychology #EnvironmentalPsychology

https://www.youtube.com/watch?v=HgOGtE5W-Zk&t=78s

After achieving my lifetime material goals of acquiring an Ericofon, a Space:1999 lunchbox, an Ironrite Health Chair, a Citroën 2CV, 39 manual typewriters, a picture book about Leigh Bowery, a Raleigh Twenty bicycle, two nice mechanical pencils, and a really comfortable pair of plastic sandals, I have to ask myself "What was it all for?"

—Matthew Segall, Prehensions, Propositions, and the Cosmological Commons

#mind #body #idealism #materialism #animism

—Suzanne Gieser, The Innermost Kernel: Depth Psychology and Quantum Physics. Wolfgang Pauli's Dialogue with C.G. Jung

#pauli #idealism #materialism

Mind-Blowing Confession: Ex-Rivers Gov Amaechi Says He Wore One Shirt for 4 Years https://www.rainsmediaradio.com/2025/04/mind-blowing-confession-ex-rivers-gov.html?utm_source=dlvr.it&utm_medium=mastodon Follow, Like & Share #Amaechi #NigerianPolitics #FashionStatement #Simplicity #Materialism

I think nuclear war might be less painful.

"So when Coon, also known for playing social climber Bertha Russell on The Gilded Age, mentioned in a recent TikTok video that she swears by lanolin-based Lansinoh Lanolin Nipple Cream as a lip balm—and that she has “converted a lot of people to nipple cream for their lips”—we knew we had to try it"

#marketing #consumerism #materialism #collapse #HollowMen #fools

https://www.nytimes.com/wirecutter/reviews/nipple-cream-for-lips

Woke Shakespeare: Rethinking Shakespeare for a New Era. Pre-order at 50% discount. #drama #theatre #Shakespeare #adaptation #appropriation #historicism #empire #culture #materialism #English #renaissance #bardolatry #postcolonial #woke #socialjustice #queer play.google.com/store/books/...