🧬 Day 2 of my Genetic Algorithms Bootcamp for C# devs is live!

Today we’re breaking down the core concepts: chromosomes, genes, populations, and more. Code that evolves? Yes please.

https://www.woodruff.dev/day-2-evolution-in-code-the-core-concepts/

#CSharp #GeneticAlgorithms #DotNet #AI

🧬 Day 1 of my Genetic Algorithms Bootcamp for C# devs is live!

Why should you care about evolution in your code? Because survival of the fittest isn't just for biology. 😎

👉 Learn more: https://www.woodruff.dev/day-1-the-survival-of-the-fittest-code-why-learn-genetic-algorithms-in-c/

#CSharp #GeneticAlgorithms #DotNet #AI #DevLife

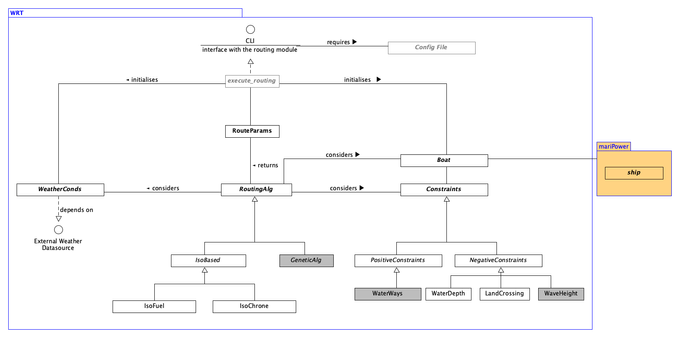

Shreyas Ranganatha introduces his Google Summer of Code project on improving the genetic algorithm for multi-objective optimization in the Weather Routing Tool!

Find out more in his first blog post:

https://blog.52north.org/2025/05/27/extending-the-weather-routing-tool-introductory-blog-post/

#GSoC2025 #WeatherRoutingTool #routeoptimization #geneticalgorithms #MariData

🧬 New blog series!

Evolve Your C# Code with AI starts June 2.

Learn how to build Genetic Algorithms in C# over 5 weeks — practical, testable, and powerful.

https://woodruff.dev/evolve-your-c-code-with-ai-a-5-week-genetic-algorithms-bootcamp-for-developers/

#GeneticAlgorithms #CSharp #DotNet #AI #CodeSmarter #EvolutionaryComputing

these algorithms "evolve" solutions to complex problems through processes of selection, crossover, and mutation. It's a powerful demonstration of how the fundamental mechanisms that shaped life on Earth can be applied to solve challenges in computing and beyond. Ready to explore this intriguing connection between biology and technology?

📢Click here to learn more📢! zhach.news/genetic-algorithms-mimic-evolution/

#GeneticAlgorithms #Evolution #ComputationalThinking #BeKindToAnimalsMonth
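For readers who want to see the selection, crossover, and mutation loop mentioned above in concrete form, here is a minimal sketch. It is not taken from the linked article: the toy problem (OneMax, maximizing the number of 1 bits), the function name `evolve`, and all parameter values are assumptions chosen for illustration.

```python
import random

def evolve(n_genes=20, pop_size=30, generations=60, mutation_rate=0.02, seed=0):
    """Evolve bit strings toward all ones (the OneMax toy problem)."""
    rng = random.Random(seed)
    fitness = sum  # fitness = number of 1 bits in the chromosome

    # Initial population: random bit-string chromosomes.
    population = [[rng.randint(0, 1) for _ in range(n_genes)]
                  for _ in range(pop_size)]
    best_history = []

    for _ in range(generations):
        best = max(population, key=fitness)
        best_history.append(fitness(best))

        def select():
            # Tournament selection: the fitter of two random individuals.
            a, b = rng.sample(population, 2)
            return a if fitness(a) >= fitness(b) else b

        next_population = [best[:]]  # elitism: keep the current best unchanged
        while len(next_population) < pop_size:
            mom, dad = select(), select()
            cut = rng.randrange(1, n_genes)  # single-point crossover
            child = mom[:cut] + dad[cut:]
            # Mutation: flip each gene with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            next_population.append(child)
        population = next_population

    best = max(population, key=fitness)
    best_history.append(fitness(best))
    return best, best_history
```

Calling `evolve()` returns the best chromosome found and the best fitness per generation. Because the best individual is always copied into the next generation, that history never decreases.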

@skryking Isn't that kinda how #AlphaEvolve by #GoogleDeepmind works? (Except that AlphaEvolve has a fully automated feedback loop, so you kinda have #geneticAlgorithms on steroids. And of course, the testing aspect in either case wouldn't be #canonTDD https://tidyfirst.substack.com/p/canon-tdd but #bigTestUpfront.)

Weekly Update at the Open Journal of Astrophysics – 15/03/2025

The Ideas of March are come, so it’s time for another update of papers published at the Open Journal of Astrophysics. Since the last update we have published two papers, which brings the number in Volume 8 (2025) up to 27 and the total so far published by OJAp up to 262.

The first paper to report is “Dark Energy Survey Year 6 Results: Point-Spread Function Modeling” by Theo Schutt and 59 others distributed around the world, on behalf of the DES Collaboration. It was published on Wednesday March 12th 2025 in the folder Cosmology and NonGalactic Astrophysics. It discusses the improvements made in modelling the Point Spread Function (PSF) for weak lensing measurements in the latest Dark Energy Survey (6-year) data and prospects for the future.

Here is the overlay, which you can click on to make larger if you wish:

You can read the officially accepted version of this paper on arXiv here.

The other paper published this week is “Exploring Symbolic Regression and Genetic Algorithms for Astronomical Object Classification” by Fabio Ricardo Llorella (Universidad Internacional de la Rioja, Spain) & José Antonio Cebrian (Universidad Laboral de Córdoba, Spain), which came out on Thursday 13th March. This one is in the folder marked Astrophysics of Galaxies and it discusses the classification of astronomical objects in the Sloan Digital Sky Survey SDSS-17 dataset using a combination of Symbolic Regression and Genetic Algorithms.

The overlay can be seen here:

You can find the “final” version on arXiv here.

That’s it for this week. I’ll have more papers to report next Saturday.

#arXiv250105781v2 #arXiv250309220v1 #AstronomicalObjectClassification #AstrophysicsOfGalaxies #CosmologyAndNonGalacticAstrophysics #DarkEnergySurvey #DES #DiamondOpenAccess #GeneticAlgorithms #OpenAccessPublishing #SloanDigitalSkySurvey #SymbolicRegression #TheOpenJournalOfAstrophysics #weakGravitationalLensing

Via the @ataripodcast: in a 25-minute video, Jean Michel Sellier, Research Assistant Professor at Purdue University, demonstrates the use of an #Atari800XL to train a neural network using a genetic algorithm instead of the memory-hungry technique of gradient descent.

https://hackaday.com/2025/02/21/genetic-algorithm-runs-on-atari-800-xl/
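To illustrate the general idea of evolving network weights instead of computing gradients, here is a minimal sketch. It is not the method from the video: the XOR task, the 2-2-1 network shape, and the names `predict`, `loss`, and `train` are all assumptions made for this example. The point it shows is that the search needs only forward evaluations of the network, never a backward pass.

```python
import math
import random

# The four XOR cases: (inputs, target).
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def predict(w, x):
    """2-2-1 network with tanh hidden units; w is a flat list of 9 weights."""
    h1 = math.tanh(w[0] * x[0] + w[1] * x[1] + w[2])
    h2 = math.tanh(w[3] * x[0] + w[4] * x[1] + w[5])
    return w[6] * h1 + w[7] * h2 + w[8]

def loss(w):
    """Sum of squared errors over the four XOR cases (lower is better)."""
    return sum((predict(w, x) - y) ** 2 for x, y in XOR)

def train(pop_size=40, generations=200, sigma=0.3, seed=1):
    """Evolve weight vectors; no gradients are computed anywhere."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(9)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=loss)
        history.append(loss(population[0]))
        parents = population[: pop_size // 4]  # truncation selection
        children = [parents[0][:]]             # elitism: keep the best as-is
        while len(children) < pop_size:
            a, b = rng.choice(parents), rng.choice(parents)
            child = [rng.choice(pair) for pair in zip(a, b)]  # uniform crossover
            child = [g + rng.gauss(0, sigma) for g in child]  # Gaussian mutation
            children.append(child)
        population = children
    best = min(population, key=loss)
    history.append(loss(best))
    return best, history
```

`train()` returns the best weight vector and the best loss per generation. With elitism the recorded loss never gets worse, although how close it gets to zero depends on the seed and the parameters.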

I've had a soft spot for Artificial Life for a long time. During the last AI Winter in the mid-1990s, I was spurred to get back into education and onto a career in commercial software development by Steven Levy's book "Artificial Life: The Quest for a New Creation". I loved that Artificial Life researchers borrowed well-understood mechanisms from genetics and implemented them in software to converge iteratively on solutions, in contrast to AI research, which was attempting to build models of categories which were not understood at all (and largely still aren't) - intelligence (whatever that is) and perception.

In subsequent years I wondered why I wasn't hearing any hype about Artificial Life; it turns out practitioners have been quietly getting on with solving problems using the technique. Meanwhile, yet again, AI boosters have blustered their way into the consciousness with another round of overcooked hype.

The Steven Levy book is still worth a read, if you can find it. (IIRC Danny Hillis and the Connection Machine folks get a mention too.)

(I don't know if any of the genetic algorithm folks turned out to be supporters of eugenics, as many of the current crop of AI boosters seem to be.)

https://archive.org/details/artificiallifequ0000levy_l1x2

https://en.m.wikipedia.org/wiki/AI_winter#The_setbacks_of_the_late_1980s_and_early_1990s

#solarpunk #permacomputing #geneticalgorithms #ArtificialLife #RetroComputing #VintageComputing #TESCREAL #Eugenics #Genetics

Trying to come up with more use cases for #GeneticAlgorithms to highlight this under-appreciated type of #AI. It is such a fun and exciting way to figure out problems. What are your favorite ways to use GAs?

What do I say, what do I do! I want to talk about genetic algorithms. Does #geneticalgorithms allow me to find people to talk to about that? What about #ai?

Here's one. If you're given a function, you can treat argmax of that function as a set-valued function varying over all subsets of its domain, returning a subset--the argmaxima let's call them--of each subset. argmax x∈S f(x) is a subset of S, for any S that is a subset of the function f's domain. Another way to think of this is that argmax induces a 2-way partitioning of any such input set S into those elements that are in the argmax, and those that are not.
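A minimal sketch of this set-valued view of argmax, assuming a finite domain so the maximum always exists. The names `argmax_set` and `partition` and the example function `f` are hypothetical, introduced only for illustration.

```python
def argmax_set(f, subset):
    """Return the elements of `subset` where f attains its maximum on `subset`."""
    items = list(subset)
    if not items:
        return set()
    best = max(f(x) for x in items)
    return {x for x in items if f(x) == best}

def partition(f, subset):
    """Split `subset` into (argmaxima, everything else)."""
    preferred = argmax_set(f, subset)
    return preferred, set(subset) - preferred

def f(x):
    # Hypothetical example function, peaked at x = 3.
    return -(x - 3) ** 2
```

On the full set `{0, 1, 2, 3, 4, 5}` the argmaxima are `{3}`. On the subset `{0, 1, 5}`, where 3 is not available, `partition(f, {0, 1, 5})` gives `({1, 5}, {0})`: the answer depends on which subset you ask about.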

Now imagine you have some way of splitting any subset of some given set into two pieces, one piece containing the "preferred" elements and the other piece the rest, separating the chaff from the wheat if you will. It turns out that in a large variety of cases, given only a partitioning scheme like this, you can find a function for which the partitioning is argmax of that function. In fact you can say more: you can find a function whose codomain is (a subset of) some n-dimensional Euclidean space. You might have to relax the definition of argmax slightly (but not fatally) to make this work, but you frequently can (1). It's not obvious this should be true, because the partitioning scheme you started with could be anything at all (as long as it's deterministic--that bit's important). That's one thing that's interesting about this observation.
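One plausible reading of the "relaxed" argmax for a function into n-dimensional space is Pareto non-dominance: keep every element that no other element in the subset beats on all coordinates. That reading is an assumption on my part, as are the names `dominates` and `pareto_argmax` and the example scores below; treat this as a sketch and not as the exact construction meant in the post.

```python
def dominates(u, v):
    """True if u is at least as good as v in every coordinate and better in one."""
    return (all(a >= b for a, b in zip(u, v))
            and any(a > b for a, b in zip(u, v)))

def pareto_argmax(g, subset):
    """Relaxed argmax: elements of `subset` whose g-value nothing in `subset` dominates."""
    items = list(subset)
    return {x for x in items
            if not any(dominates(g(y), g(x)) for y in items)}

# Hypothetical two-objective scores for four candidates.
SCORES = {"a": (3, 1), "b": (1, 3), "c": (1, 1), "d": (2, 2)}

def g(x):
    return SCORES[x]
```

Here `pareto_argmax(g, {"a", "b", "c", "d"})` is `{"a", "b", "d"}`, while `pareto_argmax(g, {"c", "d"})` is `{"d"}` and `pareto_argmax(g, {"c"})` is `{"c"}`. As with ordinary argmax, the preferred piece is determined by the subset under consideration.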

Another, deeper reason this is interesting (to me) is that it connects two concepts that superficially look different, one being "local" and the other "global". This notion of partitioning subsets into preferred/not preferred pieces is sometimes called a "solution concept"; the notion shows up in game theory, but is more general than that. You can think of it as a local way of identifying what's good: if you have a solution concept, then given a set of things, you're able to say which are good, regardless of the status of other things you can't see (because they're not in the set you're considering). On the other hand, the notion of argmax of a function is global in nature: the function is globally defined, over its entire domain, and the argmax of it tells you the (arg)maxima over the entire domain.

In evolutionary computation and artificial life, which is where I'm coming from, such a function is often called an "objective" (or "multiobjective") function, sometimes a "fitness" function. One of the provocative conclusions of what I've said above for these fields is that as soon as you have a deterministic way of discerning "good" from "bad" stuff--aka a solution concept--you automatically have globally-defined objectives. They might be unintelligible, difficult to find, or not very interesting or useful for whatever you're doing, but they are there nevertheless: the math says so. The reason this is provocative is that every few years in the evolutionary computation or artificial life literature there pops up some new variation of "fitnessless" or "objective-free" algorithms that claim to find good stuff of one sort or another without the need to define objective function(s), and/or without the need to explicitly climb them (2). The result I'm alluding to here strongly suggests that this way of thinking lacks a certain incisiveness: if your algorithm has a deterministic solution concept, and the algorithm is finding good stuff according to that solution concept, then it absolutely is ascending objectives. It's just that you've chosen to ignore them (3).

Anyway, returning to our friend argmax, it looks like it has a kind of inverse: given only the "behavior" of argmax of a function f over a set of subsets, you're often able to derive a function g that would lead to that same behavior. In general g will not be the same as f, but it will be a sibling of sorts. In other words there's an adjoint functor or something of that flavor hiding here! This is almost surely not a novel observation, but I can say that in all my years of math and computer science classes I never learned this. Maybe I slept through that lecture!

#ComputerScience #math #argmax #SolutionConcepts #CoevolutionaryAlgorithms #CooptimizationAlgorithms #optimization #EvolutionaryComputation #EvolutionaryAlgorithms #GeneticAlgorithms #ArtificialLife #InformativeDimensions

(1) If you're familiar with my work on this stuff then the succinct statement is: partial order decomposition of the weak preference order induced by the solution concept, when possible, yields an embedding of weak preference into ℝ^n for some finite natural number n; the desired function can be read off from this (the proofs about when the solution concept coincides with argmax of this function have some subtleties but aren't especially deep or hard). I skipped this detail, but there's also a "more local" version of this observation, where the domain of applicability of weak preference is itself restricted to a subset, and the objectives found are restricted to that subdomain rather than fully global.

(2) The latest iteration of "open-endedness" has this quality; other variants include "novelty search" and "complexification".

(3) Which is fair of course--maybe these mystery objectives legitimately don't matter to whatever you're trying to accomplish. But in the interest of making progress at the level of ideas, I think it's important to be precise about one's commitments and premises, and to be aware of what constitutes an impossible premise.

New paper out ✒️😊

We present a novel approach to performing fitness approximation in #geneticalgorithms (#GAs) using #machinelearning (#ML) models, focusing on dynamic adaptation to the evolutionary state.

https://www.mdpi.com/2078-2489/15/12/744

With talented grad students Itai Tzruia and Tomer Halperin, and my colleague Dr. Achiya Elyasaf.

New blog post: Evolutionary Computation Naming Madness

An old post, back from 2010, probably my first public rambling about metaphor heuristics. (I wonder what the ActivityPub plugin will make of me republishing a post with its original date.)

#bestiary #catSwarmOptimization #EvolutionaryComputation #geneticAlgorithms

Full Post: https://claus.castelodelego.org/archives/550

(Note: replies to this post will show up as comments in the blog too!)

Is the evolution metaphor still necessary or even useful for genetic programming? https://link.springer.com/article/10.1007/s10710-023-09469-9 #artificialintelligence #geneticprogramming #geneticalgorithms

Many years ago, I wrote a program that generated fractal music using just intonation. You could select which one you liked the most, and it would apply a genetic algorithm based on your feedback to evolve ever cooler pieces. Now I'm seeing image generators that work on similar principles and it makes me want to dig out the old code.

#GeneticAlgorithms

#GeneticProgramming

#AlgorithmicComposition

#JustIntonation

#Fractals