I've surprised myself with how much fun it is to write this pure #haskell #hdf5 parser. It is, in a way, gruesome: fiddling with raw bytes, guessing some details, getting new files with features still unsupported, and I have no clue how exciting it will be to implement all the common filters. But the language is delightful and I'm making constant glacial progress. That counts for a lot, I suppose...
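
For anyone wondering what the "fiddling with raw bytes" looks like at the very start of a file, here is a minimal sketch in Python (not the Haskell parser from the post). It checks the 8-byte HDF5 format signature and reads the superblock version plus the offset and length sizes. The function name `read_superblock_prefix` and the returned dictionary are illustrative assumptions. The sketch only looks at offset 0, although the format also allows the superblock at 512, 1024, and so on.

```python
import struct

# Fixed 8-byte signature that opens every HDF5 superblock.
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"


def read_superblock_prefix(buf: bytes) -> dict:
    """Parse the first few superblock fields, assuming the superblock is at offset 0."""
    if buf[:8] != HDF5_SIGNATURE:
        raise ValueError("signature mismatch at offset 0")
    if len(buf) < 16:
        raise ValueError("buffer too short for a superblock prefix")

    version = buf[8]
    if version in (0, 1):
        # Versions 0 and 1: four one-byte fields follow the version byte,
        # so the two size fields start at byte 13.
        size_of_offsets, size_of_lengths = struct.unpack_from("<BB", buf, 13)
    elif version in (2, 3):
        # Versions 2 and 3: the size fields directly follow the version byte.
        size_of_offsets, size_of_lengths = struct.unpack_from("<BB", buf, 9)
    else:
        raise ValueError(f"unsupported superblock version {version}")

    return {
        "version": version,
        "size_of_offsets": size_of_offsets,
        "size_of_lengths": size_of_lengths,
    }
```

With a real file, something like `read_superblock_prefix(open(path, "rb").read(64))` would be the starting point. Everything after these fields (addresses, checksums, object headers) is where the actual work begins.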

#hdf5

🚀 Excited to share more about Caterva2, your ultimate gateway to Blosc2/HDF5 repositories! 🚀

Caterva2 is designed to redefine how you interact with large datasets.

Want to see it in action? 🤔 We've just released a new introductory video showcasing Caterva2's main functionalities! 🎬

👉 https://ironarray.io/caterva2

#Caterva2 #Blosc2 #HDF5 #BigData #DataManagement #FreeSoftware #Python #DataScience #Tech

✨ Ever wondered how to effortlessly apply lazy expressions to massive tomographic HDF5 datasets and visualize them instantly in Cat2Cloud? 🤯

🎬 Check out this fantastic new video from our very own Luke Shaw! Discover how a bit of Python 🐍 magic and our user-friendly web interface simplify the process.

👇 Watch the full video to see the magic unfold: https://ironarray.io/cat2cloud

#HDF5 #Tomography #LazyEvaluation #DataVisualization #Cat2Cloud

#HDF5 User Group meeting, preparing for day two …

Zdenek from MAX IV #Synchrotron in Lund shows their “innovative data acquisition solutions with HSDS”.

Now it's Gerd from the #HDF5 group showing the history and future of the “most versatile container for sharing scientific and engineering data”.

Now Elena from the #HDF5 group / Lifeboat is revisiting SWMR (single writer, multiple readers) and file versioning features.

Now it's @FrancescAlted introducing the #Blosc2 #compression algorithm to reduce #HDF5 file size.

https://indico.desy.de/event/48471/timetable/#20250526

Moin from the European #HDF5 User Group Meeting @DESY in Hamburg!

We just got an introduction by Anton Barty to science and data storage at DESY, including particle physics, astroparticles, and photon science. Petabytes within a few days, which we'd like to write and read in parallel, with high bandwidth and low latency. Let's see where the problems and solutions are ;)

#GutenMorgen, dear tooters!

Just under ½ an hour until the JungeJungeFrühstück 🥱 …

The European HDF5 user group meeting runs at DESY until Wednesday. #HDF5 is the Hierarchical Data Format, version five; a data container in which we store our X-ray images at the #Synchrotron. And because the measurements keep getting bigger and more extensive, we pack 3000 images into a single file. Plus lots of metadata. But none of that fits into 1 toot …

Have 1 giga-great day!

#GutenMorgen, dear weekend tooters!

Still have to finish the exercise sheet, meet with the tutors, pack a few things … and then it's off to the #LANParty. Then on Sunday straight on to Hamburg for the #HDF5 User Group Meeting, three days of mini-conference about a file format.

After that the plan was a quick stop at home, but since I also have to go to the hospital for a check-up, I've packed right away for the long weekend in Berlin at the German Fetish Ball.

Have 1 wonderfully colorful Friday!

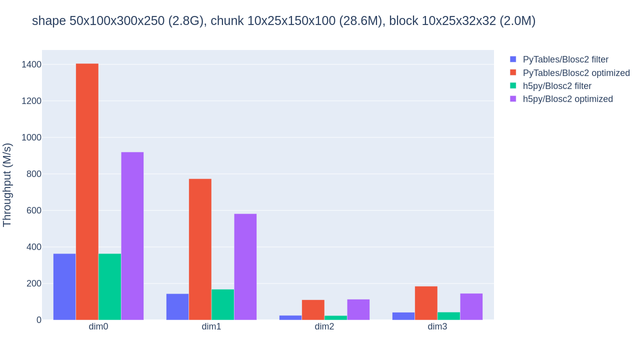

💡 Did you know you can supercharge your #HDF5 datasets with #Blosc2? 🚀

Leverage hdf5plugin (https://hdf5plugin.readthedocs.io) to integrate Blosc2 as a filter within HDF5. Create, write, and read data using popular Python wrappers like h5py or PyTables, while achieving excellent performance! 💨

More speed?

* h5py users: b2h5py offers optimized reads for n-dim slices.

* PyTables users: Optimized support is already built-in.

Learn more: https://www.blosc.org/posts/pytables-b2nd-slicing/

Compress Better, Compute Bigger :-)

🚀 Meet b2h5py: Supercharge your HDF5 data access! ⚡️

Unlock significantly faster reading of Blosc2-compressed HDF5 datasets. b2h5py's optimized slicing bypasses the HDF5 filter pipeline, so less data needs to be decompressed, giving impressive 2x-5x speed-ups compared to standard methods! 💨 https://github.com/Blosc/b2h5py

We will be talking about this at the upcoming 2025 European HDF5 Users Group Meeting: https://indico.desy.de/event/48471/timetable/#20250526

See you there!

#HDF5 #DataAccess #Blosc2 #DataScience #Optimization #Performance

#HDF5 is great. In short:

1. Originally, the project used the autotools build system. It installed an h5cc binary which, besides being a compiler wrapper, had extra options for querying information about the HDF5 installation.

2. Later, an alternative #CMake build system was added. That build system installs a simplified h5cc binary without those extra features.

3. Everyone who tried building with CMake quickly discovered that the new binary breaks most packages that use HDF5, so they went back to autotools and reported the problem to HDF5.

4. The authors closed the report, stating: "CMake h5cc changes have been noted in the Release.txt at the time of change - archived copy should exist in the history files."

5. The authors announced their intention to remove autotools support.

Which puts us in the following situation:

1. Practically everyone (at least #Arch, #Conda-forge, #Debian, #Fedora, #Gentoo) uses autotools, because building with CMake breaks too much.

2. Originally this was considered a problem in HDF5, so nobody reported it to the other packages. I suspect many distributions don't even know that HDF5 rejected the report.

3. The packages are still "broken", and I'm guessing their authors don't even know about the problem because, well, as I mentioned, practically all distributions still use autotools, and nobody reported problems to the other packages while testing the CMake build.

4. I'm not even sure this problem can be fixed "properly". I don't know this package, but it looks as if the functionality was removed without an alternative, so the most people can do is start using CMake themselves (sigh), which of course breaks their packages on every distribution that builds HDF5 with autotools, unless they also add code to support that second variant.

5. Everything suggests that HDF5 is a library whose authors don't care about their own users.

#HDF5 is doing great. So basically:

1. Originally, upstream used autotools. The build system installed an h5cc wrapper which, besides being a compiler wrapper, had a few config-tool style options.

2. Then, upstream added a #CMake build system as an alternative. It installed a different h5cc wrapper that no longer had the config-tool style options.

3. Downstreams that tried CMake quickly discovered that the new wrapper broke a lot of packages, so they reverted to autotools and reported a bug.

4. Upstream closed the bug, handwaving it as "CMake h5cc changes have been noted in the Release.txt at the time of change - archived copy should exist in the history files."

5. Upstream announced plans to remove autotools support.

So, to summarize the current situation:

1. Pretty much everyone (at least #Arch, #Conda-forge, #Debian, #Fedora, #Gentoo) is building using autotools, because CMake builds cause too much breakage.

2. Downstreams originally judged this to be an HDF5 issue, so they didn't report bugs to affected packages. Not sure if they're even aware that HDF5 upstream rejected the report.

3. All packages remain "broken", and I'm guessing their authors may not even be aware of the problem, because, well, as I pointed out, everyone is still using autotools, and nobody reported the issues during initial CMake testing.

4. I'm not even sure if there is a good "fix" here. I honestly don't know the package, but it really sounds like the config-tool was removed with no replacement, so the only way forward might be for people to switch over to CMake (sigh) — which would of course break the packages almost everywhere, unless people also add fallbacks for compatibility with autotools builds.

5. The upstream's attitude suggests that HDF5 is pretty much a project unto itself, and doesn't care about its actual users.
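
The "fallbacks for compatibility" idea from point 4 could look roughly like the sketch below. It is only an illustration under assumptions: that `h5cc -showconfig` is one of the config-tool style options meant above, and that a pkg-config module named `hdf5` is available as the alternative. The function name `hdf5_settings` is made up for this example.

```python
import shutil
import subprocess


def hdf5_settings(h5cc: str = "h5cc", pkg: str = "hdf5"):
    """Return (source, output) from the first probe that answers, else None."""
    probes = [
        # Assumption: the autotools-built wrapper understands -showconfig.
        ("h5cc", [h5cc, "-showconfig"]),
        # Assumption: a pkg-config module with this name is installed.
        ("pkg-config", ["pkg-config", "--modversion", pkg]),
    ]
    for source, cmd in probes:
        if shutil.which(cmd[0]) is None:
            continue  # tool not installed, try the next probe
        try:
            result = subprocess.run(cmd, capture_output=True, text=True, timeout=30)
        except (OSError, subprocess.TimeoutExpired):
            continue
        # A wrapper without the option is expected to fail or print nothing useful.
        if result.returncode == 0 and result.stdout.strip():
            return source, result.stdout
    return None
```

A build script would call `hdf5_settings()` once and branch on the returned source, so the same package keeps working on autotools-built and CMake-built HDF5 installs.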

Three Ways of Storing and Accessing Lots of Images in #Python

https://realpython.com/storing-images-in-python/

Using plain files, #LMDB, and #HDF5. It's too bad there's an explicit serialization step for the LMDB case. In C we'd just splat the memory in and out of the DB as-is, with no ser/deser overhead.

Also they use two separate tables for image and metadata in HDF5, but only one table in LMDB (with metadata concat'd to image). I don't see why they didn't just use two tables there as well.
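
To make the "no ser/deser overhead" point concrete, here is a small sketch of storing an image as raw bytes behind a fixed-size binary header instead of pickling an object. It is not the article's code: the header layout (`<HHHB`), the function names, and the plain `dict` standing in for the key-value store are all assumptions for illustration.

```python
import struct

# Hypothetical header: height, width, channels as uint16, label as uint8.
HEADER = struct.Struct("<HHHB")


def pack_record(pixels: bytes, height: int, width: int, channels: int, label: int) -> bytes:
    """Prefix the raw pixel bytes with a fixed-size metadata header."""
    if len(pixels) != height * width * channels:
        raise ValueError("pixel buffer does not match the given shape")
    return HEADER.pack(height, width, channels, label) + pixels


def unpack_record(value: bytes):
    """Read the header and return a zero-copy view of the pixel bytes."""
    meta = HEADER.unpack_from(value, 0)
    pixels = memoryview(value)[HEADER.size:]  # a view, not a copy
    return meta, pixels


store = {}  # stand-in for a key-value database such as LMDB
pixels = bytes(range(12))  # a tiny 2x2 image with 3 channels
store[b"img-0001"] = pack_record(pixels, 2, 2, 3, label=7)
meta, view = unpack_record(store[b"img-0001"])
```

Reading back only parses seven header bytes; the pixel data is used as-is. Keeping the metadata under a second key (the "two tables" variant) would work the same way, with `HEADER.pack(...)` and the pixel bytes stored as separate values.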

#HDF5 and #DASK are both supported in #skflow #TensorFlow! See examples in https://goo.gl/MSH3dr