#GPUHammer is the first attack to show #Rowhammer bit flips on #GPU memories, specifically on a GDDR6 memory in an #NVIDIA A6000 GPU. Our attacks induce bit flips across all tested DRAM banks, despite in-DRAM defenses like TRR, using user-level #CUDA #code. These bit flips allow a malicious GPU user to tamper with another user’s data on the GPU in shared, time-sliced environments. In a proof-of-concept, we use these bit flips to tamper with a victim’s DNN models and degrade model accuracy from 80% to 0.1%, using a single bit flip. Enabling Error Correction Codes (ECC) can mitigate this risk, but ECC can introduce up to a 10% slowdown for #ML #inference workloads on an #A6000 GPU.

#inference

🧠🚀 Apparently, someone thought we needed yet another #AI #inference engine, but this time exclusively for Apple's golden child, the #Silicon chip. Because clearly, the world was just yearning for a new way to "infer" things while stuck in a walled garden. 🌳🔒

https://github.com/trymirai/uzu #Apple #Tech #Innovation #WalledGarden #HackerNews #ngated

LLM Inference Handbook

#HackerNews #LLM #Inference #Handbook #LLMs #AIInference #MachineLearning #TechTrends

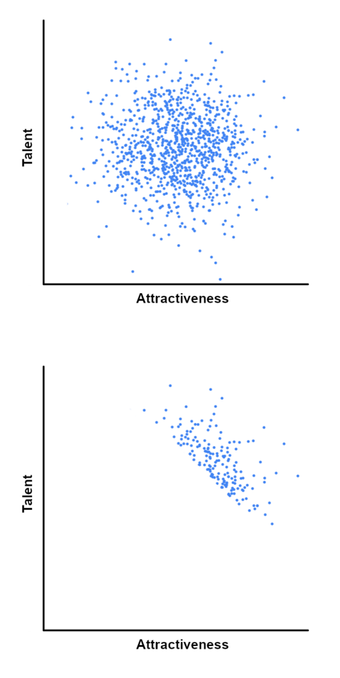

#statstab #383 Berkson's paradox

Thoughts: aka Berkson's bias, collider bias, or Berkson's fallacy. Important for interpreting conditional probabilities. Can produce counterintuitive patterns.
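A quick way to see the counterintuitive pattern is a small simulation. The sketch below is illustrative only: the two traits, the selection rule `x + y > threshold`, and all function names are assumptions, not something taken from the linked material. Two independent traits become negatively correlated once you look only at cases selected on their sum.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient for two equal-length lists."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def simulate(n=20000, threshold=1.0, seed=0):
    """Draw two independent standard-normal traits, then condition on a collider.

    Selection rule (an assumption for this demo): a case is observed
    only if x + y exceeds `threshold`.
    """
    rng = random.Random(seed)
    xs = [rng.gauss(0, 1) for _ in range(n)]
    ys = [rng.gauss(0, 1) for _ in range(n)]
    selected = [(x, y) for x, y in zip(xs, ys) if x + y > threshold]
    sx = [x for x, _ in selected]
    sy = [y for _, y in selected]
    return pearson(xs, ys), pearson(sx, sy)


r_all, r_selected = simulate()
print(f"full population: r = {r_all:+.3f}")
print(f"selected sample: r = {r_selected:+.3f}")
```

In the full population the correlation sits near zero; in the selected sample it is clearly negative, even though nothing links the two traits causally.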

Efficient inference for multiple LoRA adapters

LoRA is a popular method for fine-tuning large models on small datasets. At inference time, however, low-rank adapters run inefficiently, and merging them into the weights requires storing a separate full copy of the model for each adapter. MultiLoRA solves this problem by allowing inference with several adapters at once on top of a single base model. In this article we compare MultiLoRA inference performance in two popular frameworks, vLLM and TensorRT-LLM. We run the tests on stock release Docker images and evaluate which framework handles request batches more efficiently in scenarios close to offline and asynchronous inference.

https://habr.com/ru/articles/922290/

#multilora #offline_inference #async_inference #vllm #TensorRTLLM #tensorrt #peft #inference #benchmark #lora
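To make the trade-off in the post above concrete, here is a toy sketch in plain Python. It is not the vLLM or TensorRT-LLM API; the matrices, adapter names, and helper functions are all hypothetical, and the usual LoRA scaling factor is left out for brevity. It shows that applying a low-rank update `B @ A` on top of one shared base matrix `W` gives the same output as a merged per-adapter copy `W + B @ A`, without storing that copy.

```python
def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def merged_weights(w, adapter):
    """Merged variant: build a full copy W + B @ A for one adapter."""
    b, a = adapter
    delta = matmul(b, a)
    return [[wij + dij for wij, dij in zip(wr, dr)] for wr, dr in zip(w, delta)]


def multi_lora_forward(w, adapters, batch):
    """Unmerged variant: one shared W, plus a per-request low-rank correction.

    `batch` is a list of (adapter_name, input_vector) pairs, so requests
    for different adapters can share the same base weights.
    """
    outputs = []
    for name, x in batch:
        base = matvec(w, x)              # shared base model
        b, a = adapters[name]
        delta = matvec(b, matvec(a, x))  # B @ (A @ x), rank-r cost
        outputs.append([p + q for p, q in zip(base, delta)])
    return outputs


# Hypothetical 2x2 base layer and two rank-1 adapters.
W = [[1, 2], [3, 4]]
ADAPTERS = {
    "a": ([[1], [0]], [[1, 1]]),
    "b": ([[0], [2]], [[1, -1]]),
}
batch = [("a", [1, 2]), ("b", [1, 2])]
outputs = multi_lora_forward(W, ADAPTERS, batch)
print(outputs)
```

Real serving frameworks batch these corrections with specialised kernels; the sketch only illustrates why one base model plus small adapters can replace several full copies.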

#statstab #370 The Problem with “Magnitude-based Inference”

Thoughts: An appealing but flawed approach. Good overview of the error inflation issue.

Compiling LLMs into a MegaKernel: A Path to Low-Latency Inference

#HackerNews #CompilingLLMs #MegaKernel #LowLatency #Inference #MachineLearning #AI

#statstab #368 The Fisher/Pearson Chi-Squared Controversy: A Turning Point for Inductive Inference

Thoughts: An overview of the difference between Pearson's descriptive view and Fisher's inferential view of χ².

#fisher #pearson #inference #chisquared

https://genepi.qimr.edu.au/contents/p/staff/1983BairdBJPS105-118.pdf

Tokasaurus: An LLM Inference Engine for High-Throughput Workloads

https://scalingintelligence.stanford.edu/blogs/tokasaurus/

#HackerNews #Tokasaurus #LLM #Inference #Engine #HighThroughput #AI #TechInnovation

Why DeepSeek is cheap at scale but expensive to run locally

https://www.seangoedecke.com/inference-batching-and-deepseek/

#HackerNews #DeepSeek #Inference #Batching #Cheap #Expensive #To #Run #Local #Scale

Nvidia Dynamo: If disaggregation is Dynamo’s backbone, the smart management of the KV cache is its brain

At around 300 tokens per second per user, you can generate 30 times more tokens per normalized #GPU

www.vastdata.com/sharedeveryt...

#AI #Inference via @nicolehemsoth.bsky.social


Ah, behold the majestic #DeepSeekR1-0528, a model so #mysterious and elusive that not even #Inference #Providers dare to touch it. 🤔✨ With a grand total of zero downloads last month, it's clear that this #685B parameter behemoth is the hottest #AI sensation—if only in its creator's wildest dreams. 🐒💭

https://huggingface.co/deepseek-ai/DeepSeek-R1-0528 #Parameters #HottestSensation #HackerNews #ngated

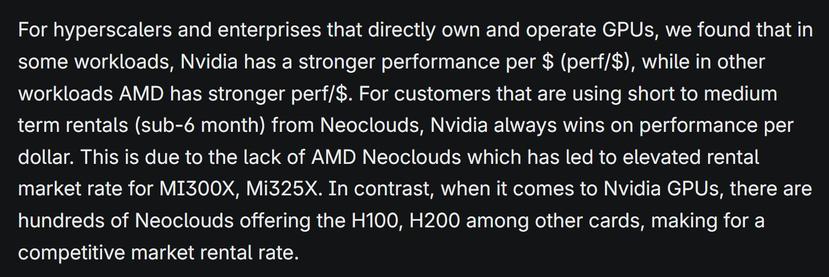

AMD vs NVIDIA #Inference Benchmark: Who Wins? – Performance & Cost Per Million Tokens Report by @SemiAnalysis_ tldr: It's not a simple answer semianalysis.com/2025/05/23/a... #AI #GPU #HPC via @ogawa-tadashi.bsky.social

Designing type inference for high quality type errors

https://blog.polybdenum.com/2025/02/14/designing-type-inference-for-high-quality-type-errors.html

#HackerNews #Designing #type #inference #for #high #quality #type #errors #typeinference #typeerrors #programming #languages #softwaredevelopment #techblog

'On Consistent Bayesian Inference from Synthetic Data', by Ossi Räisä, Joonas Jälkö, Antti Honkela.

http://jmlr.org/papers/v26/23-1428.html

#bayesian #privacy #inference

“ #Inference is actually quite close to a #theoryofeverything – including #evolution, #consciousness, and #life itself. It is #abduction all the way down.” (The process of abduction may be much more pervasive than the relatively rare use of the word “abduction” would suggest) aeon.co/essays/consc...


LLM-D: Kubernetes-Native Distributed Inference at Scale

https://github.com/llm-d/llm-d

#HackerNews #LLM-D #Kubernetes #Distributed #Inference #Scale #MachineLearning

Inference, not training, represents an increasing majority of #AI’s energy demands and will continue to do so in the near future. It’s now estimated that 80–90% of computing power for AI is used for #inference. https://www.technologyreview.com/2025/05/20/1116327/ai-energy-usage-climate-footprint-big-tech/

🎉 Behold! The #llmd #community emerges from the depths of the #tech abyss, promising the holy grail of Kubernetes-native distributed #LLM #inference. 🤖 Because who doesn't want their #AI #deployments served with extra buzzwords and a side of "competitive performance per dollar"? 🍽️

https://llm-d.ai/blog/llm-d-announce #Kubernetes #news #HackerNews #ngated