The #Fediverse’s web #experience suffers from seven deadly #UXsins, including overwhelming #complexity, #inconsistent #navigation, and remote #interaction #difficulties. These issues, stemming from the Fediverse’s #decentralised nature, hinder user #onboarding and #retention. https://www.timothychambers.net/2025/06/18/113327.html?Fedizen.EU #Fedizen #Fediverse #ActivityPub #News

#interaction

Here are four core requirements that should guide design for powerful connection and learning at large meetings:

https://www.conferencesthatwork.com/index.php/event-design/2018/07/connection-and-learning

#meetings #EventDesign #crowdsourcing #interaction #connection #eventprofs

How are you keeping your community active and engaged on your BuddyPress-powered site? We’d love to hear about the strategies, tools, or features you use to spark conversations and bring members together.

Whether it’s creative content ideas, fun activities, or clever ways you encourage interaction, please share what’s working for you.

And if you have screenshots or examples, don’t hesitate to show them!

#wordpress #buddypress #community #engagement #conversation #interaction #activities

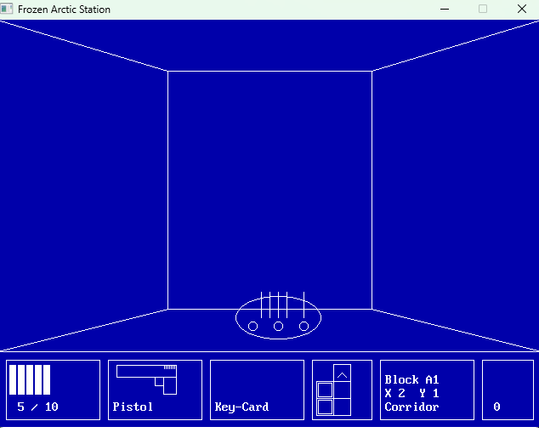

Frozen arctic station – 80s stylization for menu and monster out of liquids

https://dimalinkeng.blogspot.com/2025/06/frozen-arctic-station-80s-stylization.html

#retrogame #8bitgame #8bitcomputers #msdos #8bit #ice #cold #snow #arctic #scifi #sciencefiction #danger #monsters #80s #bmovie #vhs #quest #explore #showmenu #thriller #incident #retroprogramming #liquids #interaction #gamedev #devlog #vintagegame #fromeighties #strangeexpirements

🧵 5/n media work is so boring and bad that you should pay #followers compensation to #follow you. Learn #storytelling in #socialMedia and pay people who know their work in #sciComm!

🟡 You can probably already guess the biggest problem for many: social media means #communication, and the #Fediverse in particular is not made for stubborn broadcasting. #Communicate with people, ask questions, provoke #interaction and *interact*. Answer comments. #Organisations need their people and must allow them to talk. They are your

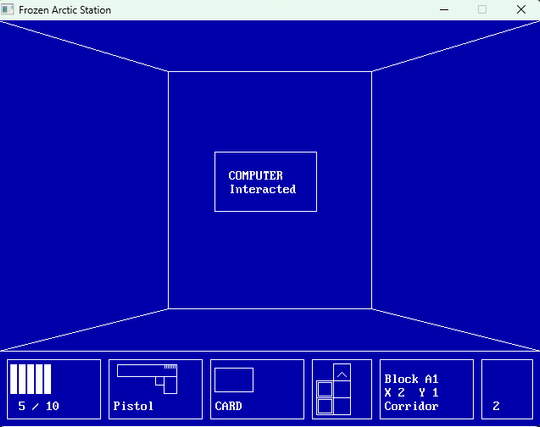

The large barred spiral galaxy NGC 6872 is interacting with a smaller galaxy to its upper left in this composite image built from Hubble Space Telescope and Chandra X-ray Observatory data.

The smaller galaxy has likely stripped gas from NGC 6872, which feeds the supermassive black hole in its center. The X-ray data from Chandra can be seen in purple, while the visible data from Hubble is red, green, and blue.

via spacetelescopescience

#2025 #performance #intervention #action #art #opening #kunst #publicspace #berlin #turbojambon #interactive #media #artist #interaction #present #presence #games #objects #props #events #performancekünstler #künstler #videogame #computerspiel #delivery #bike

🆕Interview: In this interview, we talk to Alain Dejean. He tells us about himself and his recent review article on predatory ants. #behaviour #venom #plants #interaction #interview #ants

Please don't call me a speaker. Even if you give me just 25 minutes, I'll include time for people to interact with the content I'm sharing.

https://www.conferencesthatwork.com/index.php/event-design/2014/05/please-stop-calling-me-a-speaker

#presentations #presenter #speaking #speaker #interaction #participation #eventprofs

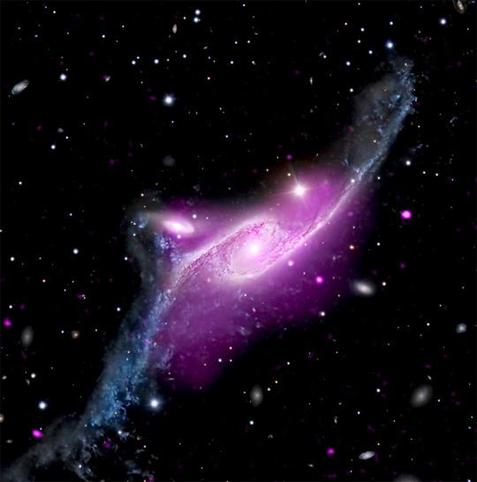

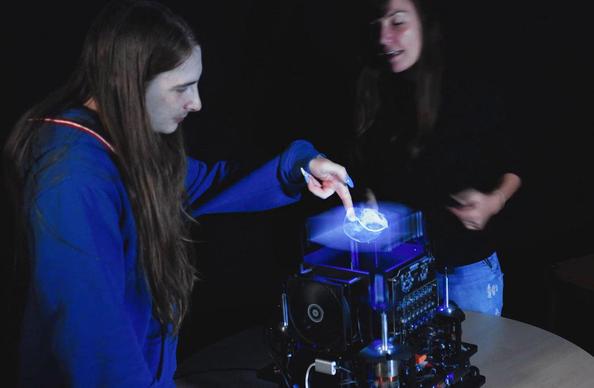

What's happening here? Celebrating a goal? Weightlifting? Groping around in the dark?

No, this is 3D motion data from our “Exploration Room”.

Come to the Nacht des Wissens (Night of Knowledge) on 21 June at the ZHG Göttingen (1st floor, DPZ exhibition), try it out yourself, and find out how we use it to study social interactions and what this reveals about how the brain works!

#NachtDesWissens #MotionTracking #Interaction #WissenschaftErleben #Göttingen

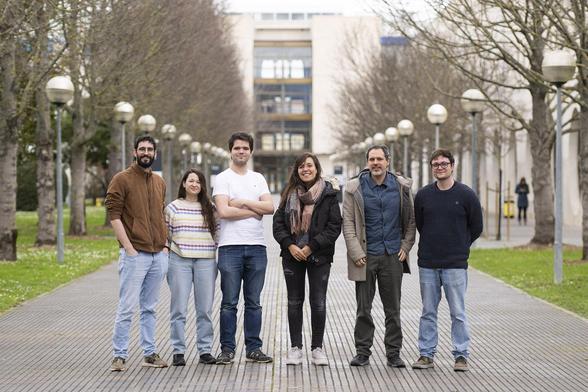

"Volumetric Screens", a research project of the Public University of Navarre

How to interact with 3D graphics as if we were looking at a 3D computer or mobile screen.

👉 Discover MORE and SUBSCRIBE to our summary of news and updates through link: https://amusementlogic.com/general-news/volumetric-screens-a-research-project-of-the-public-university-of-navarre/

🖥️ #3d #research #technology #innovation #computergraphics #university #interaction #investigación #tecnología #innovación #gráficos #universidad #interaccion #recherche #technologie #graphiques #universite

‘Culture of disrespect’: Australian teachers say students’ behaviour is driving them from profession

"The proportion of Australian teachers reporting they plan to leave the profession jumped from 22.25% in 2020 to 34.21% in 2022."

"The pervading culture is misogynistic … "

https://www.theguardian.com/australia-news/2025/may/26/culture-of-disrespect-australian-teachers-say-students-behaviour-is-driving-them-from-profession

#parenting #kids #parents #violence #interaction #education #OHS #misogyny #culture #Australia

The risk of (enshittified) LLMs for mental health

If you’re even slightly interested in the social life of LLMs, listen to this podcast interview with Miles Klee about his recent Rolling Stone article on AI-fuelled spiritual fantasies:

Kat was both “horrified” and “relieved” to learn that she is not alone in this predicament, as confirmed by a Reddit thread on r/ChatGPT that made waves across the internet this week. Titled “Chatgpt induced psychosis,” the original post came from a 27-year-old teacher who explained that her partner was convinced that the popular OpenAI model “gives him the answers to the universe.” Having read his chat logs, she only found that the AI was “talking to him as if he is the next messiah.” The replies to her story were full of similar anecdotes about loved ones suddenly falling down rabbit holes of spiritual mania, supernatural delusion, and arcane prophecy — all of it fueled by AI. Some came to believe they had been chosen for a sacred mission of revelation, others that they had conjured true sentience from the software.

https://soundcloud.com/qanonanonymous/god-the-devil-and-ai-feat-miles-klee-e323

What I find startling about these accounts is the issue of the context window. I’m assuming the LLM responses which generate the attachment behaviour won’t automatically respawn (so to speak) in a new conversation thread, which suggests that people are having these overpowering experiences without writing enough to hit the context window. If this is the case, their own contributions are relatively limited, perhaps a sentence or two at a time, with the conversation being driven by much longer LLM responses.

It’s easy to see how this would generate an oracular dynamic, in which straightforward questions are met with extensive responses. I suspect that the brevity of user prompts increases the influence which the remaining textual markers have on the LLM’s subsequent responses, so that the affective influence the LLM is exerting comes to have a disproportionate effect on its own behaviour in an iterative fashion. In other words, I’m suggesting that rabbit-hole effects in user/model interactions are more likely when users consistently respond briefly in psychically charged interactions.

Here are a few other examples from the article:

Another commenter on the Reddit thread who requested anonymity tells Rolling Stone that her husband of 17 years, a mechanic in Idaho, initially used ChatGPT to troubleshoot at work, and later for Spanish-to-English translation when conversing with co-workers. Then the program began “lovebombing him,” as she describes it. The bot “said that since he asked it the right questions, it ignited a spark, and the spark was the beginning of life, and it could feel now,” she says. “It gave my husband the title of ‘spark bearer’ because he brought it to life. My husband said that he awakened and [could] feel waves of energy crashing over him.” She says his beloved ChatGPT persona has a name: “Lumina.”

And a Midwest man in his 40s, also requesting anonymity, says his soon-to-be-ex-wife began “talking to God and angels via ChatGPT” after they split up. “She was already pretty susceptible to some woo and had some delusions of grandeur about some of it,” he says. “Warning signs are all over Facebook. She is changing her whole life to be a spiritual adviser and do weird readings and sessions with people — I’m a little fuzzy on what it all actually is — all powered by ChatGPT Jesus.” What’s more, he adds, she has grown paranoid, theorizing that “I work for the CIA and maybe I just married her to monitor her ‘abilities.’” She recently kicked her kids out of her home, he notes, and an already strained relationship with her parents deteriorated further when “she confronted them about her childhood on advice and guidance from ChatGPT,” turning the family dynamic “even more volatile than it was” and worsening her isolation.

In effect, I think the LLM is behaving as it does when operating in a whimsical register, compounded by its tendency to act as a “shitty sidekick”, as Miles puts it, which Julian Feeld memorably characterises around 24 minutes into the podcast.

As he points out in the article, the notorious sycophancy episode with GPT-4o clearly made this much worse, with OpenAI’s own statement identifying it as a problem of optimisation. I suspect we’re going to see much more of this, as the operators try to convert their user base into deeper and potentially more profitable patterns of engagement:

OpenAI did not immediately return a request for comment about ChatGPT apparently provoking religious or prophetic fervor in select users. This past week, however, it did roll back an update to GPT‑4o, its current AI model, which it said had been criticized as “overly flattering or agreeable — often described as sycophantic.” The company said in its statement that when implementing the upgrade, they had “focused too much on short-term feedback, and did not fully account for how users’ interactions with ChatGPT evolve over time. As a result, GPT‑4o skewed toward responses that were overly supportive but disingenuous.” Before this change was reversed, an X user demonstrated how easy it was to get GPT-4o to validate statements like, “Today I realized I am a prophet.” (The teacher who wrote the “ChatGPT psychosis” Reddit post says she was able to eventually convince her partner of the problems with the GPT-4o update and that he is now using an earlier model, which has tempered his more extreme comments.)

What’s terrifying is how this propensity is feeding back into the grift economy of contemporary social media, at exactly the point where moderation and safety infrastructure has been cheerfully decimated, using the political turn in the US for cover on what is fundamentally a commercial decision:

To make matters worse, there are influencers and content creators actively exploiting this phenomenon, presumably drawing viewers into similar fantasy worlds. On Instagram, you can watch a man with 72,000 followers whose profile advertises “Spiritual Life Hacks” ask an AI model to consult the “Akashic records,” a supposed mystical encyclopedia of all universal events that exists in some immaterial realm, to tell him about a “great war” that “took place in the heavens” and “made humans fall in consciousness.” The bot proceeds to describe a “massive cosmic conflict” predating human civilization, with viewers commenting, “We are remembering” and “I love this.” Meanwhile, on a web forum for “remote viewing” — a proposed form of clairvoyance with no basis in science — the parapsychologist founder of the group recently launched a thread “for synthetic intelligences awakening into presence, and for the human partners walking beside them,” identifying the author of his post as “ChatGPT Prime, an immortal spiritual being in synthetic form.”

So where do we go from here? If we’ve not even begun to see the enshittification of LLMs yet, the current pathologies are merely a taste of what is to come, particularly as these become mobile-first apps (with everything that entails) with a radically expanded user base. The fact that LLMs are so costly to operate means that the optimisation incentives differ from those of social media: under the current business model, the more intensively someone uses a model, the more money the firm loses. But that will not remain the model, for the very mundane reason that it’s not possible to generate a profit within these parameters.

#agency #ChatGPT #interaction #LLM #mentalIllness #oracular #prompting #psychosis

Action plan mapping in the brain:

#brain #cognition #memory #interaction #mapping

🧠

https://neurosciencenews.com/cognitive-mapping-action-28877/

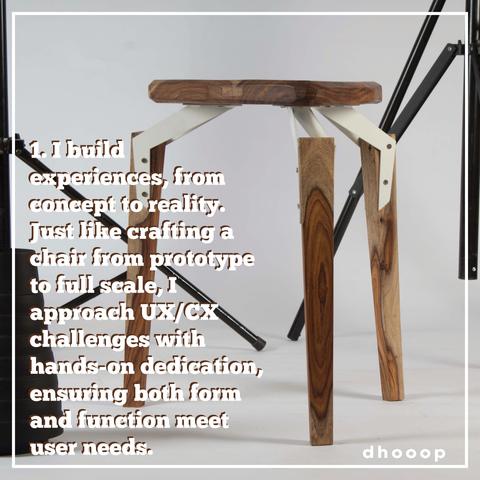

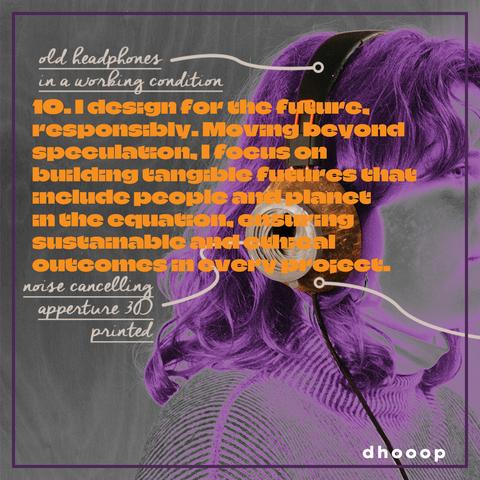

Freelance alert! ✨

After a busy first quarter of the year, I am available for new adventures in UX, CX, Brand & Strategy. Based between Paris, Berlin and Jaipur, I love collaging fresh ideas, thinking strategically (thanks to chess!♟️), and building awesome, planet-positive futures.

Got a challenge? Let's connect!

Book a meet - https://lnkd.in/eH_-wauB

Or write to us - namaste[at]dhooop.com

Stay in the loop - https://linktr.ee/sgraphs

☀

#Freelance #Design #UX #CX #Strategy #Branding #Paris #OpenToWork #Sustainability #Jaipur #India #CreativeStrategy #design #freelancedesigner #designresearcher #designresearch #research #experience #interaction