This post brought to you by the "This #K8s cluster with 100 JVM 'microservices' could have been an app on my #RaspberryPi" association.

#k8s

5,000 trains. 400+ internal projects. 200-page security manifesto.

And just four months to go cloud native with Talos Linux.

https://www.siderolabs.com/case-studies/frances-national-railway-goes-cloud-native-in-four-months/

#kubernetes #CloudNative #K8s #ContainerOrchestration #SNCF #TalosLinux

I generally rather enjoy working with #k8s #helm charts to deploy complex server-side apps.

The lack of silly walks is satisfying.

Helm: "May I have my Configuration Burger so I can deploy your app please?"

Me: Here you go! 600 lines of YAML! OPEN WIDE!

The only problem is that when you're 300 lines in, editing all the values to be correct, it feels like hitting THIS point people used to talk about while building electronics projects:

Istio as a multicluster solution: capabilities, approaches, and trade-offs

Hi, Habr. Let's continue exploring Istio: today we'll look at some interesting features that can later make it easier to maintain and evolve a service mesh infrastructure in Kubernetes. As distributed systems and microservice architectures in Kubernetes grow, the question of building a reliable, scalable, and secure network infrastructure comes up more and more often. When a single cluster is no longer enough, you need to combine several installations into a single mesh. This is where Istio comes in, as a candidate for implementing a multicluster architecture.

In case you want to get your hands dirty with Traefik, Kubernetes and the new GatewayAPI, I got you covered.

Here is another vagrant-libvirt setup that has #k3s, #Traefik and a #Nginx deployment. Instead of using a #Kubernetes #ingress or a Traefik IngressRoute, this setup uses the #GatewayAPI resources like Gateways and HTTPRoutes.

https://codeberg.org/johanneskastl/traefik_gateway-api_on_k3s_vagrant_libvirt_ansible

https://github.com/johanneskastl/traefik_gateway-api_on_k3s_vagrant_libvirt_ansible

As usual, #Ansible does the heavy lifting and deploys everything in the cluster.

Have a lot of fun.

#k8s #Kubernetes #Traefik #Ingress #GatewayAPI #DevOps #vagrant #libvirt #Nginx #HellYeah

How I stopped suffering and learned to love CoreDNS: three stories

Hi everyone! I'm Sasha Krasnov, CTO of the «Штурвал» (Shturval) container platform. In April I was lucky enough to give a talk at DevOpsConf 2025, the conference's anniversary edition. The talk was about hacks that let you automate how you use DNS. This article is based on that talk and on three real stories: managing DNS from git; running your own nip.io; and how and why to write plugins for CoreDNS. Read on, it's interesting :)

https://habr.com/ru/companies/chislitellab/articles/913946/

#coredns #dns #kubernetes #k8s #nipio #git #github #плагины #штурвал #devops
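The "your own nip.io" idea relies on wildcard DNS where the IP address is embedded in the hostname itself. A minimal sketch of just the name-to-address mapping, assuming nip.io-style conventions (an IPv4 address written with dots or dashes right before the base domain); the `example.internal` base domain and the `resolve` function are hypothetical, and this is not the CoreDNS plugin from the talk.

```python
import ipaddress
import re
from typing import Optional

# Hypothetical base domain for a self-hosted nip.io-style zone.
BASE_DOMAIN = "example.internal"

# An IPv4 address written with dots or dashes, optionally preceded by other
# labels, e.g. "app.10.0.0.7.example.internal" or "app-10-0-0-7.example.internal".
_PATTERN = re.compile(
    r"(?:^|[.-])(\d{1,3})[.-](\d{1,3})[.-](\d{1,3})[.-](\d{1,3})\."
    + re.escape(BASE_DOMAIN)
    + r"\.?$"
)


def resolve(name: str) -> Optional[str]:
    """Return the IPv4 address embedded in `name`, or None if there isn't one."""
    match = _PATTERN.search(name.lower())
    if match is None:
        return None
    candidate = ".".join(match.groups())
    try:
        # Rejects impossible octets such as "999".
        return str(ipaddress.IPv4Address(candidate))
    except ipaddress.AddressValueError:
        return None
```

A DNS server plugin would wrap this in an A-record answer; names that don't match would fall through to normal resolution.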

✨ It’s a big day for the #VictoriaMetrics community!

We’re kicking off our first-ever #Features & #Community Call today at 5 pm BST / 6 pm CEST / 9 am PDT.

We'll talk about how to optimise the data collected by default in the k8s-stack Helm chart, including topics such as:

🔭 Cardinality explorer

🎥 Stream aggregation

📈 Unused #metrics

🔍 Explore powerful features

💬 Hear top user Q&As

K8s as the foundation: building an infrastructure platform

Kubernetes is a powerful tool for orchestrating containerized applications. Building an infrastructure platform on top of K8s is a highly non-trivial task, and in this article we'll look at the main questions and problems that come up when designing the platform's architecture.

https://habr.com/ru/companies/otus/articles/911962/

#k8s #architecture #kubernetes #оркестрация_контейнеров #архитектура_платформы

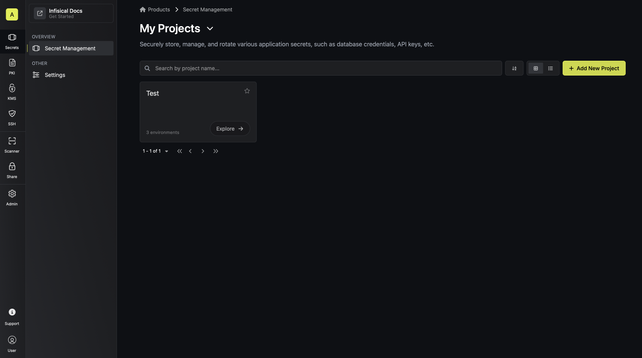

Migrating from Sealed Secrets to Infisical in my homelab.

#DevOps #k8s #learnk8s #cncf #100DaysOfCode #100DaysOfDevOps #kubernetes

Edge infrastructure isn’t a scaled-down copy of the cloud.

Kubernetes may have been designed for connected, stable, and resource-rich environments, but now it's making its mark at the edge. And everything is changing.

https://www.edgeir.com/when-kubernetes-hits-the-edge-everything-changes-20250527

#kubernetes #k8s #edgecomputing

Has anyone run #K8S nodes across the internets? For example, the control plane running in one location (e.g. #homelab) and worker nodes in a DC?

@sheogorath any experience with this?

If you're curious about how to configure HPA on OTel Collectors managed by the #OpenTelemetry Operator, then check out my latest article!

Big thanks to Jacob Aronoff for helping me unpack some of this!
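For context, the Kubernetes docs give the HPA's core scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). A minimal sketch of that arithmetic as it would apply to a Collector Deployment, assuming the default 10% tolerance and plain minReplicas/maxReplicas clamping; it deliberately ignores stabilization windows, scaling policies, and missing-metric handling, which the real controller does apply.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA scaling decision for a single metric."""
    ratio = current_metric / target_metric
    # Close enough to the target: keep the current replica count.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the HPA.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 2 Collectors averaging 90% CPU against a 60% target gives ceil(2 × 1.5) = 3 replicas.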

Putting aside the question of *which* node should be advertising a given service via BGP: *what* would it advertise? Services /can/ have multiple IPs, but that's not usually the case. It's primarily a single ClusterIP that indirects to the backends, right?

Okay, so *somehow* the IP gets advertised, but what range do you put on it?

The entire service CIDR sure is convenient, but then what? All services hit the same node and get converted to in-cluster IP forwarding? Can you even advertise a range with multiple gateways? Probably. But this is also playing roulette with nodes that have no backend for the service. Even if only the nodes with backends for the service advertised the route, it'd be quite a weighty way to do the indirection, and you're now moving that indirection *outside* the cluster, which is cool but seems to violate the idea that services should be internal-only.
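To make the two options concrete, here is a minimal sketch of what a speaker would announce under each strategy: one aggregate service-CIDR prefix from every node, or a /32 per ClusterIP from only the nodes hosting a backend. The service CIDR, node names, IPs, and Services are all made up, and the code doesn't speak BGP; it only computes (prefix, next hop) pairs.

```python
import ipaddress
from typing import Dict, List, Set, Tuple

# Hypothetical cluster data: service CIDR, node IPs, ClusterIPs, and which
# nodes currently run a ready backend for each Service.
SERVICE_CIDR = ipaddress.ip_network("10.96.0.0/12")
NODES = {"node-a": "192.0.2.10", "node-b": "192.0.2.11", "node-c": "192.0.2.12"}
CLUSTER_IPS = {"web": "10.96.0.20", "db": "10.96.4.7"}
BACKENDS: Dict[str, Set[str]] = {"web": {"node-a", "node-c"}, "db": {"node-b"}}

Route = Tuple[str, str]  # (prefix, next hop)


def aggregate_routes() -> List[Route]:
    """Every node announces the whole service CIDR, backend or not."""
    return sorted((str(SERVICE_CIDR), ip) for ip in NODES.values())


def host_routes() -> List[Route]:
    """Each ClusterIP as a /32, announced only by nodes with a local backend."""
    routes: List[Route] = []
    for svc, cluster_ip in CLUSTER_IPS.items():
        addr = ipaddress.ip_address(cluster_ip)
        if addr not in SERVICE_CIDR:
            raise ValueError(f"{svc}: {addr} is outside {SERVICE_CIDR}")
        prefix = ipaddress.ip_network(f"{addr}/32")
        for node in BACKENDS.get(svc, set()):
            routes.append((str(prefix), NODES[node]))
    return sorted(routes)
```

The trade-off from the post shows up directly: the aggregate is one route per node no matter how many Services exist, but any node may receive traffic for a Service it has no backend for; host routes avoid that at the cost of one route per Service per backend node.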

@hugo Halp

So the default `kubernetes` Service has no `selector` in its spec, which matters because, according to the v1 `Service` spec:

> If empty or not present, the service is assumed to have an external process managing its endpoints, which Kubernetes will not modify.

But fetching Endpoints (or rather EndpointSlices) yields none for the default service. That would explain the CNI not doing anything about the Service, but it doesn't explain the lack of service routing for the ones that *do* have EndpointSlices.

Am I missing some Cilium option to make it manage the endpoint?
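The checks involved can be written down as a minimal sketch operating on plain dicts shaped like `kubectl get service -o json` and `kubectl get endpointslices -o json` items. The function names are hypothetical; the `kubernetes.io/service-name` label is the one that ties an EndpointSlice to its Service.

```python
from typing import Any, Dict, List

Obj = Dict[str, Any]


def is_selectorless(service: Obj) -> bool:
    """True if spec.selector is absent or empty.

    Per the v1 Service API, such a Service's endpoints are expected to be
    managed by some external process.
    """
    return not service.get("spec", {}).get("selector")


def slices_for(service: Obj, slices: List[Obj]) -> List[Obj]:
    """EndpointSlices in the Service's namespace labelled with its name."""
    name = service["metadata"]["name"]
    namespace = service["metadata"].get("namespace", "default")
    return [
        s
        for s in slices
        if s["metadata"].get("namespace", "default") == namespace
        and s["metadata"].get("labels", {}).get("kubernetes.io/service-name") == name
    ]


def ready_addresses(slices: List[Obj]) -> List[str]:
    """Addresses of endpoints whose `ready` condition is not explicitly false."""
    addrs: List[str] = []
    for s in slices:
        for ep in s.get("endpoints") or []:
            # An unset `ready` condition should generally be treated as ready.
            if ep.get("conditions", {}).get("ready", True) is not False:
                addrs.extend(ep.get("addresses", []))
    return addrs
```

Feeding it the default Service and a cluster's EndpointSlices shows at a glance whether the problem is "no slices at all" or "slices exist but nothing is ready".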

What is the practical benefit of splitting PersistentVolume and PersistentVolumeClaim resource definitions in Kubernetes when they have a 1:1 mapping? Is it helpful, or just extra boilerplate you're forced to write?
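One way to see the split: a PVC is a request (size, access modes, class) written alongside the app, and a PV is supply created by an admin or a provisioner; a controller matches one to the other. A heavily simplified sketch of that matching, assuming only capacity, access modes, and storageClassName matter and that the smallest fitting volume wins; the real binder also considers label selectors, volume mode, node affinity, and pre-bound claims, and these classes are hypothetical stand-ins for the API objects.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass
class PersistentVolume:
    name: str
    capacity_gi: int
    access_modes: FrozenSet[str]
    storage_class: str
    bound_to: Optional[str] = None  # claim name, once bound


@dataclass
class PersistentVolumeClaim:
    name: str
    request_gi: int
    access_modes: FrozenSet[str]
    storage_class: str


def bind(claim: PersistentVolumeClaim, volumes: List[PersistentVolume]) -> Optional[PersistentVolume]:
    """Bind the claim to the smallest unbound PV that satisfies it, if any."""
    candidates = [
        pv
        for pv in volumes
        if pv.bound_to is None
        and pv.storage_class == claim.storage_class
        and claim.access_modes <= pv.access_modes
        and pv.capacity_gi >= claim.request_gi
    ]
    if not candidates:
        return None  # a real cluster might dynamically provision a PV instead
    best = min(candidates, key=lambda pv: pv.capacity_gi)
    best.bound_to = claim.name
    return best
```

The claim never names a specific volume, which is the point: the same PVC manifest works whether the cluster has pre-created PVs or a dynamic provisioner.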

Felt like I was thrashing semi-blindly with my CNI config, so I read the Kubernetes/Cilium for network engineers book. A couple of new tidbits, but it looks like I'm stuck waiting until 2026 for the proper O'Reilly one.

In the meantime: labs, I guess.

What I'm really trying to do: build an nginx config bundle (conf + certificates) as a Secret with an in-cluster metaoperator and then sync that to a set of external proxies.

What is the most lightweight way to monitor a #Kubernetes object for changes outside of a cluster? Specifically, I want to know when an object changes and fire a hook script with the updated object.

Is this just shell-operator?
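Short of shell-operator, a crude option is to poll the object from outside the cluster and compare `metadata.resourceVersion`, which changes whenever the object is written. A minimal polling sketch, assuming `kubectl` with a working kubeconfig on the external host; the function names and any hook path are hypothetical, and a sturdier version would use the API's watch mechanism (e.g. `kubectl get --watch` or a client library's watch) instead of polling.

```python
import json
import subprocess
import time
from typing import Any, Callable, Dict, Optional

Obj = Dict[str, Any]


def kubectl_fetch(kind: str, name: str, namespace: str) -> Callable[[], Obj]:
    """Return a function that fetches one object as JSON via kubectl."""
    def fetch() -> Obj:
        out = subprocess.run(
            ["kubectl", "get", kind, name, "-n", namespace, "-o", "json"],
            capture_output=True, text=True, check=True,
        ).stdout
        return json.loads(out)
    return fetch


def run_hook(script: str) -> Callable[[Obj], None]:
    """Return a callback that runs `script` with the object as JSON on stdin."""
    def hook(obj: Obj) -> None:
        subprocess.run([script], input=json.dumps(obj), text=True, check=True)
    return hook


def poll_for_changes(
    fetch: Callable[[], Obj],
    on_change: Callable[[Obj], None],
    interval_s: float = 10.0,
    max_polls: Optional[int] = None,
) -> None:
    """Call on_change on the first fetch and whenever resourceVersion changes."""
    last_version: Optional[str] = None
    polls = 0
    while max_polls is None or polls < max_polls:
        obj = fetch()
        version = obj["metadata"]["resourceVersion"]
        if version != last_version:
            on_change(obj)
            last_version = version
        polls += 1
        if max_polls is None or polls < max_polls:
            time.sleep(interval_s)
```

Usage would look like `poll_for_changes(kubectl_fetch("secret", "nginx-bundle", "proxy"), run_hook("./sync-proxies.sh"))`, where the Secret name, namespace, and script are placeholders.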

Jam-packed talk full of crazy stuff with IPv6 SRV.

I think I understood maybe half of it.