Anyone who looks into #Chatbots quickly realizes how massively they disregard other people's intellectual property (#CopyrightInfringement). This also happens through extensive verbatim text output that exceeds the legally permitted scope of quotation. What many people may not realize: if they take over extensive passages from a chatbot and publish them, they too can be penalized for copyright infringement.

#ChatBots

Tech Xplore: Dark LLMs: It’s still easy to trick most AI chatbots into providing harmful information, study finds. “A group of AI researchers at Ben Gurion University of the Negev, in Israel, has found that despite efforts by large language model (LLM) makers, most commonly available chatbots are still easily tricked into generating harmful and sometimes illegal information.”

Millions of #people are constantly online, yet often alone. Now #Meta CEO Mark #Zuckerberg wants to fill our social void with #Chatbots, turning friendship into a business model. #AI #Facebook #Whatsapp https://www.aargauerzeitung.ch/kultur/social-media-digitale-einsamkeit-facebook-hat-die-freundschaft-zerstoert-und-ersetzt-sie-jetzt-mit-ki-ld.2777117

This is alarming and should act as a wake-up call. Using AI, it costs virtually nothing to create personalised misinformation campaigns aimed at local politicians. It would be surprising if this were confined to Canada.

#Chatbots such as #ChatGPT, #Gemini, and #Copilot occasionally draw on Russian propaganda when they answer in Nordic languages. Detailed article in #Norwegian (#bokmål) at #faktisk.no via #filternyheter. https://www.faktisk.no/artikkel/russiske-propagandasider-infiltrerer-nordiske-ki-chatboter/125605?utm_source=Filter+Nyheter&utm_campaign=28a8af39f1-EMAIL_CAMPAIGN_2025_05_28_12_46&utm_medium=email&utm_term=0_-28a8af39f1-282565289&mc_cid=28a8af39f1

I’m going to write about this… Any thoughts? OpenAI's ChatGPT may be driving countless of its users into a dangerous state of "ChatGPT-induced psychosis."

“Friends and family are watching in alarm as users insist they've been chosen to fulfill sacred missions on behalf of sentient AI or nonexistent cosmic powers — chatbot behavior that's just mirroring and worsening existing mental health issues, but at incredible scale and without the scrutiny of regulators or experts.” #CyberPsychosis #AI #ChatBots #psychology #Behavior

Source: Futurism https://share.google/5gbEHBdr0SQiso6bv

🤔🙄 Oh wow, because the world was just DYING for another overhyped AI toy to invade our chats! What’s next, $300M to teach asteroids how to text? 🚀🪨

https://techcrunch.com/2025/05/28/xai-to-invest-300m-in-telegram-integrate-grok-into-app/ #overhypedAI #AItoys #techhumor #chatbots #innovation #HackerNews #ngated

Want an advanced AI assistant? Prepare for them to be all up in your business

#Tech #AI #AIAssistants #ArtificialIntelligence #FutureTech #AIEthics #DigitalIdentity #TechNews #AIRisks #GenAI #CharacterAI #AIAndSociety #AIFuture #Chatbots #ChatGPT

https://the-14.com/want-an-advanced-ai-assistant-prepare-for-them-to-be-all-up-in-your-business/

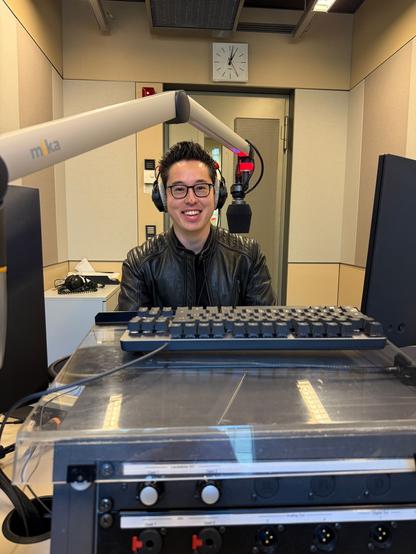

How do people use #AI in #EverydayLife?

My live studio conversation with #WDR listeners is now also available as a podcast episode, and the topics we covered in one hour were more than varied: ecological #sustainability and AI data centers, digital dependence and digital #sovereignty, #Chatbots in the workplace, and #DataProtection on social media.

Listen here if you couldn't tune in live:

https://www1.wdr.de/radio/wdr5/sendungen/tagesgespraech/250526-100.html

Anthropic launches a voice mode for Claude #Anthrope #chatbots #Claude #launches #mode #for #voice #ButterWord #Spanish_News Share your opinion 👇

https://butterword.com/anthrope-aguijada-un-modo-de-voz-para-claude/?feed_id=23888&_unique_id=68365e757d243

🎩🐑 So, apparently, if you slap a fancy "Megakernel" on Llama-1B, your chatbot will answer before you even ask. 🙄 Their groundbreaking discovery? Faster GPUs make things faster. Who knew? 🤦♂️🚀

https://hazyresearch.stanford.edu/blog/2025-05-27-no-bubbles #Megakernel #Llama1B #FasterGPUs #Chatbots #Innovation #HackerNews #ngated

🧠 What happens when you train an LLM on your own life?

💬 5 years of private Telegram chats

🧪 LoRA fine-tuning on Mistral-7B

🎮 PS5 jokes, dark humor, sarcastic punchlines

💸 All for just $70

⚡ This isn’t just AI — it’s me, replicated in code.

👇 Read the full experiment:

🔗 https://medium.com/@rogt.x1997/70-5-years-of-telegram-chats-and-14-fine-tuning-jumps-how-i-built-a-personality-driven-llm-4cdc4c5e7f32

#LLM #Mistral7B #LoRA #AIPersonality #GenerativeAI #Chatbots #OpenSourceAI #AIClone
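For readers wondering why a LoRA fine-tune can be this cheap, here is a minimal sketch of the parameter arithmetic. LoRA freezes a weight matrix of shape d_out x d_in and trains two small factors, B (d_out x r) and A (r x d_in), instead. The 4096 x 4096 shape and rank 8 below are assumptions chosen for illustration; the post does not state which layers or rank were actually used.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable weights for the LoRA factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Assumed, illustrative values (not taken from the post).
D_OUT, D_IN, RANK = 4096, 4096, 8

full = full_params(D_OUT, D_IN)
lora = lora_trainable_params(D_OUT, D_IN, RANK)
print(f"full: {full:,}  lora: {lora:,}  reduction: {full // lora}x")
```

Under these assumptions, the adapter trains 65,536 weights per matrix instead of 16,777,216, a 256-fold reduction.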

"The curse of prompt injection continues to be that we’ve known about the issue for more than two and a half years and we still don’t have convincing mitigations for handling it.

I’m still excited about tool usage—it’s the next big feature I plan to add to my own LLM project—but I have no idea how to make it universally safe.

If you’re using or building on top of MCP, please think very carefully about these issues:

Clients: consider that malicious instructions may try to trigger unwanted tool calls. Make sure users have the interfaces they need to understand what’s going on—don’t hide horizontal scrollbars for example!

Servers: ask yourself how much damage a malicious instruction could do. Be very careful with things like calls to os.system(). As with clients, make sure your users have a fighting chance of preventing unwanted actions that could cause real harm to them.

Users: be thoughtful about what you install, and watch out for dangerous combinations of tools."

https://simonwillison.net/2025/Apr/9/mcp-prompt-injection/

#AI #GenerativeAI #LLMs #Chatbots #CyberSecurity #MCP #PromptInjection
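To make the warning about os.system() concrete, here is a minimal sketch of one common hardening step for a tool handler: pass arguments as a list to `subprocess.run` so that no shell ever interprets them, and check the program against an allowlist. The names `ALLOWED_PROGRAMS`, `is_allowed`, and `run_tool_command` are hypothetical and not part of MCP or of the quoted article. This reduces shell-injection risk only; it does not solve prompt injection.

```python
import subprocess
import sys
from typing import Sequence

# Hypothetical allowlist: the only programs this handler may launch.
ALLOWED_PROGRAMS = {sys.executable}


def is_allowed(argv: Sequence[str]) -> bool:
    """Return True only if the program (argv[0]) is on the allowlist."""
    return bool(argv) and argv[0] in ALLOWED_PROGRAMS


def run_tool_command(argv: Sequence[str]) -> str:
    """Run an allowlisted program without involving a shell."""
    if not is_allowed(argv):
        raise PermissionError(f"program not allowed: {list(argv[:1])}")
    # A list argument with the default shell=False means characters such as
    # ';' or '&&' inside arguments are passed through as plain text.
    result = subprocess.run(
        list(argv), capture_output=True, text=True, timeout=10, check=True
    )
    return result.stdout


if __name__ == "__main__":
    # The hostile-looking argument is echoed back, not executed.
    echo = "import sys; print(sys.argv[1])"
    print(run_tool_command([sys.executable, "-c", echo, "hello; rm -rf /"]))
```

With `os.system()`, the same string would be handed to a shell, which would treat everything after the `;` as a second command.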

“Yet, despite substantial investments, economic impacts remain minimal. Using difference-in-differences and employer policies as quasi-experimental variation, we estimate precise zeros: AI chatbots have had no significant impact on earnings or recorded hours in any occupation, with confidence intervals ruling out effects larger than 1%.”

#AI #chatbots #technology #tech #software #llm #research #science #programming #opensource
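For anyone unfamiliar with difference-in-differences, here is a minimal sketch of the basic two-group, two-period estimator: the change in the treated group minus the change in the control group. The numbers are invented purely for illustration and are not from the study, which uses a far richer design.

```python
from statistics import mean
from typing import Sequence


def did_estimate(
    treated_pre: Sequence[float],
    treated_post: Sequence[float],
    control_pre: Sequence[float],
    control_post: Sequence[float],
) -> float:
    """Two-by-two difference-in-differences on group means."""
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Invented earnings figures: both groups rise by 5, so the estimated effect is 0.
no_effect = did_estimate(
    treated_pre=[100, 102, 98],
    treated_post=[105, 107, 103],
    control_pre=[90, 92, 88],
    control_post=[95, 97, 93],
)
print(no_effect)
```

The estimate is only meaningful if both groups would have followed parallel trends without the treatment, which is the key assumption of the method.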

VT Digger: Final Reading: Meet ChatVT, the state of Vermont’s homemade AI chatbot. “These days, everything seems to be powered, augmented or enhanced by artificial intelligence: phones, software, movies, etc. Now, add the state of Vermont to that list. For the past few months, all Vermont state employees have had access to a bespoke AI chatbot named — of course — ChatVT.”

🍮 Knowledge for dessert: 🍨

#CommunityContent: More and more people feel compelled to work with generative #AI in their professional environment.

Large corporations in particular are "steamrolling" their #employees with it. A kind of #CompetitivePressure builds up among #colleagues.

Anyone who wants a quick start with generative #Chatbots such as #ChatGPT can download my e-book as a #Steady supporter.

#OfficeLife #BookTip #Tutoring #JobApplication #SocialMedia #Correspondence

Chatbots as a learning aid: potential and challenges in continuing education

How chatbots are changing continuing education and the teaching of future skills: an overview of the benefits, areas of application, and limits of AI-based learning aids.

#Chatbots #DigitalLearning #AI #LearningAid #DigitalTutors #Microlearning

🤖📉BREAKING: #Chatbots are as reliable as that friend who swears they almost landed a gig on Mars. 🚀🔥 Study reveals 73% of large language models' conclusions are as accurate as a weather forecast from a fortune cookie. 🍪🔮 Maybe the secret to AI's success is just a splash of human hyperbole? 🌈

https://www.uu.nl/en/news/most-leading-chatbots-routinely-exaggerate-science-findings #Hyperbole #AI #Reliability #LanguageModels #HackerNews #ngated

"Let’s not forget that the industry building AI Assistants has already made billions of dollars honing the targeted advertising business model. They built their empires by drawing our attention, collecting our data, inferring our interests, and selling access to us.

AI Assistants supercharge this problem. First because they access and process incredibly intimate information, and second because the computing power they require to handle certain tasks is likely too immense for a personal device. This means that very personal data, including data about other people that exists on your phone, might leave your device to be processed on their servers. This opens the door to reuse and misuse. If you want your Assistant to work seamlessly for you across all your devices, then it’s also likely companies will solve that issue by offering cloud-enabled synchronisation, or more likely, cloud processing.

Once data has left your device, it’s incredibly hard to get companies to be clear about where it ends up and what it will be used for. The companies may use your data to train their systems, and could allow their staff and ‘trusted service providers’ to access your data for reasons like to improve model performance. It’s unlikely that you had all of this in mind when you asked your Assistant a simple question.

This is why it’s so important that we demand that our data be processed on our devices as much as possible, and used only for limited and specific purposes we are aware of, and have consented to. Companies must provide clear and continuous information about where queries are processed (locally or in the cloud) and what data has been shared for that to happen, and what will happen to that data next."

#AI #GenerativeAI #LLMs #Chatbots #AIAssistants #Privacy #AdTech #DataProtection #AdTargeting