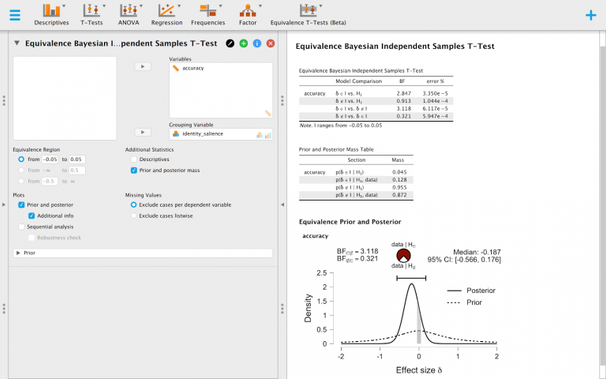

I am realizing something about #LLM use and teaching: if I want to make sure I'm assessing student learning and not student saying-stuff-to-ChatGPT, I can't trust students as much or give them the benefit of the doubt. Tonight a student produced some graphs for her results on a stats project that included an extra variable that wasn't part of her original hypotheses. It was in her dataset, so it wasn't bizarre, and it made some sense, but there were a few things that in the past I would have put down to students being students: no error bars, odd wording of axis labels, and the like. Historically, these (for me) have been within the bounds of "students kind of missing the boat a bit."

Now I think it could be that, or it could be that ChatGPT or Grok or some other LLM cranked these graphs out, or possibly spit out the instructions for making them in #JASP.

I can't trust the student anymore. I can't give her the benefit of the doubt. There is an ever-present alternative explanation for all faults in student work, and it's a very strong explanation.

#teaching #professor #statistics