I thought I wouldn't get to give a talk at Caipyra this year. But in the end they accepted one of my proposals! If you work with data scraping and Scrapy, how about learning some slightly more advanced topics this June, over in Ribeirão Preto?

https://2025.caipyra.python.org.br/

#Caipyra2025 #Python #Scrapy

#scrapy

🐍 How can you scrape data from webpages using #Python? In this talk, you'll see how this is possible with #scrapy. https://www.youtube.com/watch?v=tdA1cl6LiCw

Setting up a spider to collect data: how the Scrapy framework works

At Tochka (Точка) we train our AI assistants, and that takes a lot of data. In this article I explain how to quickly collect information from practically any website using the Scrapy framework.

Create your personal web crawler with #scrapy and #raspberrypi. Complete tutorial to scrape your favourite websites with a simple #python script #linux #diy https://peppe8o.com/use-raspberry-pi-as-your-personal-web-crawler-with-python-and-scrapy/

This week I wrote about how to use `CrawlSpider` to follow links declaratively during a web scraping project with Scrapy.

However, in a past project, I had the need to extend this functionality a bit, defining dynamic rules (based on user input).

So as a continuation of my previous post, I wrote a new one explaining a little about how this solution was made.

https://rennerocha.com/posts/dynamic-rules-for-following-links-declaratively-with-scrapy/

Scrapy has a generic Spider class (`CrawlSpider`) that helps extract and follow links in a more declarative way (using a set of "rules"). In projects I have worked on, I noticed that it is not used that much, but I find it very helpful and it makes the code very readable.

I wrote something about it:

https://rennerocha.com/posts/following-links-declaratively-with-scrapy/

How to parse data with Python

Parsing is the automatic search for various patterns (based on predefined constructs) in text data sources in order to extract specific information. Although parsing is a broad concept, the term most often refers to collecting and analyzing data from remote web resources.

https://habr.com/ru/companies/timeweb/articles/877596/

#timeweb_статьи #html #python #парсинг #ubuntu #xml #вебсайт #JSON #javascript #scrapy

Would this be useful?

What would you use it for?

Examples:

- Page testing using different proxies

- Page testing using different devices (still can't figure out why it's not working properly though)

- Ads tracking (yours and/or competitors)

- Tracking featured content

- other?

2/2

@rolanddreger If the wget way is not enough (if e.g. you need to modify the contents): Some years ago I created something for the same use case with #python and #scrapy (https://scrapy.org/) – let me know if you need some input there.

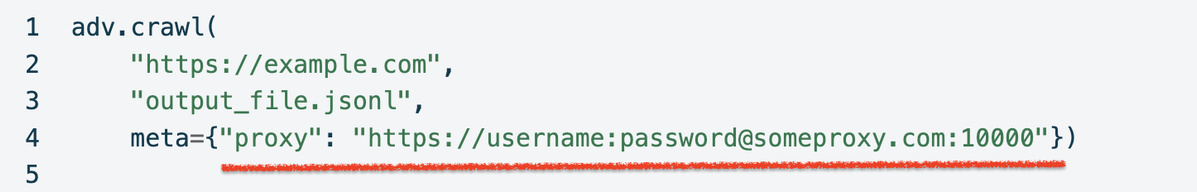

Using a proxy while crawling

This is another feature of using the meta parameter while crawling with #advertools.

It's as simple as providing a proxy URL.

There is also a link to using rotating proxies, if you're interested.

Happy to share a new release of #advertools v0.16

This release adds a new parameter "meta" to the crawl function.

Options to use it:

🔵 Set arbitrary metadata about the crawl

🔵 Set custom request headers per URL

🔵 Limited support for crawling some JavaScript websites

Details and example code:

#SEO #crawling #scraping #python #DataScience #advertools #scrapy

You might be familiar with what I'm terming the "Token Wars" - in which #LLM and #GenAI companies seek to ingest text, image, audio and video content to create their #ML models. Tokens are the basic unit of data input into these models - meaning that #scraping of web content is widespread.

In retaliation, many sites - such as Reddit, Inc. and Stack Overflow - are entering into content sharing deals with companies like OpenAI, or making their sites subscription only.

Another solution that has emerged recently is content blocking based on user agent. In web programming, the client requesting a web page identifies themself - usually as a browser or a bot.

User agents can be blocked by a website's robots.txt file - but only if the user agent respects the robots.txt protocol. Many web scrapers do not. Taking this a step further, network providers like Cloudflare are now offering solutions which block known token scraper bots at a network level.
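To make the robots.txt part concrete, here is a small sketch of the check a well-behaved crawler performs before fetching a page, using Python's standard `urllib.robotparser`. The robots.txt content and the `is_allowed` helper are invented for illustration; nothing forces a scraper to run this check, which is exactly the weakness described above.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt that blocks one AI crawler and allows everyone else.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent, url, robots_txt=SAMPLE_ROBOTS_TXT):
    """Return True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed("GPTBot", "https://example.com/blog/"))       # False
print(is_allowed("Mozilla/5.0", "https://example.com/blog/"))  # True
```

In Scrapy, the equivalent opt-in is the `ROBOTSTXT_OBEY` setting.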

I've been playing with one of these solutions called #DarkVisitors for a couple of weeks after learning about it on The Sizzle and was **amazed** at how much of the traffic to my websites was bots, crawlers and content scrapers.

(No backhanders here, it's just a very insightful tool)

#TokenWars #tokenization #scraping #bots #scrapy #WebScraping

I'm deconstructing a pair of jeans that I've been using part of cus they were old. I'm planning on keeping the legs intact as I'm going to create another jean wall art with them.

This is one of three that I have done. How does it look?

#sew #sewing #upcycle #upcycling #upcycled #craft #handmade #crafty #crafts #scrapy #clothing #clothes #jeans #art

As a volunteer #FLOSS community manager, it bugs me that 10 years in, it's still impossible to filter #GitLab issues by created (& closed) date ranges, to get a rough idea of how many tickets were created in a given time period, and subtract them from the total of tickets that were closed based on various target milestones.

https://gitlab.com/gitlab-org/gitlab/-/issues/17481

https://gitlab.com/gitlab-org/gitlab/-/issues/17758

https://gitlab.com/gitlab-org/gitlab/-/issues/121728

Y'know, *basic* statistics. For talk slides.

Anyone got a #scraping script? Maybe with #scrapy?
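Not a full scraper, but the counting step could be sketched like this once the issues have been fetched as JSON by whatever means. It assumes each issue is a dict with ISO 8601 `created_at` and `closed_at` timestamps (I believe GitLab's issue objects look like that, but treat the field names as an assumption); the helper names and the sample data are made up.

```python
from datetime import datetime, timezone


def parse_timestamp(value):
    """Parse an ISO 8601 timestamp such as "2024-01-15T10:00:00.000Z"."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def count_in_range(issues, field, start, end):
    """Count issues whose `field` timestamp falls within [start, end)."""
    count = 0
    for issue in issues:
        value = issue.get(field)
        if value is None:  # for example, closed_at is empty for open issues
            continue
        if start <= parse_timestamp(value) < end:
            count += 1
    return count


# Made-up sample data shaped like the assumed issue objects.
SAMPLE_ISSUES = [
    {"iid": 1, "created_at": "2024-01-10T09:00:00.000Z", "closed_at": "2024-02-01T12:00:00.000Z"},
    {"iid": 2, "created_at": "2024-02-05T09:00:00.000Z", "closed_at": None},
    {"iid": 3, "created_at": "2023-12-20T09:00:00.000Z", "closed_at": "2024-01-15T12:00:00.000Z"},
]

start = datetime(2024, 1, 1, tzinfo=timezone.utc)
end = datetime(2024, 2, 1, tzinfo=timezone.utc)
created = count_in_range(SAMPLE_ISSUES, "created_at", start, end)
closed = count_in_range(SAMPLE_ISSUES, "closed_at", start, end)
print(created, closed)  # 1 1
```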

Made some more peace symbols using jean scraps. How do they look? Just need to sew them onto something.

I did wonder if I should actually sew safety pins on the backs and have them as removable pin badges instead of patches. However I may need to make smaller ones to do that.

I thought about making them more uniform and circular but I like the contrast of the lighter circle on bold darker material.

Good?

#sew #sewing #upcycle #upcycling #upcycled #craft #handmade #crafty #crafts #scrapy

If you're into #scraping and #Python, you should probably know that there's a new cool kid in town. Just a few days ago Apify released a new OSS framework called #Crawlee.

It's built on asyncio, has type hints, and can seamlessly switch between basic and browser (Playwright) requests. Auto retries, proxy rotation. I'm very much a #Scrapy person, but these promises have me really intrigued! https://github.com/apify/crawlee-python

Tell them what you think - talk to Saurav at their #EuroPython booth or file GitHub issues 🛠️

"Gathering data from the web using Python" workshop by Renne Rocha at #PyConUS

Free 3-hour tutorial!

Video: https://www.youtube.com/watch?v=sD30nvc1ff0

GitHub repo: https://github.com/rennerocha/pyconus2024-tutorial

Rocha maintains the Querido Diário open-source platform https://queridodiario.ok.org.br/ that scrapes official documents of various cities in Brazil to make them easier to access.

[PyConUS 2024] Tutorials - Gathering data from the web using Python

https://peertube.lhc.net.br/videos/watch/87b51855-d757-4623-967a-2f606a5be20d