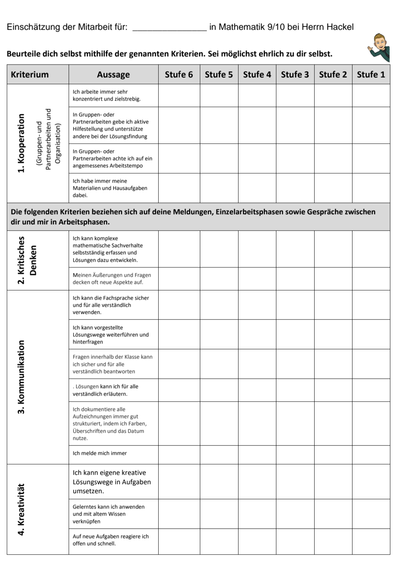

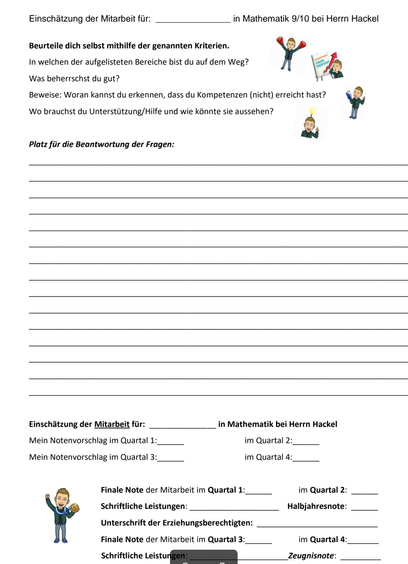

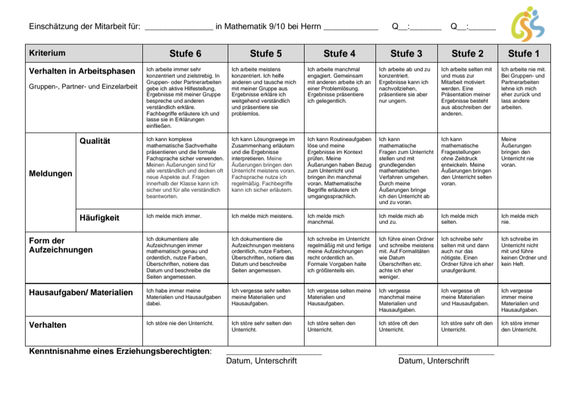

How do you learn and find things out? Usually by getting feedback.

Read more in my article 👇

https://akikoo.medium.com/daa904c0c6b0

#feedback #feedforward #feedbackstaircase #systemsthinking #leadership #teams #distributedteams #psychologicalsafety #culture #theculturemap #agile #scrum #people #books #learning #motivation #careergrowth