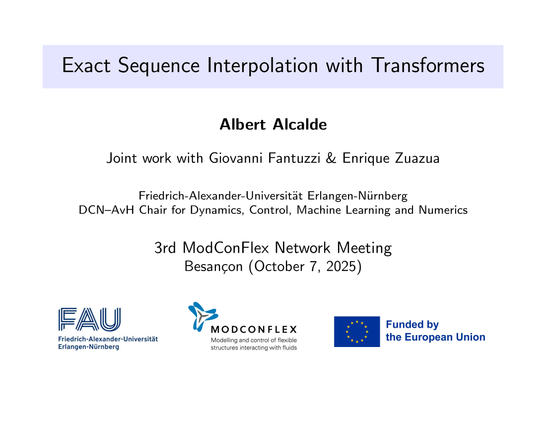

A new interactive tool that explains the Transformer architecture, with a built-in AI assistant to answer your questions. Very useful for anyone who wants to learn about AI and machine learning!

#Transformer #AI #MachineLearning #InteractiveTool #DeepLearning

#TransformerArchitecture #ArtificialIntelligence

https://www.reddit.com/r/SideProject/comments/1pb8hl8/interactive_transformer/