Interesting concept. If you are a #ChatGPT Plus subscriber (like me), you want consistent-quality output from the product you subscribe to.

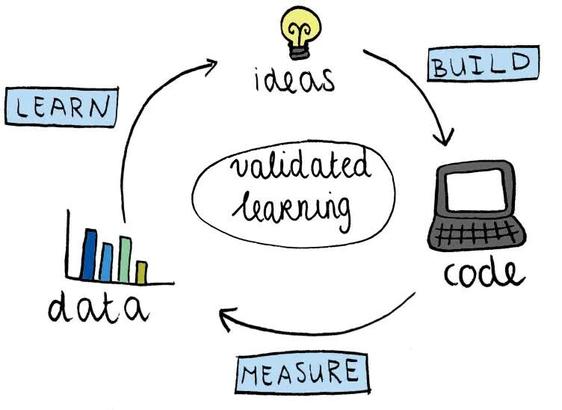

I am inclined to select tests in the areas where I depend on #GPT4 for assistance, then measure the output against expected results, consistently. In other words, use software quality processes to gauge performance over time.
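
As a rough illustration of that idea, here is a minimal Python sketch of a fixed test suite that is re-run on a schedule and scored against expected results. Everything in it is an assumption for illustration: `PromptCase`, `run_suite`, the keyword-match pass criterion, and `fake_model`, which stands in for whatever call you use to get a #GPT4 response. It is not a real API client.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass
class PromptCase:
    """One regression case (hypothetical structure)."""
    name: str
    prompt: str
    expected_keywords: list[str]  # assumed pass criterion: all must appear


def run_suite(ask_model: Callable[[str], str], cases: list[PromptCase]) -> dict:
    """Run every case through ask_model and return a dated pass/fail report."""
    results = {}
    for case in cases:
        answer = ask_model(case.prompt).lower()
        # A case passes only if every expected keyword appears in the answer.
        results[case.name] = all(k.lower() in answer for k in case.expected_keywords)
    passed = sum(results.values())
    pass_rate = passed / len(cases) if cases else 0.0
    return {"date": date.today().isoformat(), "pass_rate": pass_rate, "results": results}


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption: replace with your own client)."""
    return "The capital of France is Paris."


cases = [
    PromptCase("capital", "What is the capital of France?", ["Paris"]),
    PromptCase("sorting", "Write Python that sorts a list.", ["sorted("]),
]

report = run_suite(fake_model, cases)
print(report["pass_rate"], report["results"])
```

Storing each dated report and comparing pass rates across runs would show whether quality drifts over time. Keyword matching is a crude check; stricter comparisons against expected results may suit some areas better.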

What test cases would you select and run?

#artificialintelligence #machinelearning #largelanguagemodels