Hope you're all #conda champions too 🐍🫠 #python https://youtube.com/shorts/eCoTu6eigEg?si=WEV-dKWjx2RYVwpa

#conda

Practical Power: Reproducibility, Automation, and Layering with Conda

Part 3 of the 3-part series is live! 🚀 Beyond theory into engineering practice: provenance, lockfiles, rolling distribution, and real-world workflows.

#conda #packaging #python #reproducibility

https://conda.org/blog/conda-practical-power

For #Rstats users who manage environments with #conda, Anaconda is deprecating their R channel:

https://www.anaconda.com/blog/changes-to-anacondas-r-channel-support

I'm disheartened to see this because of how valuable conda is and can be for R environments, especially in academia.

conda-forge (https://conda-forge.org/) and bioconda (https://bioconda.github.io/) are great channels that can support your use of R in conda environments. But maybe it's time to look elsewhere for environment managers that support R and R packages.

⚠️ CVE-2025-64343 (HIGH): conda constructor <3.13.0 lets local users alter installations via permissive directory permissions. Patch to 3.13.0+ & lock down install paths! Details: https://radar.offseq.com/threat/cve-2025-64343-cwe-289-authentication-bypass-by-al-98c81eba #OffSeq #infosec #vuln #conda
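
A rough sketch of what "lock down install paths" could look like in practice, assuming a POSIX shell with `find` and `chmod`. The function names `is_loosely_writable` and `lock_down` are made up for illustration, and removing group/other write access is one plausible hardening step rather than anything taken from the advisory itself:

```shell
# Hypothetical helpers, not part of conda or constructor.

# Succeeds (exit 0) if the directory itself is writable by group or others.
# Returns 2 if the argument is not a directory.
is_loosely_writable() {
  local dir="$1"
  [ -d "$dir" ] || return 2
  # -prune stops find from descending, so only the directory itself is checked.
  [ -n "$(find "$dir" -prune \( -perm -020 -o -perm -002 \) -print)" ]
}

# Assumption: dropping group/other write bits on the whole tree is acceptable
# for your setup (shared installs that rely on group write access would break).
lock_down() {
  chmod -R go-w "$1"
}
```

Something like `is_loosely_writable "$HOME/miniconda3" && lock_down "$HOME/miniconda3"` would then tighten an install only when it needs it; the path here is just a placeholder. Upgrading constructor to 3.13.0+ is still the actual fix.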

PyClean v3.4.0 released! Now with Pyright debris removal, cleanup of empty folders only, and git-clean integration to remove untracked files. Try it with #conda or #uv now! `uvx pyclean`. https://pypi.org/project/pyclean/ #python #bytecode #debris #cleanup #development #python3 #cpython #pypy #Linux #macOS #Windows #Pyright #Git

Conda in the Packaging Spectrum: From pip to Docker to Nix

If conda is a distribution, where does it fit alongside pip, Docker, and Nix? Part 2 of the 3-part series! 🚀

We explore conda's unique "middle path": why it's lighter than containers, more powerful than language-specific package managers, and uniquely portable through its clever approach to system dependencies.

What on earth is the Conda package testing suite? Cycle on cycle, an enormous dependency graph.

Fix and update in @guix

Conda ≠ PyPI

Conda isn’t just another Python package manager; it’s a multi-language, user-space distribution system.

In this 3-part series, we explore the fundamental differences between conda and PyPI, and why understanding them matters for your workflow.

Part 1 is live now 👇

https://conda.org/blog/conda-is-not-pypi

#conda #packaging #python

Trying to run x86_64 on Mac... Pls help #mac #macbook #arm #multipass #conda

Every time I need to install, configure, or remove something Python-related with #conda, I think of Vadim Shefner.

The old man received the news that Yuri wanted to write about him without due joy.

"And what's your name, anyway?" he asked sullenly.

"Yuri Lesovalov... But really I'm Anaconda."

"What?" the old man asked gloomily. "Why is she a conda?"

"An anaconda is a kind of snake. It lives in the Amazon River basin, and some specimens reach fifteen meters in length."

"Why would you call yourself a snake?" the watchman asked tactlessly.

"It's my pen name; it sounds manly and romantic," Yuri explained patiently, opening his notebook.

Well dammit. The X.h header is missing from the #conda #condaforge packages.

If you include #xorg Xlib it’ll bork when it can’t find X.h

Naturally #wayland isn’t an option because many required libraries don’t have packages to provide it.

#linux is extremely annoying to develop graphical apps on.

It's the first Thursday tomorrow, which means it is Michigan Python night at 7pm ET! Antonio Cavallo will be giving a talk comparing uv and micromamba!

He'll compare these two modern tools—their strengths, use cases, and when to use each. Whether you're managing pure Python environments or multi-language stacks, you'll gain practical insights for choosing the right tool. Looking forward to seeing everyone, all are welcome!

MapServer 8.4.1 already available on #conda https://anaconda.org/conda-forge/mapserver

Did a lot of work on conda-forge this week handling various packages for exotic architectures. That includes some Rust CLI tools I got to build on ppc64le (for fun), and CUDA-dependent libs I wanted to enable on Grace Hopper systems using aarch64.

Previously I've relied mostly on the migrator bot, which tends to default to emulation on QEMU apparently, but found out at https://github.com/conda-forge/cargo-auditable-feedstock/pull/4#issuecomment-3291674939 that cross-compilation 1) works and 2) is faster. It's documented at https://conda-forge.org/docs/maintainer/knowledge_base/#how-to-enable-cross-compilation, but it only 'clicked' in my brain recently.

Level of difficulty/pain: 🐍 Python+CUDA/C++ > Rust 🦀

One thing I really love about #CondaForge is that if you're working on a truly awful project that has a lot of dependencies but only builds against outdated versions of them, #Conda can generally come up with a solution, provide a consistent environment using binary packages, and save you lots of time.

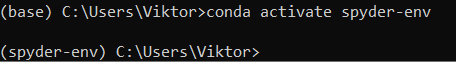

Ok, #direnv to the rescue. Add this to your .envrc to fix the #conda environment on your terminal and on #vscode.

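```
# direnv evaluates .envrc in a non-interactive bash, so load conda's shell
# hook first (assumes the conda executable is on PATH)
eval "$(conda shell.bash hook)"
conda activate whatever-environment

# Point VS Code at the interpreter of the environment activated above
mkdir -p .vscode
cat > .vscode/settings.json << EOF
{
  "python.defaultInterpreterPath": "$(command -v python)",
  "python.terminal.activateEnvironment": false
}
EOF
```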