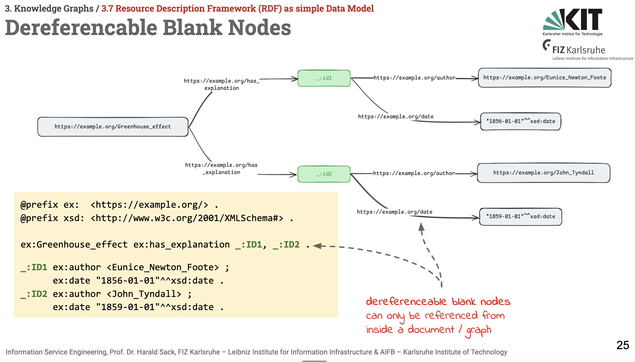

Back in the lecture hall after two exciting weeks at #ESWC2025 and #ISWS2025. This morning, we introduced our students to RDF, RDFS, RDF inferencing, and RDF reification.

#ise2025 #semanticweb #semweb #knowledgegraphs #rdf #reasoning #reification #lecture @fiz_karlsruhe @fizise @KIT_Karlsruhe @sourisnumerique @tabea @enorouzi