These publications showcase Warwick Statistics' commitment to pushing the boundaries of statistical machine learning and AI methodology. Interested in our research? Connect with our department to explore potential collaborations!

#WarwickStats #AIResearch #StatisticalLearning #ReinforcementLearning

#statisticallearning

#AI #DailyPaper #MachineLearning

"Neural Redshift: Random Networks are not Random Functions", Teney et al.

https://arxiv.org/abs/2403.02241

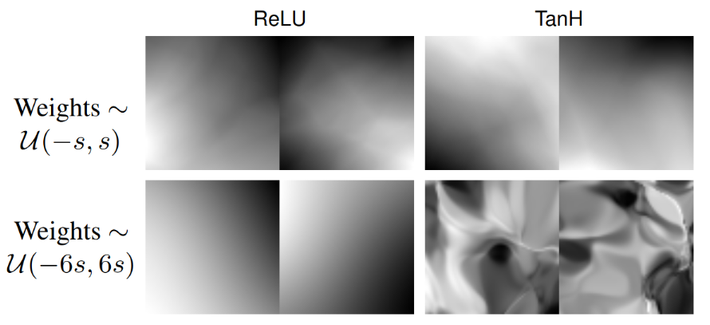

Counters the notion that Neural Networks have an inherent "simplicity bias". Instead, inductive bias depends on components such as ReLUs, residual connections, and LayerNorm, which can be tuned to build architectures with a bias for any level of complexity.
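For anyone who wants to poke at this claim, here is a minimal sketch (not the paper's experimental setup) that samples randomly initialised MLPs on a 1D input and counts slope sign changes as a crude complexity proxy. The helper names (`count_extrema`, `random_mlp`, `mean_complexity`), the choice of sine as the comparison activation, and all widths, depths, and scales are assumptions made for illustration.

```python
import numpy as np

def count_extrema(y):
    """Crude complexity proxy: number of slope sign changes along a 1D curve."""
    slopes = np.sign(np.diff(y))
    slopes = slopes[slopes != 0]  # ignore perfectly flat steps
    return int(np.sum(slopes[1:] != slopes[:-1]))

def relu(z):
    return np.maximum(z, 0.0)

def random_mlp(x, activation, rng, weight_scale=1.0, bias_scale=1.0,
               depth=3, width=64):
    """Evaluate one randomly initialised MLP (scalar in, scalar out) on x."""
    h = x[:, None]
    for _ in range(depth):
        fan_in = h.shape[1]
        # Weights are Gaussian with std = weight_scale / sqrt(fan_in).
        W = rng.normal(0.0, weight_scale / np.sqrt(fan_in), size=(fan_in, width))
        b = rng.normal(0.0, 1.0, size=width) * bias_scale
        h = activation(h @ W + b)
    w_out = rng.normal(0.0, 1.0 / np.sqrt(width), size=width)
    return h @ w_out

def mean_complexity(activation, weight_scale, n_networks=20, seed=0,
                    bias_scale=1.0):
    """Average extrema count over several random networks."""
    rng = np.random.default_rng(seed)
    x = np.linspace(-1.0, 1.0, 2001)
    counts = [
        count_extrema(random_mlp(x, activation, rng, weight_scale, bias_scale))
        for _ in range(n_networks)
    ]
    return float(np.mean(counts))

# Compare how the proxy reacts to activation choice and weight magnitude.
for name, act in [("relu", relu), ("sin", np.sin)]:
    for scale in (1.0, 4.0):
        print(f"{name:4s} weight_scale={scale}: {mean_complexity(act, scale):.1f}")
```

One property of this toy setup can be checked by hand: with `bias_scale=0.0`, a ReLU network on a scalar input is linear on each side of zero, so it has at most one extremum no matter how large `weight_scale` gets, whereas a sine network's output keeps oscillating more as the weights grow.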

It always trips me up when I read “principle component analysis”, “reclusive feature elimination”, “gradual boosting” etc. I’d be concerned about the validity of everything else.

Principal vs principle, I can understand, but reclusive, that is so far out…

from the standpoint of model selection, parsimony often boils down to dimensionality reduction

#modelSelection #parsimony #OccamsRazor #dimensionalityReduction #degreesOfFreedom #complexity #informationTheory #biasVarianceTradeoff #overfitting #underfitting #optimization #parameterTuning #crossValidation #inverseProblems #inference #statisticalLearning #machineLearning #ML #dataScience #modeling #decisionTheory #fitting #regression #classification #residualError #costFunction #performanceLoss
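A small sketch of what that can look like in practice, assuming "dimensionality" here means the number of fitted parameters: fit polynomials of increasing degree to synthetic data and pick the degree with the lowest BIC, which trades fit against parameter count. The data-generating cubic, the noise level, and the helper name `bic_for_polynomial` are all made up for illustration.

```python
import numpy as np

def bic_for_polynomial(x, y, degree):
    """Return (BIC, RSS) for a least-squares polynomial fit of the given degree."""
    coeffs = np.polyfit(x, y, degree)
    residuals = y - np.polyval(coeffs, x)
    rss = float(np.sum(residuals ** 2))
    n, k = len(x), degree + 1  # k = number of fitted coefficients
    # Gaussian-error BIC up to an additive constant shared by all models.
    return n * np.log(rss / n) + k * np.log(n), rss

# Synthetic data: a cubic trend plus Gaussian noise (assumed for this demo).
rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 60)
y = 1.0 - 2.0 * x + 0.5 * x**2 + 2.0 * x**3 + rng.normal(0.0, 0.3, size=x.size)

results = {d: bic_for_polynomial(x, y, d) for d in range(1, 9)}
best_degree = min(results, key=lambda d: results[d][0])

for d, (bic, rss) in results.items():
    print(f"degree={d}  RSS={rss:.3f}  BIC={bic:.2f}")
print("degree selected by BIC:", best_degree)
```

The residual sum of squares keeps shrinking as the degree grows, so fit alone would always favour the largest model; the `k * log(n)` term is what pushes the choice back toward fewer dimensions.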

New #preprint: "Rules and statistics: What if it’s both? A basic computational model of statistical learning in reading acquisition", describing my first attempt at a #ComputationalModel: https://osf.io/5b76z. The model aims to explain how #StatisticalRegularities can be an integral part of orthographic systems, while little evidence points to a relationship between #StatisticalLearning ability and #reading performance. Feedback welcome! :D

I wrote a paper on #StatisticalLearning, #reading, and #dyslexia during maternity leave in 2021, and realise now I have very little recollection of what I actually wrote... https://www.mdpi.com/2076-3425/11/9/1143

At last, the final (corrected) version is available.

Neat modeling of the processing of non-adjacent dependencies by Noémi Éltető and Peter Dayan. I am very grateful to be part of this project.

https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1009866

#statisticallearning #bayesian

"ACC neural ensemble dynamics are structured by strategy prevalence"

https://www.biorxiv.org/content/10.1101/2022.11.17.516909v1

#Neuroscience #Neuro #Brain #AnteriorCingulateCortex #Behavior #Cognition #EnsembleActivity #StatisticalLearning #DecisionMaking

For those interested in linear regression using R, this book is freely available: https://pyoflife.com/linear-regression-using-r-an-introduction-to-data-modeling/

#datamodeling #R #CodingBook #StatisticalModels #statisticalanalysis #statisticallearning #StatisticalProgramming

An #introduction post: I love #Statistics and #Learning, which means that I love both #StatisticalLearning and #LearningStatistics. Currently, most of my professional focus is on #teaching #ResearchMethods and Statistics to #undergraduate #psychmajors. I also love #photography, #travel, and #food (who doesn’t), and have recently figured out a way to combine all of these loves into a three-week #StudyAbroad trip to #Japan where I get to teach a class on #PsychologyOfLanguage.

Hi all ^^

I'm a PhD in ClinicalPsych at the University of Oslo and Modum Bad Psychiatric Hospital.

Currently I am investigating the mental morbidity trajectories in the COVID-19 pandemic, by applying advanced statistical methods (e.g., #networks, #SEM, & #StatisticalLearning) to population-based registry resources, biobanks, and large-scale #ESM data.

Due to my background in PsychMethods, I am also interested in the estimation of network models and its #replicability.

Nice to meet everyone!

@kaitclark Sorry, posted these keywords in a different reply😬 #cognitiveneuroscience #visualperception #visualattention #openscience #eeg #statisticallearning

Found This Week #96:

#Music, #statisticallearning, #smartbus, #smartglass, crypto canon, Google TPUs, Microsoft #autonomousdriving cookbook, Caoga Caoga, Tokyo crowd control and an aircraft emergency slide!

https://medium.com/@dfeehely/found-this-week-96-fb4df33e0f3 https://mastodon.cloud/media/MvVSQGOvrE9OIvZyoZk