Ubuntu Adopts Chrony + NTS for Secure Network Time #news #chrony #security #ubuntu_25.10

https://www.omgubuntu.co.uk/2025/06/ubuntu-chrony-nts-default-25-10

#Chrony

Installing Chrony on a Linux Server (2023)

#Chrony #Linux #NTP #Server #CấuHình #ThờiGian #HệThống Chrony is a powerful and accurate network time synchronization daemon, designed to run efficiently on many kinds of Linux servers. It is a reliable alternative to the traditional NTP (Network Time Protocol) daemon, with a number of notable advantages.

https://maychu.top/2025/06/09/cai-dat-chrony-tren-server-linux-2023/

My overnight tests finished!

In my environment, I get the best #NTP accuracy with #Chrony when using `minpoll -2 maxpoll -2` and not applying any filtering. That is, have the client poll the NTP server 4 times per second. Anything between `minpoll -4` (16x/second) and `minpoll 0` (1x/second) should have similar offsets, but the jitter increases with fewer than 4 polls per second.
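For anyone not used to chrony's poll notation: `minpoll` and `maxpoll` are base-2 exponents of the polling interval in seconds, which is where the rates above come from. A minimal Python sketch of that arithmetic (the function names are illustrative, not part of chrony):

```python
def poll_interval_seconds(poll: int) -> float:
    """Interval between polls for a minpoll/maxpoll value: 2**poll seconds."""
    return 2.0 ** poll


def polls_per_second(poll: int) -> float:
    """Polling rate implied by a minpoll/maxpoll value."""
    return 1.0 / poll_interval_seconds(poll)


# The three settings mentioned in the post.
for poll in (-4, -2, 0):
    interval = poll_interval_seconds(poll)
    rate = polls_per_second(poll)
    print(f"poll {poll:>2}: one poll every {interval} s ({rate:g} polls/s)")
```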

https://scottstuff.net/posts/2025/06/03/measuring-ntp-accuracy-with-an-oscilloscope-2/

Chrony has a `filter` option that applies a median filter to measurements; the manual claims that it's useful for high-update rate local servers. I don't see any consistent advantage to `filter` in my testing and larger filter values (8 or 16) consistently make everything worse.
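To make the idea concrete, here is a rough Python sketch of a median filter that reduces every N measurements to a single sample. It only illustrates the general technique; chrony's real `filter` implementation is more involved, and the function name and offset values below are made up.

```python
from statistics import median
from typing import Iterable, Iterator, List


def median_filter(samples: Iterable[float], length: int) -> Iterator[float]:
    """Yield one median value for every `length` consecutive samples."""
    if length < 1:
        raise ValueError("length must be at least 1")
    window: List[float] = []
    for sample in samples:
        window.append(sample)
        if len(window) == length:
            yield median(window)
            window.clear()


# Hypothetical offsets in nanoseconds, with one outlier (240.0).
offsets_ns = [12.0, 15.0, 11.0, 240.0, 13.0, 14.0, 10.0, 16.0]
print(list(median_filter(offsets_ns, 4)))
```

The outlier is suppressed, but eight measurements turn into only two updates, so a longer filter also means the clock gets corrected less often.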

When polling 4x/second on a carefully constructed test network, NTP on the client machine is less than 2 ns away from #PTP with 20 ns of jitter. I know that PTP on the client is 4 ns away from PTP on the server (w/ 2 ns of jitter), as measured via oscilloscope.

So, you could argue that this counts as single-digit nanosecond NTP error, although with 20 ns of jitter that's probably a bit optimistic. In any case, that's *well* into the range where cable lengths are a major factor in accuracy. It's a somewhat rigged test environment, but it's still much better than I'd have expected from NTP.

Ubuntu 25.10 Questing Quokka will use chrony to replace systemd-timesyncd to improve security.

#Ubuntu #Linux #UbuntuLinux #Computers #Laptops #TechNews #TechUpdates #chrony #QuestingQuokka

Ubuntu 25.10 brings a fresh kernel and improves security https://fosstopia.de/ubuntu-25-10-kernel-617/ #Chrony #Kernel6.17 #LinuxDesktop #Ubuntu25.10 #Ubuntu25.10QuestingQuokka #UbuntuSTS

Okay, this is weird. My one ConnectX-4 system *really* dislikes running #linuxptp.

I'm currently using #Chrony for NTP sync; in *some* cases linuxptp/ptp4l gives better results, but *not* with ConnectX-4 NICs. I'm judging result quality by tracking the RMS offset from `chronyc tracking`, as collected by the Prometheus Chrony collector.
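For anyone who wants the same number without Prometheus, a minimal sketch of pulling the RMS offset out of the `chronyc tracking` text output could look like this in Python. The sample text is illustrative rather than captured from the system described here, and the function name is hypothetical.

```python
import re

# Illustrative excerpt in the layout `chronyc tracking` prints; values are made up.
SAMPLE_TRACKING = """\
Stratum         : 2
System time     : 0.000000012 seconds fast of NTP time
Last offset     : +0.000000009 seconds
RMS offset      : 0.000000040 seconds
"""

RMS_LINE = re.compile(r"^RMS offset\s*:\s*([-+0-9.eE]+)\s+seconds", re.MULTILINE)


def parse_rms_offset_ns(tracking_text: str) -> float:
    """Return the 'RMS offset' field of `chronyc tracking` output in nanoseconds."""
    match = RMS_LINE.search(tracking_text)
    if match is None:
        raise ValueError("no 'RMS offset' line found")
    return float(match.group(1)) * 1e9


print(f"RMS offset: {parse_rms_offset_ns(SAMPLE_TRACKING):.1f} ns")
```

On a live system the text would come from something like `subprocess.run(["chronyc", "tracking"], capture_output=True, text=True).stdout`.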

As a steady state, my test system sees 25-40ns of RMS offset time. Nice and steady. Chrony is talking to 3 local NTP servers plus some pool servers, with `hwtimestamp *` set but no `refclock` config.

Just starting up `ptp4l` in the background (where it syncs PTP time from the network onto the NIC's PTP Hardware Clock, but *doesn't* touch the system clock) causes chrony's tracking error to jump from ~40ns to ~900ns. Stopping `ptp4l` makes the errors go away immediately.

In this state, there shouldn't be *any* interaction between Chrony and ptp4l at all, but I see a more than 20x increase in timing error.

Disabling `hwtimestamp *` doesn't help. I could imagine some weird dependency on the PHC when HW timestamps are in use, but the problem persists with them turned off.

This is on Ubuntu 24.04, with Linux 6.8.0-59 and linuxptp 4.0-1ubuntu1. It's using a MCX456A-ECAx NIC (psid MT_2190110032). I originally observed this with FW 12.27.4000; upgrading to the latest FW (12.28.2302) and rebooting showed no change.

I don't see similar problems w/ ConnectX-5.

*In addition to chrony being unhappy*, ptp4l isn't really syncing correctly, either. It's logging RMS errors that look mostly random, between 20 and 50000ns of error, with no real indication of settling down into sync.

For comparison, my pair of Intel X710 systems run with <15ns of RMS error via the same linuxptp build and same switch infra.

On the other hand, using #PTP to sync time to my web servers is a big win. They're behind a software router and using PTP with Intel NICs drops the sync error from ~10 us to ~5 ns, according to #Chrony. This is mostly because time is bypassing the firewall entirely and being distributed directly by the switch, so there's far less jitter.

I'm not quite sure what a 5 ns time error means when devices are more than 2.5 feet apart, but let's ignore that for now.
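As a back-of-the-envelope check on why distance matters at this scale, here is a small Python sketch of how far a signal travels in a given time. The 0.66 velocity factor for cable is an assumed ballpark value, not something measured on this network, so treat the result as an order-of-magnitude figure.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by SI definition
METERS_PER_FOOT = 0.3048              # exact, by definition


def distance_feet(seconds: float, velocity_factor: float = 1.0) -> float:
    """Distance covered in `seconds` at `velocity_factor` times the speed of light."""
    return SPEED_OF_LIGHT_M_PER_S * velocity_factor * seconds / METERS_PER_FOOT


# 5 ns in vacuum and in a cable with an assumed velocity factor of 0.66.
for label, factor in (("vacuum", 1.0), ("cable (assumed VF 0.66)", 0.66)):
    print(f"5 ns in {label}: {distance_feet(5e-9, factor):.2f} ft")
```

Either way, a few nanoseconds corresponds to a few feet of propagation, so cable runs between devices are the same size as the error being reported.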

@erincandescent If a thing's worth doing, then it's worth over-doing. I'm now running the #Prometheus #Chrony exporter on 5 systems, and using the error metric from its GH page, it looks like I'm seeing 50-100 µs of #NTP error, which should be more than good enough.
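For context, the chronyc documentation gives an upper bound on clock error of |system time offset| + root dispersion + half the root delay. A minimal Python sketch of that bound follows; whether the exporter's suggested metric is exactly this expression is an assumption on my part, and the input values are made up.

```python
def max_clock_error_seconds(
    system_time_offset: float,
    root_dispersion: float,
    root_delay: float,
) -> float:
    """Upper bound on clock error: |offset| + root dispersion + root delay / 2."""
    return abs(system_time_offset) + root_dispersion + 0.5 * root_delay


# Hypothetical values, all in seconds.
bound = max_clock_error_seconds(
    system_time_offset=-5e-6,
    root_dispersion=40e-6,
    root_delay=60e-6,
)
print(f"max clock error: {bound * 1e6:.1f} us")
```

Note that this is a worst-case bound, so the real offset from true time is usually much smaller than the number it produces.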

Huh. Now that I have #otel traces on a bunch of things at home, it's pretty clear that my clocks aren't in sync on every system. They're maybe 1ms off, but it's enough that supposedly-nested trace spans aren't quite nested right.

Which is annoying since I have two local GPS #NTP receivers.

The two "bad" machines were using #systemd-timesyncd to talk to Ubuntu's pool clocks instead of the local clocks. The "good" machines are using #chrony and claim that they're ~2 us off of GPS time.

Now I'm curious -- is this a problem with network latency and Ubuntu's pool, or is that just as good as timesyncd gets?

@mark @M0CUV Perhaps some info here on clocks via GPS, very tantalising rabbit hole....

https://openwrt.org/docs/guide-user/services/ntp/gps

https://github.com/domschl/RaspberryNtpServer

https://github.com/tiagofreire-pt/rpi_uputronics_stratum1_chrony

#gps #stratum1 #ntp #chrony #ntpd

I've got a new nerd project again: my own Stratum 1 NTP time server. Why? Because I can. The time data comes in via GPS, which gives it accuracy in the nanosecond range. The test setup is running; now I just need to print a suitable case and possibly move the antenna outdoors.

https://github.com/macports/macports-ports/pull/27072

GitHub Continuous Integration checks are running.

It's up to someone else with commit access to merge it.

#chrony #MacPorts #NTP #NetworkTimeProtocol #NTPD #OpenSource #GPL #macOS

Anyone upgrading #TrueNAS from their 24.10 release candidate to the full release should be aware that it clobbers a lot of purportedly local override configuration files not accessible from the GUI. Basically, anything not configured by their "middleware" (i.e. their custom #WebUI) is likely to go bye-bye. The #ZFS file systems and pools should be fine, though; just have a backup in case you've had to tweak #AppArmor, #Chrony, /etc/default/*, or anything else that isn't available via the web UI.

NTP – Time Server

A new adminForge service is now available.

Geographic proximity to a time server is important for getting the highest possible time synchronization accuracy. You can use any of our servers in your NTP configuration. All servers also take part in the NTP Pool Project.

Server

IP addresses

Stratum

ntp1.adminforg

https://adminforge.de/tools/ntp-zeitserver/

#Tools #adminforge #chrony #ntp #Timeserver #Zeitserver

New release Ansible role stafwag.ntpd, and clean up Ansible roles

https://stafwag.github.io/blog/blog/2024/08/25/ansible-role-ntpd-released/

I made some time to give some love to my own projects and spent some time rewriting the Ansible role stafwag.ntpd and cleaning up some other Ansible roles.

#ansible #linux #ntpd #chrony #systemd #linux #freebsd #openbsd #netbsd

```
root@zwergenland:~# chronyd -Q -t 3 'server ptbtime1.ptb.de iburst maxsamples 1'
2024-07-07T12:24:35Z chronyd version 4.4 starting (+CMDMON +NTP +REFCLOCK -RTC +PRIVDROP -SCFILTER -SIGND +ASYNCDNS +NTS -SECHASH +IPV6 -DEBUG)
2024-07-07T12:24:35Z Disabled control of system clock
2024-07-07T12:24:36Z System clock wrong by 887290.072863 seconds (ignored)
2024-07-07T12:24:36Z chronyd exiting
```
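To put the reported offset in human terms, a quick Python conversion of the number from the log above (nothing here is chrony-specific):

```python
from datetime import timedelta

# Offset reported by chronyd in the log line "System clock wrong by ... seconds".
error = timedelta(seconds=887290.072863)

hours, remainder = divmod(error.seconds, 3600)
minutes = remainder // 60
print(f"{error.days} days, {hours} h, {minutes} min")
```

So the clock in question is off by a bit more than ten days.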

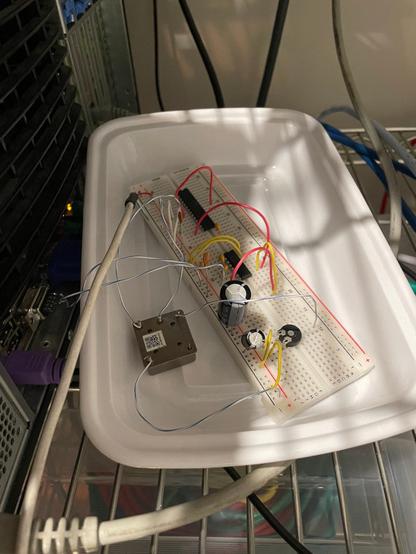

Unsynchronized PPS Experiment

https://blahg.josefsipek.net/?p=611 #electronics #timenuts #chrony #FreeBSD #PPS #OCXO #timekeeping