How I use LLMs – neat tricks with Simon’s `llm` tool

Earlier this year I co-authored a report about the direct environmental impact of AI, which might give the impression I’m massively anti-AI, because it talks about the significant social and environmental impact of using it. I’m not. I’m (still, slowly) working through the content of the Climate Change AI Summer School, and I use AI a fair amount in my job. This post shows some of the ways I use it.

I’ve got into the habit of running an LLM locally on my machine in the background, having it sit there so I can pipe text or quick local queries into it.

I’m using Ollama, mostly with the small Llama 3.2 3B model, and Simon Willison’s wonderful llm tool. I use it like this:

llm "My query goes here"

I’m able to continue discussions using the -c flag like so:

llm -c "continue discussion in an existing conversation"

It’s very handy, and because it’s on the command line, I can pipe text into and out of it.

Doing this with multi-line queries

Of course, you don’t want to write every query on the command line.

If I have a more complicated query, I now do this:

cat my-longer-query.txt | llm

Or, if I want the LLM to respond in a specific way, I can send a system prompt with the -s flag, like so:

cat my-longer-query.txt | llm -s "Reply angrily in ALL CAPS"

Because llm can use multiple models, if I find that the default local model (currently Llama 3.2) is giving me poor results, I can sub in a different one.

So, let’s say I have my query, and I’m not happy with the response from the local llama 3.2 model.

I could then pipe the same query into one of the beefier Claude models instead:

cat my-longer-query.txt | llm -m claude-3.5-sonnet

I’d need an API key and the rest set up obvs, but that’s an exercise left to the reader, as the llm docs are fantastic and easy to follow.

Getting the last conversation

Sometimes you want to fetch the last response you got from an LLM. The -r flag prints just that response:

llm logs -r

Or maybe the entire conversation:

llm logs -c

In both cases I usually either pipe it into my editor, which has a handy markdown preview:

llm logs -c | code -

Or, if I want to make the conversation visible to others, GitHub’s gh command has a handy way to create a gist in a single CLI invocation:

llm logs -c | gh gist create --filename chat-log.md -

This will return a URL for a secret gist (unlisted, but readable by anyone who has the link) that I can share with others.

Addendum – putting a handy wrapper around these commands

I have a very simple shell function, ve, that opens a temporary file for me to jot stuff into and, once I save and close it, echoes the content to STDOUT using cat.

(If these examples look different from regular bash / zsh, it’s because I use the fish shell).

This then lets me write queries in an editor, which I usually have open, without needing to worry about cleaning up the file I was writing in. Because llm stores every request and response in a local sqlite database, I’m not worried about needing to keep these files around.

    function ve --description "Open temp file in VSCode and output contents when closed"
        # Create a temporary file
        set tempfile (mktemp)

        # Open VSCode and wait for it to close
        code --wait $tempfile

        # If the file has content, output it and then remove the file
        if test -s $tempfile
            cat $tempfile
            rm $tempfile
        else
            rm $tempfile
            return 1
        end
    end
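For anyone on bash or zsh rather than fish, a rough equivalent might look like the sketch below. It is not from the original setup: it assumes, like the fish version, that VS Code's code command is on your PATH, and the rc variable is just a name picked for illustration.

```shell
# Sketch of a bash/zsh counterpart to the fish ve function (an assumption, not the original).
ve() {
  local tempfile rc
  # Create a temporary file to write the query in
  tempfile="$(mktemp)" || return 1

  # Open VS Code and block until the file's tab is closed
  code --wait "$tempfile"

  # If the file has content, print it; otherwise signal failure
  if [ -s "$tempfile" ]; then
    cat "$tempfile"
    rc=0
  else
    rc=1
  fi

  # Clean up in both cases
  rm -f "$tempfile"
  return "$rc"
}
```

It can be piped the same way as the fish version, and it returns a non-zero status when the file is left empty.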

This lets me do this now for queries:

ve | llm

One-liner queries

I’ve also since set up another shortcut like this for quick questions I’d like to see the output from, like so:

    function ask-llm --description "Pipe a question into llm and display the output in VS Code"
        set -l question $argv
        llm $question | code -
    end

This lets me do this now:

ask-llm "My question that I'd like to ask"
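A bash or zsh version of the same shortcut could be sketched like this. It is an assumption rather than part of the original setup, and it is named ask_llm (with an underscore) because hyphens in function names are not portable across POSIX-style shells.

```shell
# Sketch of a bash/zsh counterpart to the fish ask-llm function (an assumption, not the original).
ask_llm() {
  # Pass every argument through to llm as the prompt,
  # then hand the reply to VS Code, which reads standard input when given "-"
  llm "$@" | code -
}
```

Usage is the same as before: ask_llm "My question that I'd like to ask".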

Do you use this all the time?

Not really.

I started using Perplexity last year, as my way in to experimenting with Gen AI, after hearing friends explain it was a significant improvement on regular web search services like Google, which keep getting worse over time. I also sometimes use Claude because Artifacts are such a neat feature.

I also experimented with Hugging Face’s HuggingChat, but over time, I’ve got more comfortable using llm.

If I wanted a richer interface than what I use now, I’d probably spend some time using Open WebUI. If I were to strategically invest in building a more diverse ecosystem for Gen AI, it’s where I would spend some time. Mozilla, or anyone interested in less consolidation, this is where you should be investing time and money if you insist on jamming AI into things.

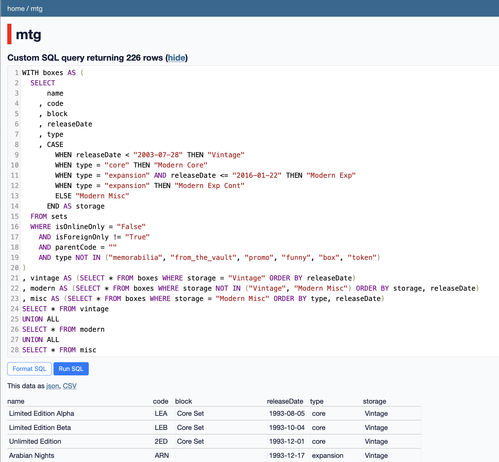

In my dream world, almost every Gen AI query I make is piped through llm, because that means all the conversations are stored in a local sqlite database that I can do what I like with.

In fact, I’d probably pay an annual fee (preferably to Simon!) to have my llm sqlite database backed up somewhere safe, or accessible from multiple computers, because as I use llm more, it becomes more valuable to me, and the consequences of losing it, or corrupting it in some way become greater.
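In the meantime, a do-it-yourself copy is possible. The sketch below assumes the llm logs path subcommand prints the location of the database; backup_llm_logs and its destination directory argument are made-up names for illustration. It uses a plain cp, which is only safe while llm is not writing to the database, so treat it as a starting point rather than a robust backup.

```shell
# Sketch: copy the llm SQLite log database into a backup directory.
# backup_llm_logs and its argument are hypothetical names for this example.
backup_llm_logs() {
  local dest_dir src
  dest_dir="$1"

  # Ask llm where its log database lives
  src="$(llm logs path)" || return 1
  [ -f "$src" ] || return 1

  mkdir -p "$dest_dir" || return 1

  # Timestamp the copy so earlier backups are not overwritten
  cp "$src" "$dest_dir/logs-$(date +%Y%m%d-%H%M%S).db"
}
```

Pointing it at a synced folder, for example backup_llm_logs ~/some-synced-folder, would be one way to get an off-machine copy.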

If you have had success using llm that way, I’d love to hear from you.

#AI #datasette #generativeAi #llm