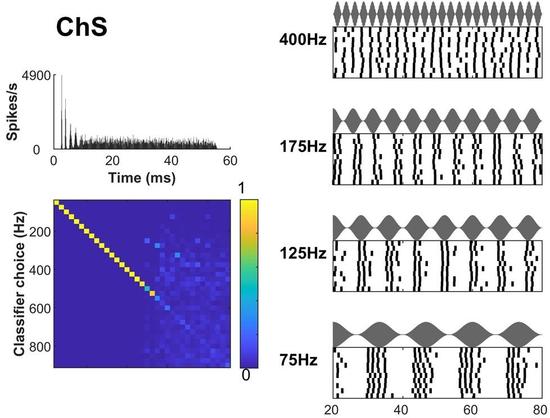

Slow amplitude fluctuations in sounds, critical for #SpeechRecognition, seem poorly represented in the #brainstem. This study shows that overlooked intricacies of #SpikeTiming represent these fluctuations, reconciling low-level neural processing with #perception @plosbiology.org 🧪 https://plos.io/3FJ4adI
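One standard way to quantify how precisely spike timing follows a slow amplitude fluctuation is vector strength. Each spike is mapped to a phase of the modulation cycle and treated as a unit vector, and the length of the mean vector is taken. This is a minimal sketch of that classic measure, not necessarily the analysis used in the study. The function name and the spike trains are made up for illustration, and a 4 Hz modulation is assumed as a stand-in for a slow, speech-like envelope.

```python
import math


def vector_strength(spike_times_s, mod_freq_hz):
    """Vector strength of spike times relative to a modulation frequency.

    Illustration only: a standard phase-locking measure, not necessarily the
    study's analysis. Each spike time t is mapped to the phase 2*pi*f*t and
    treated as a unit vector; the result is the length of the mean vector.
    1.0 means every spike falls at the same phase; near 0.0 means no phase preference.
    """
    if not spike_times_s:
        raise ValueError("need at least one spike")
    sum_cos = sum(math.cos(2 * math.pi * mod_freq_hz * t) for t in spike_times_s)
    sum_sin = sum(math.sin(2 * math.pi * mod_freq_hz * t) for t in spike_times_s)
    return math.hypot(sum_cos, sum_sin) / len(spike_times_s)


# Made-up spike trains for a 4 Hz amplitude modulation (250 ms period).
locked = [0.05 + k * 0.25 for k in range(8)]  # same phase in every cycle
spread = [0.05 + k * 0.25 + (k % 4) * 0.0625 for k in range(8)]  # quarter-cycle steps
print(round(vector_strength(locked, 4.0), 3))  # 1.0
print(round(vector_strength(spread, 4.0), 3))  # 0.0
```

A value near 1 means the spikes cluster at one phase of the envelope. A value near 0 means their timing says little about where the envelope is in its cycle, even if the firing rate is unchanged.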

#SpeechRecognition

The Marvel of Auditory and Cognitive Networks Working Together in Your Brain

#AuditoryProcessing #BrainScience #NeuralNetworks #CognitiveScience #Hearing #SpeechRecognition #BrainPlasticity #CentralNervousSystem #SoundProcessing #Neuroscience #ListeningSkills #BrainHealth #AuditoryDisorders #LearningAndMemory

🌟 Excited to share Thorsten-Voice's YouTube channel! 🎥 🗣️🔊 ♿ 💬

Thorsten presents innovative TTS solutions and a variety of voice technologies, which makes his channel an excellent starting point for anyone interested in open-source text-to-speech. Whether you're a developer, accessibility advocate, or tech enthusiast, you'll find valuable insights and resources there. Don't miss out on this fantastic content! 🎬

Follow him here: @thorstenvoice

Or on YouTube: https://www.youtube.com/@ThorstenMueller

#Accessibility #FLOSS #TTS #ParlerTTS #OpenSource #VoiceTech #TextToSpeech #AI #CoquiAI #VoiceAssistant #Sprachassistent #MachineLearning #AccessibilityMatters #Inclusivity #FOSS #Coqui #VoiceTechnology #KünstlicheStimme #Python #Rhasspy #STT #SpeechSynthesis #SpeechRecognition #Sprachsynthese #ArtificialVoice #VoiceCloning #Spracherkennung #CoquiTTS #voice #a11y #ScreenReader

Hi @thorstenvoice, I just found your channel and I'm impressed! Your work on TTS is fantastic and so important for accessibility in the FLOSS community. Keep it up! #AccessibilityMatters #FLOSS #TTS #OpenSource #Inclusivity #FOSS #Coqui #AI #CoquiAI #VoiceAssistant #Sprachassistent #VoiceTechnology #KünstlicheStimme #MachineLearning #Python #Rhasspy #TextToSpeech #VoiceTech #STT #SpeechSynthesis #SpeechRecognition #Sprachsynthese #ArtificialVoice #VoiceCloning #Spracherkennung #CoquiTTS #voice #a11y #ScreenReader

Jargonic Sets New SOTA for Japanese ASR

https://aiola.ai/blog/jargonic-japanese-asr/

#HackerNews #Jargonic #SOTA #Japanese #ASR #AI #Technology #SpeechRecognition #Innovation

Nvidia Releases High-Speed Parakeet AI Speech Recognition Model, Claims Top Spot on Leaderboard

#Nvidia #AI #ASR #SpeechRecognition #SpeechToText #OpenSource #MachineLearning #Parakeet #NeMo #HuggingFace #AIModels

Yesterday I ordered food online, but something went wrong with the order, so I contacted support. They called me back, and for a moment I thought it was a bot or a recorded voice. I hated it. Then I realized it was a human on the line.

I was planning to build an LLM + TTS + speech recognition pipeline and deploy it on an A311D, to see whether I could practice a British accent with it. Now I'm rethinking what I want to do. The direction we are heading in doesn't lead anywhere good. I would hate to have to talk to a voice-enabled chatbot instead of a human support agent.

Don't get me wrong: voice-enabled chatbots have plenty of good uses. But replacing humans with LLMs is not one of them.

#LLM #AI #TTS #ASR #speechrecognition #speechai #ML #MachineLearning #chatbot #chatbots #artificialintelligence

I'm exploring ways to improve audio preprocessing for speech recognition for my [midi2hamlib](https://github.com/DO9RE/midi2hamlib) project. Do any of my followers have expertise with **SoX** or **speech recognition**? Specifically, I’m seeking advice on: 1️⃣ Best practices for audio preparation for speech recognition. 2️⃣ SoX command-line parameters that can optimize audio during recording or playback.

https://github.com/DO9RE/midi2hamlib/blob/main/tests/speech_menu.sh #SoX #SpeechRecognition #OpenSource #AudioProcessing #ShellScripting #Sphinx #PocketSphinx #Audio Retoot appreciated.
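As a starting point for the first question, here is a minimal sketch of typical preparation steps before handing audio to PocketSphinx: downmix to mono, remove any DC offset, and peak-normalize, keeping 16-bit PCM. The 16 kHz, 16-bit mono target is an assumption based on PocketSphinx's default English acoustic model. `preprocess_wav` and the -3 dBFS peak target are hypothetical choices, and resampling is deliberately left to SoX (its `rate` effect) rather than reimplemented here. In SoX terms the steps roughly correspond to the `remix`, `highpass` and `norm` effects.

```python
import array
import sys
import wave

# Assumption: PocketSphinx's default English model expects 16 kHz, 16-bit mono PCM.
TARGET_RATE_HZ = 16000


def preprocess_wav(in_path, out_path, target_peak_dbfs=-3.0):
    """Hypothetical helper: downmix to mono, remove DC offset, peak-normalize.

    Only 16-bit PCM input already at TARGET_RATE_HZ is handled; resample with
    SoX first. Returns the processed samples so the caller can inspect them.
    """
    with wave.open(in_path, "rb") as src:
        channels = src.getnchannels()
        rate = src.getframerate()
        if src.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM input")
        raw = src.readframes(src.getnframes())
    if rate != TARGET_RATE_HZ:
        raise ValueError(f"expected {TARGET_RATE_HZ} Hz, got {rate} Hz; resample first")

    samples = array.array("h")
    samples.frombytes(raw)
    if sys.byteorder == "big":  # WAV sample data is little-endian
        samples.byteswap()
    if not samples:
        raise ValueError("input contains no audio")

    # 1. Downmix: average the interleaved channels of each frame.
    mono = [sum(samples[i:i + channels]) / channels
            for i in range(0, len(samples), channels)]

    # 2. Remove DC offset by subtracting the mean.
    mean = sum(mono) / len(mono)
    centered = [x - mean for x in mono]

    # 3. Peak-normalize so the loudest sample sits at target_peak_dbfs.
    peak = max(abs(x) for x in centered)
    target = 32767 * 10 ** (target_peak_dbfs / 20)
    gain = target / peak if peak > 0 else 1.0
    out = array.array("h", (max(-32768, min(32767, round(x * gain)))
                            for x in centered))

    with wave.open(out_path, "wb") as dst:
        dst.setnchannels(1)
        dst.setsampwidth(2)
        dst.setframerate(rate)
        data = array.array("h", out)
        if sys.byteorder == "big":
            data.byteswap()
        dst.writeframes(data.tobytes())
    return out
```

Peak normalization is used rather than loudness normalization because it is simple and cannot clip. Whether it actually helps recognition accuracy on radio audio would need testing against real recordings.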

Now that my #wake_word_detection #research has borne fruit, I plan to continue working in the voice domain. I would love to train a #TTS model with a #British accent that I could use to practice.

I was wondering whether I could run inference on the #A311D #NPU. However, from skimming the papers for different models, reasonable inference performance on the A311D seems unlikely. Even training these models on my entry-level #IntelArc #GPU would be painful.

Maybe I could just fine-tune an existing model instead. I am also considering #GeneticProgramming for some components of these TTS models to see whether it improves inference performance.

#FastSpeech2 and #SpeedySpeech look promising. I wonder how natural their accents will sound, but they would be good starting points.

BTW, if anyone needs open-source models, I would love to work on them as a freelancer in an #opensource job. Even just access to compute resources would be helpful.

#forhire #opensourcejob #job #hiring

#AI #VoiceAI #opensourceai #ml #speechrecognition #speechsynthesis #texttospeech #machinelearning #artificialintelligence #getfedihired #FediHire #hireme #wakeworddetection

When I use voice commands to add VIANDOX to the shopping list 🤦‍♂️

Jargonic: Industry-Tunable ASR Model

https://aiola.ai/blog/introducing-jargonic-asr/

#HackerNews #Jargonic #ASR #Industry #AI #Model #SpeechRecognition

T-Pro Expands Footprint in India with New AI-Powered Healthcare Offices in Chennai and Bangalore

#TProExpansion #HealthcareInnovation #AIinHealthcare #ClinicalDocumentation #GlobalGrowth #AIResearch #MedtechIndia #DigitalHealth #IrelandIndiaPartnership #ChennaiTech #BangaloreInnovation #AIForHealth #EnterpriseIreland #SpeechRecognition #MedtechSolutions #HealthcareAI #FutureOfHealthcare #TechExpansion #IrishMedtech #HealthcareRevolution

Vibe is an #OpenSource desktop client (macOS, Windows, Linux) for running Whisper locally to accurately transcribe or caption video and audio: https://thewh1teagle.github.io/vibe/ Source code: https://github.com/thewh1teagle/vibe/ It's easier to use than what I was using before (WhisperDesktop). The default settings use the medium Whisper model, which has been good enough in my experience.

#Accessibility #A11y #AI #SpeechRecognition #EdTech

For language learning, do you think it's a good idea to practice with an AI speech recognition engine and an AI speech synthesis engine?

I'm specifically interested in British English and German.

#AI #ML #LanguageLearning #Learning #SprachenLernen #British #English #DeutschLernen #EnglishLearning #speechrecognition #speechtotext #speechrecognitionsoftware #speechsynthesis #SpeechSynthesizer

Speech recognition systems struggle with accents and dialects, risking problems in critical fields like healthcare and emergency services. Imagine calling 911 and the AI used to screen out non-emergency calls can’t understand you.

A Spanish language professor explains: https://theconversation.com/sorry-i-didnt-get-that-ai-misunderstands-some-peoples-words-more-than-others-239281 #AI #speechrecognition
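Gaps like these are usually reported as word error rate (WER): the word-level edit distance (substitutions + deletions + insertions) between what the system transcribed and a reference transcript, divided by the number of reference words. This is a minimal sketch assuming whitespace tokenization and case-insensitive comparison; the example sentence is invented, and real evaluations usually normalize punctuation as well.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Assumption: words are whitespace-separated and compared case-insensitively.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] = edit distance between the first i reference words
    # and the first j hypothesis words (Levenshtein over words).
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# Invented example: one substituted word out of four.
print(word_error_rate("please send an ambulance", "please send a ambulance"))  # 0.25
```

Computing the mean WER separately for groups of speakers with different accents is one way to make the disparity the article describes measurable.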

#UnplugTrump - Tip 5:

Say goodbye to Alexa and other voice assistants that listen in on and analyze your conversations. Use a privacy-friendly alternative instead, such as OpenVoiceOS, an open-source voice assistant that is developed by an active community and runs on a Raspberry Pi. That way, you stay in control of your data.

#Alexa #OpenVoiceOS #Sprachassistent #VoiceControl #SpeechRecognition #datenschutz #privacy

MLCommons and Hugging Face have launched a multilingual speech dataset with over one million hours of audio #AI #AIResearch #HuggingFace #MLCommons #MachineLearning #NLP #SpeechDataset #SpeechRecognition #VoiceRecognition #VoiceTech

Unlock the power of Speech Recognition with advanced Audio Codecs! Dive into how audio processing enables seamless interaction in IoT, automotive, and smart devices.

Explore the future of voice-driven tech. Read more: https://moschip.com/blog/speech-recognition-with-audio-codecs/

#SpeechRecognition #AudioCodecs #IoT

Using LLMs to clean up the output of speech recognition has been a game changer for me in the past year:

https://blog.nawaz.org/posts/2023/Dec/cleaning-up-speech-recognition-with-gpt/

Note: I've improved my workflow since that post. I should write a follow-up.

University of Copenhagen: Coming soon – offline speech recognition on your phone. “More than one in four people currently integrate speech recognition into their daily lives. A new algorithm developed by a University of Copenhagen researcher and his international colleagues makes it possible to interact with digital assistants like ‘Siri’ without any internet connection. The innovation […]