The Anemoia Device is a tangible, multisensory AI system that uses generative AI to translate analogue photographs into scent to create synthetic memories. https://hackernoon.com/mit-researchers-build-ai-device-that-turns-old-photographs-into-custom-scents #multimodalai

#multimodalAI

Kakao Corp. has unveiled its advanced multimodal AI models, Kanana-o and Kanana-v-embedding, optimized for Korean language and culture, demonstrating superior performance in speech, image, and text processing compared to global competitors.

#YonhapInfomax #KakaoCorp #KananaO #MultimodalAI #KoreanLanguage #AIModelPerformance #Economics #FinancialMarkets #Banking #Securities #Bonds #StockMarket

https://en.infomaxai.com/news/articleView.html?idxno=95316

Z.ai Launches GLM-4.6V AI Model to Let AI Agents See Natively

#AI #GenAI #MultimodalAI #AgenticAI #OpenSourceAI #ComputerVision #Zai #ZhipuAI #GLM46V #ChinaAI #AIModels

Google is Finalizing ‘Nano Banana 2 Flash’ For Expected December Release

#AI #NanoBanana #GenAI #Google #Alphabet #AIImageGeneration #GoogleGemini #DeepMind #AIModels #AIImageEditing #MultimodalAI

"GPT-4V revolutionizes vision-language tasks with human-level accuracy! #GPT4V #MultimodalAI #VisionLanguage"

GPT-4V, a multimodal AI model, has achieved human-level performance on vision-language tasks by integrating advanced vision encoders with large language models. The model's novel attention mechanism enables more effective cross-modal understanding, allowing it to reason about images with unprecedented...

#GPT-4V #MultimodalAI #Vision-LanguageUnderstanding #LargeLanguageModels

"GPT-4V revolutionizes vision-language tasks with human-level accuracy #MultimodalAI #GPT4V #VisionLanguage"

The GPT-4V model has achieved human-level performance on vision-language tasks by integrating advanced vision encoders with large language models, enabling accurate image understanding and reasoning. This breakthrough is attributed to a novel attention mechanism and improved training techniques that facilitate...

#GPT-4V #MultimodalAI #Vision-LanguageTasks #LargeLanguageModels

"GPT-4V revolutionizes AI vision with human-level understanding, leveraging novel attention mechanisms #GPT4V #MultimodalAI #VisionLanguage"

The GPT-4V model has achieved human-level performance on vision-language tasks by integrating advanced vision encoders with large language models, enabling accurate image understanding and reasoning. A novel attention mechanism is a key innovation in GPT-4V, allowing for improved...

#GPT-4V #MultimodalAI #Vision-LanguageModels #AttentionMechanisms

A new multimodal artificial intelligence (AI) system can "remember" images and video. The demo uses Shark Tank to test its ability to recognize video content and answer questions about it. Potential applications include storing and retrieving information from visual data.

#AI #tritueNhanTao #multimodalAI #congnghe #technology #SharkTank

Qwen3‑VL‑235B‑Instruct now powers data distillation that leaves behind clear reasoning traces. By mixing multimodal domains and a clever RL recipe with a composite reward, it boosts answer diversity and transparency. Curious how this opens new doors for open‑source AI? Dive into the details. #Qwen3VL235B #DataDistillation #ReasoningTraces #MultimodalAI

🔗 https://aidailypost.com/news/qwen3-vl-235b-instruct-used-data-distillation-creates-reasoning-traces

Kling just unveiled Video O1, an all‑in‑one model that fuses a Multimodal Visual Language bridge with a powerful transformer backbone. It promises tighter AI‑video integration and hints at open‑source collaborations. Curious how this stacks against Google Veo 3.1? Dive in for the full rundown. #Kling #VideoO1 #MultimodalAI #MVL

🔗 https://aidailypost.com/news/kling-launches-video-o1-allinone-model-mvl-bridge-using-transformer

Mistral just introduced Mistral 3 — a fully open family of models from efficient 3B/8B/14B versions to the new sparsely-activated Mistral Large 3 with 675B parameters and strong multimodal capabilities. A notable step for open, high-performance AI.

#OpenSourceAI #FoundationModels #MultimodalAI


Mistral has introduced Mistral 3, a new generation of open models, ranging from small, efficient edge models (3B/8B/14B) to Mistral Large 3, a sparsely activated 675B-parameter model with strong multimodality and top-tier performance. Everything is released under Apache 2.0 and optimized for NVIDIA hardware.

New hands-on tutorial on The Main Thread 🚀

I built a real-time emotion detector in Java using #Quarkus, #JavaCV, #WebSocketsNext, and #LangChain4j.

The pipeline streams webcam frames → detects faces locally → sends only the cropped face to a multimodal LLM (GPT-4o/Gemini) for emotion classification.

High-throughput + low latency = fun and surprisingly practical.

👉 https://www.the-main-thread.com/p/real-time-emotion-detection-quarkus-langchain4j-webcam
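A minimal sketch of the "detect locally, send only the crop" idea from the post above, written in Python rather than the tutorial's Java stack. Everything here is an assumption for illustration: the frame is a small grayscale grid, `detect_face` is a hypothetical stand-in for a real local detector, and the request fields are made up rather than taken from the GPT-4o or Gemini APIs.

```python
import base64
import json


def crop(frame, box):
    """Return the part of `frame` covered by box = (x, y, width, height)."""
    x, y, w, h = box
    return [row[x:x + w] for row in frame[y:y + h]]


def detect_face(frame):
    """Hypothetical local detector: returns a fixed box, or None for tiny frames."""
    if len(frame) < 4 or len(frame[0]) < 4:
        return None
    return (1, 1, 2, 2)


def build_request(frame, labels=("happy", "sad", "angry", "neutral")):
    """Build the payload for the remote model from the cropped face only."""
    box = detect_face(frame)
    if box is None:
        return None  # no face found, so nothing is sent at all
    face = crop(frame, box)
    raw = bytes(pixel for row in face for pixel in row)
    return {
        # Hypothetical field names, not a real provider schema.
        "prompt": "Classify the emotion as one of: " + ", ".join(labels),
        "image_b64": base64.b64encode(raw).decode("ascii"),
        "width": box[2],
        "height": box[3],
    }


# A 4x4 grayscale "frame" with pixel values 0..15.
frame = [[r * 4 + c for c in range(4)] for r in range(4)]
request = build_request(frame)
print(json.dumps(request, indent=2))
```

The point of the design is that the full frame never leaves the machine: the payload carries only the cropped region, which keeps requests small and limits what the remote model sees.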

Black Forest Labs Hits $3.25B Valuation, Pivots to ‘Visual Intelligence’ with Series B

#AI #GenAI #BlackForestLabs #VentureCapital #OpenSourceAI #VisualIntelligence #FLUX2 #MultimodalAI #AIReasoning

A user has tracked down why multimodal API calls to a vLLM server produced worse results than Open WebUI: the difference comes from using qwen-vl-utils to preprocess the images. Useful information for anyone running into the same problem.

#vLLM #OpenWebUI #MultimodalAI #LLM #AI #qwen_vl_utils #TiềnXửLýẢnh

https://www.reddit.com/r/LocalLLaMA/comments/1p85tiw/an_update_to_why_multimodal_api_calls_to_vllm/

Gemini 3 Pro pulls ahead in coding, matching, and creative writing, outpacing GPT‑5.1 and Claude 4.5, with ARC‑AGI‑2 among the benchmarks. Its multimodal grasp of visual data gives it a clear edge. Curious how the new benchmark looks? Dive into the full analysis. #Gemini3Pro #LLM #MultimodalAI #AIResearch

🔗 https://aidailypost.com/news/gemini-3-pro-shows-clear-lead-coding-matching-creative-writing

Gemini 3 Pro just turned a UI mockup into working React code in just two prompts—plus it squashed several bugs. The new multimodal AI demo shows how fast code generators can go from screenshot to functional component. Curious how it works? Dive in for the details. #Gemini3Pro #ScreenshotToCode #ReactDev #MultimodalAI

🔗 https://aidailypost.com/news/gemini-3-pro-builds-screenshot-to-code-app-two-prompts-fixes-bugs

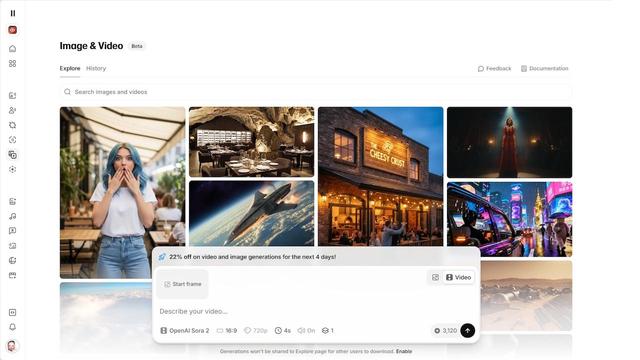

ElevenLabs Pivots to Image and Video Generation with OpenAI’s Sora 2 Pro and Google’s Veo 3.1

#AI #ElevenLabs #GenAI #AIVideo #Sora #Veo #Software #ContentCreation #MultimodalAI #CreativeTech

Google Gemini 3 launches and stuns the world: a new open-source partnership for building next-generation AI agents!

https://viralnewstatus.blogspot.com/2025/11/3-ai.html

Google’s Gemini 3 launch shocks the world with a new open-source partnership powering the next generation of advanced AI agents designed to redefine.

#Gemini3 #GoogleAI #AIAgents #NextGenAI #AISummit #OpenSourceAI #AIUpdate #TechNews #FutureAI #AIRevolution #MultimodalAI #AIDevelopment #NewRelease #AIFeatures #AIvsGPT #GeminiLaunch #AITrends

Gemini 3.0 from Google sets a new bar for AI with multimodal understanding, advanced reasoning, and powerful coding features, now live across Google Search, AI Studio, and developer APIs.