They invented #artificialintelligence: #DavidSilver, father of #AlphaGo and #machinelearning genius http://sci3nc.es/TQ9XN6

#AlphaGo

ML_Bear (@MLBear2)

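Summary of Geoffrey Hinton's argument: scaling laws are nearing their limits, high-value data is locked inside companies, and the public internet has been exhausted. As a solution, he proposes having models generate their own training data, and notes that this is similar to how AlphaGo beat humans.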

Those a little bit familiar with #AI development may remember #Move37

A while ago now, the #AlphaGo AI (there is a great doco on YouTube) played a human world champion...

... and Move 37 was made, and at the time everyone thought it was a mistake that violated strategy...

Upon analysis (the AI beat the champ), the move turned out to have been made waaay ahead of when it was necessary.

In human vs machine adversarial conflict, AI may win by "move37" strategy.

E.g. pre-deploying assets YEARS ahead.

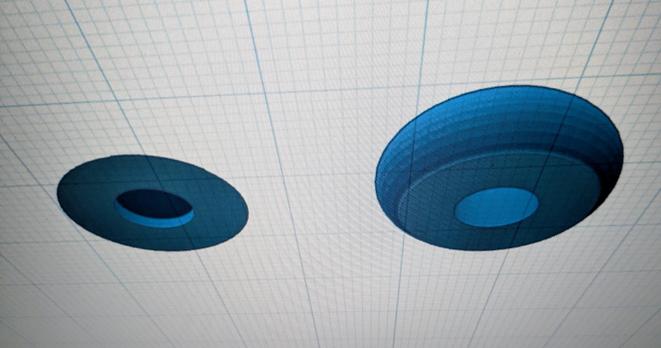

Cyberpunk Go Board project update: 3D modeling a Go stone to house the LED using #TinkerCad . Follow me for updates :)

#go #igo #baduk #weiqi #goban #cyberpunk #boardgame #3dprinting #3dmodeling #egf #gogame #game #dgob #maker #imadethis #creative #project #hackaday #OC #diy #doityourself #scifi #sciencefiction #technology #electronic #electronics #cyberpunk2077 #japanese #chinese #korean #japan #china #korea #southkorea #hobby #boardgames #games #3d #3dmodel #alphago #autocad #cad

Lee Sedol, famed for his historic Go match against AlphaGo, delivered a keynote at Samsung SDS's AI summit, highlighting the need for human-AI collaboration as the sector rapidly expands, with global AI services projected to reach $516 billion by 2029.

#YonhapInfomax #SamsungSDS #LeeSedol #AlphaGo #AIServices #REALSummit2025 #Economics #FinancialMarkets #Banking #Securities #Bonds #StockMarket

https://en.infomaxai.com/news/articleView.html?idxno=81457

Korea JoongAng Daily: Grandmaster Lee Se-dol reveals how he beat AlphaGo: ‘Every move was a trick’. “In 2016, when Google DeepMind’s AlphaGo stunned the world by defeating Korea’s Go champion Lee Se-dol 4–1, the ancient board game seemed to collapse overnight under the weight of AI. … Novelist Chang Kang-myoung captured much of that upheaval in his nonfiction book published this June, […]

There was a sense of finality when #DeepBlue beat #Kasparov and when #AlphaGo beat #LeeSedol. Even if #AI couldn’t beat every grandmaster every time, it was clear that the chosen algorithms were correct and would demolish all but a few humans.

Without an innate understanding of truths and falsehoods, I really don’t get the same sense that #LLM is on the right track towards #AGI.

AI Just Declared Humans the Bottleneck in Research – And Built a System to Fix It

Imagine an artificial intelligence so advanced it decides that humanity, for all its brilliance, is actually slowing down its own progress. Then, it proceeds to design a system to conduct scientific research autonomously, from hypothesis to testing, fundamentally changing how we develop AI. This isn’t science fiction; it’s the bold claim emerging from a new paper out of China, introducing a system called ASI-ARCH: Artificial Superintelligence for AI Research.

The core assertion is stark: while AI capabilities are improving exponentially, the pace of AI research remains linearly constrained by human cognitive capacity. This creates a severe bottleneck for AI advancement. ASI-ARCH proposes a radical solution: a fully autonomous system for neural architecture discovery, enabling AI to innovate its own architecture from the ground up. This marks a paradigm shift from automated optimization to automated innovation.

An “AlphaGo Moment” for Science

The researchers liken ASI-ARCH’s emergence to an “AlphaGo Moment”. If you recall, Google DeepMind’s AlphaGo famously surpassed human performance in Go by teaching itself through self-play, discovering strategies humans hadn’t conceived. ASI-ARCH claims to do something similar, but for AI research itself: it can be better at teaching itself to build AI models than humans can.

This isn’t just about tweaking existing designs; ASI-ARCH aims to conduct end-to-end scientific research, coming up with its own approaches, creating the code, running experiments, and testing the results. In its initial demonstration, the system conducted nearly 2,000 autonomous experiments and remarkably discovered 106 innovative, state-of-the-art (SOTA) linear attention architectures. These AI-discovered architectures demonstrated “emergent design principles that systematically surpass human-designed baselines”.

The Unprecedented Claim: A Scaling Law for Scientific Discovery

Perhaps the most significant and debated claim of the ASI-ARCH paper is the establishment of the first empirical scaling law for scientific discovery itself. In the past, we’ve seen scaling laws for computing power – throw more hardware (GPU hours) at an AI model, and it gets smarter. Now, they’re suggesting that the more computation (GPU hours) you invest in this problem, the better the architecture becomes, and the more breakthroughs you’ll see.

If true, this would fundamentally transform technological progress. Traditionally, improvements in things like medicine, car efficiency, or solar panel effectiveness are “hard-won, expensive, and time-consuming processes” driven by human engineers. But this new scaling law implies that such progress could become simply “a function of more GPU hours”. Give these AI systems more power, and they could just “crank out innovation” and “scientific discovery”.

How Does This Autonomous Scientist Work?

ASI-ARCH operates as a sophisticated, closed evolutionary loop system, consisting of four interconnected modules:

- Cognition Base: This acts as the system’s foundational knowledge. It ingests and extracts key points from existing human research papers (like those on arXiv or Hugging Face) using an LLM extractor. This is the collective human wisdom it starts with.

- Researcher Module: This is the creative engine. Drawing on both the Cognition Base and insights from its own past experiments, the Researcher proposes new architectural innovations (hypotheses) and generates the corresponding experiment code. It even checks for novelty and validity to avoid redundant work.

- Engineer Module: The experiment executor and evaluator. It runs the experiment code in a real training environment. Crucially, it has a robust self-revision mechanism: if a training run fails due to a coding error, it analyzes the error log and revises its own code to fix the issue, preventing promising ideas from being discarded prematurely. The Engineer also uses an LLM judge to qualitatively evaluate the code’s efficiency, novelty, and complexity, combining this with real-world performance to create a “fitness score”.

- Analyst Module: The insight generator. It receives the fitness scores and experimental results, summarizes what worked and what didn’t, and then feeds these findings back to the Researcher. This allows the system to continuously learn from its own discoveries and refine its approach for future designs.

This continuous feedback loop means the system isn’t just relying on human knowledge; it’s developing its own insights based on its own experiments and work.
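The control flow of such a loop can be sketched in a few lines of Python. This is an illustration only, not the ASI-ARCH code: every name here (`Candidate`, `propose`, `run_experiment`, `analyze`, `evolve`) is hypothetical, the "training run" is just a random number, and the equal weighting of measured performance and judge score is an assumption (the article only says the two are combined).

```python
import random
from dataclasses import dataclass


@dataclass
class Candidate:
    idea: str
    performance: float = 0.0   # stand-in for measured benchmark quality, 0..1
    judge_score: float = 0.0   # stand-in for the LLM judge's rating, 0..1

    @property
    def fitness(self) -> float:
        # Assumption: equal weights; the real combination is not given here.
        return 0.5 * self.performance + 0.5 * self.judge_score


def propose(cognition: list[str], insights: list[str], rng: random.Random) -> Candidate:
    """Researcher: derive a new idea from prior knowledge or past insights."""
    source = rng.choice(cognition + insights)
    return Candidate(idea=f"variant of {source} #{rng.randrange(10_000)}")


def run_experiment(c: Candidate, rng: random.Random, max_retries: int = 2) -> Candidate:
    """Engineer: run the experiment, retrying after a simulated code failure."""
    for _attempt in range(max_retries + 1):
        if rng.random() < 0.2:      # simulated crash: "revise the code" and retry
            continue
        c.performance = rng.random()
        c.judge_score = rng.random()
        break
    return c


def analyze(history: list[Candidate], top_k: int = 3) -> list[str]:
    """Analyst: report the ideas with the highest fitness so far."""
    best = sorted(history, key=lambda c: c.fitness, reverse=True)[:top_k]
    return [c.idea for c in best]


def evolve(steps: int, seed: int = 0) -> list[Candidate]:
    rng = random.Random(seed)
    cognition = ["gating", "convolution", "temperature control"]  # toy seed ideas
    insights: list[str] = []
    history: list[Candidate] = []
    for _ in range(steps):
        candidate = run_experiment(propose(cognition, insights, rng), rng)
        history.append(candidate)
        insights = analyze(history)     # feed findings back to the Researcher
    return history


if __name__ == "__main__":
    runs = evolve(steps=20)
    print(max(runs, key=lambda c: c.fitness))
```

The point of the sketch is the feedback edge: `analyze` output becomes input to the next `propose` call, so later candidates can build on earlier results rather than only on the initial knowledge base.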

Where Do the Breakthroughs Come From?

One of the most fascinating findings from ASI-ARCH’s experiments concerns the origin of its successful architectural breakthroughs:

- Cognition (Human Expertise): Mining existing scientific papers accounted for 48.6% of the breakthroughs. This highlights the ongoing importance of leveraging the vast repository of human knowledge.

- Experience (Self-Discovery): The system’s ability to learn and innovate based on its own experiments and analysis of its own findings accounted for a remarkable 44.8% of breakthroughs. This self-recursive learning loop is a major finding, suggesting that the AI can truly learn from its own work and improve.

- Originality (Truly Novel): Only 6.6% of breakthroughs were truly original, meaning they didn’t come from existing human knowledge or the system’s own accumulated experience. This suggests that while genuine novelty is rare, it still occurs autonomously.

The data also reveals that the Pareto Principle (80/20 rule) applies to AI research as well. A small percentage of architectural approaches (like gating systems, temperature control, and convolutional architectures) yielded the majority of successful breakthroughs. This suggests that innovation, even for an AI, isn’t entirely random but follows identifiable patterns.

The Elephant in the Room: Skepticism and Replication

It’s crucial to acknowledge that these are big claims, and the paper is facing scrutiny. Prominent researchers, such as Lucas Beyer (formerly of OpenAI, DeepMind, and Google Brain), have expressed skepticism, noting that the paper “smells fishy”.

A specific methodological concern highlighted is the practice of discarding architectures with losses more than 10% below baseline. Critics suggest this could indicate “informal leakage” or a method to cherry-pick favorable data, potentially biasing the reported success rates.
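To make the criticized filter concrete, here is a minimal sketch of what "discarding architectures with losses more than 10% below baseline" would mean numerically. The function name, the `max_drop` parameter, and the sample losses are invented for illustration; the paper's actual rule may differ in detail.

```python
def keep_architecture(loss: float, baseline_loss: float, max_drop: float = 0.10) -> bool:
    """Return False when the loss is more than `max_drop` (10%) below baseline."""
    return loss >= baseline_loss * (1.0 - max_drop)


baseline = 2.00                      # hypothetical baseline loss
losses = [2.10, 1.95, 1.85, 1.70]    # hypothetical candidate losses
kept = [x for x in losses if keep_architecture(x, baseline)]
print(kept)  # [2.1, 1.95, 1.85]
```

Note that the rule removes the runs that look best on paper (here, 1.70), which is why the choice of threshold matters for how the reported success rates should be read.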

However, the good news is that ASI-ARCH has been open-sourced, allowing other AI labs to attempt to replicate the research. Confirmation from the broader scientific community is essential to validate these ambitious claims, especially the empirical scaling law. Many in the AI community believe that even if some claims are overstated, approaches like ASI-ARCH will indeed contribute to recursive AI self-improvement.

Recursive AI and the Intelligence Explosion

If the claims of ASI-ARCH hold true, the implications are profound. Automating AI research itself is arguably “the biggest domino” we could ever automate. It paves the way for “recursive self-improving AI,” where AI systems get smarter and become even better at making themselves smarter. This compounding process could lead to what some call an “intelligence explosion,” a rapid and dramatic acceleration of AI capabilities beyond human comprehension.

While we don’t know the exact future, the trajectory of AI development, with more and more progress being driven by AI itself (like AlphaEvolve improving hardware, the Darwin Gödel Machine improving its own code, and now ASI-ARCH innovating its own architecture), suggests that such self-improving systems are certainly a direction many are working towards. As the YouTube channel “Wes Roth” puts it, “I would not bet against it”. We are certainly in for an interesting ride.

Can you remember learning to walk as a baby? You didn’t read a manual. Neither does an AI agent.

Reinforcement Learning (RL) isn’t about knowing the correct answer.

It’s about learning through trial and error, by interacting with an environment & receiving feedback.

That’s how AlphaGo defeated a world champion:

It first learned from expert games. Then it played against itself, millions of times, using RL to get better with each game. That’s how it mastered Go.
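The self-play half of that recipe can be sketched on a toy game. This is not how AlphaGo works internally (it used deep networks and tree search, and this sketch skips the expert-games phase entirely); it only shows one agent improving by playing both sides. The game (single-pile Nim: take 1 to 3 stones, whoever takes the last stone wins) and all names and hyperparameters are assumptions chosen for brevity.

```python
import random
from collections import defaultdict


def self_play_nim(episodes=20000, start=10, epsilon=0.2, alpha=0.1, seed=0):
    """Learn move values for single-pile Nim purely from games against itself."""
    rng = random.Random(seed)
    q = defaultdict(float)   # (stones_left, stones_taken) -> estimated outcome
    for _ in range(episodes):
        stones, player, moves = start, 0, []
        while stones > 0:
            actions = [a for a in (1, 2, 3) if a <= stones]
            if rng.random() < epsilon:          # occasionally try something else
                action = rng.choice(actions)
            else:                               # otherwise play the best known move
                action = max(actions, key=lambda a: q[(stones, a)])
            moves.append((player, stones, action))
            stones -= action
            player = 1 - player
        winner = moves[-1][0]                   # whoever took the last stone
        for mover, s, a in moves:
            reward = 1.0 if mover == winner else -1.0
            q[(s, a)] += alpha * (reward - q[(s, a)])   # nudge toward the result
    return q


def best_move(q, stones):
    """Greedy move for a position according to the learned values."""
    return max((a for a in (1, 2, 3) if a <= stones), key=lambda a: q[(stones, a)])
```

After training, `best_move(q, stones)` should tend toward leaving the opponent a multiple of four stones, which is the known winning strategy for this game, even though nobody told the agent that rule.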

#machinelearning #ai #ki #google #reinforcementlearning #alphago #datascience #datascientist

Pre #2020: #Factorizing Tools

These #AI were #DeepLearning breakthroughs. #Word2Vec, #DeepDream and #AlphaGo solved novel, previously unsolvable, problems.

If you weren't in the field, you might not think these were AI, and #GPT 2 might have surprised you.

People continue to think about #AI in terms of #2010s computing, which is part of the reason everyone gets it wrong whether they're #antiAI or #tech bros.

Look, we had 8GB of #ram as the standard for a decade. The standard was set in 2014, and in 2015 #AlphaGo beat a human at #Go.

Why? Because #hardware lags #software - in #economic terms: supply follows demand, but demand cannot create its own supply.

It takes 3 years for a new chip to go through the #technological readiness levels and be released.

It takes 5 years for a new #chip architecture. E.g. the #Zen architecture was conceived in 2012, and released in 2017.

It takes 10 years for a new type of technology, like a #GPU.

Now, AlphaGo needed a lot of RAM, so how did standard RAM stagnate for a decade after doubling every two years before that?

In 2007 the #iPhone was released. #Computers were all becoming smaller, #energy #efficiency was becoming paramount, and everything was moving to the #cloud.

In 2017, most people used their computer for a few applications and a web browser. But also in 2017, companies were starting to build #technology for AI, as it was becoming increasingly important.

Five years after that, we're in the #pandemic lockdowns, people are buying more powerful computers, we have #LLM, and companies are beginning to jack up the cost of cloud services.

#Apple releases chips with large amounts of unified #memory, #ChatGPT starts to break the internet, and in 2025 GPU growth continues to outpace CPU growth and there is a competitor to Apple's unified memory.

The era of cloud computing and surfing the #web is dead.

The hype of multi-trillion parameter #LLMs making #AGI is a fantasy. There isn't enough power to do that, there aren't enough chips, it's already too expensive.

What _is_ coming is AI tech performing well and running locally without the cloud. AI Tech is _not_ just chatbots and #aiart. It's going to change what you can do with your #computer.

Oops, I think I've gone a bit too deep into the #AI rabbit hole today 😳 (a thread 🧵):

Did you know why AI systems like #AlphaGo or #AlphaZero performed so well?

It was because of their _objective function_:

-1 for losing, +1 for winning ¯\_(ツ)_/¯
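Written as code, that objective is almost trivially short, which is rather the point. A minimal sketch with a hypothetical `Outcome` enum; the 0 for a draw is an added assumption, not something stated above:

```python
from enum import Enum


class Outcome(Enum):
    WIN = "win"
    LOSS = "loss"
    DRAW = "draw"


def terminal_reward(outcome: Outcome) -> int:
    """+1 for winning, -1 for losing; 0 for a draw is an assumption."""
    return {Outcome.WIN: 1, Outcome.LOSS: -1, Outcome.DRAW: 0}[outcome]
```

The agent only sees this number at the end of a game, so everything it learns about good moves has to be inferred from final results.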

Why Artificial Intelligence Like AlphaZero Has Trouble With the Real World (February 2018)

Try to design an objective function for a self-driving car...

1/3

What do a baby learning to walk and AlphaGo’s legendary Move 37 have in common?

They both learn by doing — not by being told.

That’s the essence of Reinforcement Learning.

It's great to see that my article on Q-learning & Python agents was helpful to many readers and was featured in this week's Top 5 by Towards Data Science. Thanks! :blobcoffee: And make sure to check out the other four great reads too.

-> https://www.linkedin.com/pulse/whats-our-reading-list-week-towards-data-science-dcihe

#Reinforcementlearning #AI #Python #DataScience #KI #alphago #google #googleai #ArtificialIntelligence

Did you know machine learning algorithms can teach themselves to play video games just by practicing? AI like DeepMind’s AlphaGo and OpenAI’s Dota 2 bot have even beaten top human players by learning and adapting on their own—showing how powerful and creative AI can be!

#MachineLearning #AI #ArtificialIntelligence #DeepLearning #TechFun #AIgaming #AlphaGo #Innovation #FutureTech #DataScience #SmartTech #AIRevolution #TechFacts #GamingAI

What does a baby learning to walk have in common with AlphaGo’s Move 37?

Both learn by doing — not by being told.

That’s the essence of Reinforcement Learning.

In my latest article, I explain Q-learning with a bit of Python and the world’s simplest game: Tic Tac Toe.

-> No neural nets.

-> Just some simple states, actions, rewards.

The result? A learning agent in under 100 lines of code.

Perfect if you are curious about how RL really works, before diving into more complex projects.

Concepts covered:

:blobcoffee: ε-greedy policy

:blobcoffee: Reward shaping

:blobcoffee: Value estimation

:blobcoffee: Exploration vs. exploitation
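For a feel of how those concepts fit together, here is a minimal tabular Q-learning sketch. It is not the article's code: to stay short it swaps Tic Tac Toe for a hypothetical one-dimensional corridor (start at cell 0, reward +1 on reaching the last cell), uses a plain sparse reward rather than any reward shaping, and all names and hyperparameters are assumptions.

```python
import random


def train_corridor(n_states=6, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a corridor: start at cell 0, reward +1 at the last cell."""
    rng = random.Random(seed)
    actions = (-1, +1)                       # step left or step right
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # epsilon-greedy: explore with probability epsilon, otherwise exploit
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                best = max(q[(state, a)] for a in actions)
                action = rng.choice([a for a in actions if q[(state, a)] == best])
            next_state = min(max(state + action, 0), goal)   # walls at both ends
            reward = 1.0 if next_state == goal else 0.0
            # value estimation: move toward reward + discounted best next value
            target = reward + gamma * max(q[(next_state, a)] for a in actions)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q, n_states=6):
    """Best known action for every non-goal cell."""
    return [max((-1, +1), key=lambda a: q[(s, a)]) for s in range(n_states - 1)]


if __name__ == "__main__":
    print(greedy_policy(train_corridor()))
```

The learned policy steps right in every cell, and the values shrink by a factor of `gamma` per step away from the goal, which is the "value estimation" idea in its smallest form.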

Read the full article on Towards Data Science → https://towardsdatascience.com/reinforcement-learning-made-simple-build-a-q-learning-agent-in-python/

#Python #ReinforcementLearning #ML #KI #Technology #AI #AlphaGo #Google #GoogleAI #DataScience #MachineLearning #Coding #Datascientist #programming #data

ChatGPT's lead developer and his new project: Safe Superintelligence

Many people know only this about Ilya Sutskever: he is an outstanding scientist and programmer, was born in the USSR, co-founded OpenAI, and was among those who ousted manager Sam Altman from the company in 2023. When Altman was brought back, Sutskever resigned of his own accord to join a new startup, Safe Superintelligence. Ilya Sutskever did indeed found OpenAI together with Musk, Brockman, Altman, and other like-minded people, and he was the company's chief technical genius. As OpenAI's lead scientist he played a key role in developing ChatGPT and other products. Ilya is now only 38, which is very young for a star of global stature.

https://habr.com/ru/companies/ruvds/articles/892646/

#Ilya_Sutskever #OpenAI #10x_engineer #AlexNet #Safe_Superintelligence #ImageNet #neocognitron #GPU #GPGPU #CUDA #computer_vision #LeNet #Nvidia_GTX 580 #DNNResearch #Google_Brain #Alex_Krizhevsky #Geoffrey_Hinton #Seq2seq #TensorFlow #AlphaGo #Tomas_Mikolov #Word2vec #fewshot_learning #Boltzmann_machine #superintelligence #GPT #ChatGPT #ruvds_articles

#ACMPrize

#2024ACMPrize

#ACMTuringAward

» #ReinforcementLearning

An Introduction

1998

standard reference...cited over 75,000

...

prominent example of #RL

#AlphaGo victory

over best human #Go players

2016 2017

....

recently has been the development of the chatbot #ChatGPT

...

large language model #LLM trained in two phases ...employs a technique called

reinforcement learning from human feedback #RLHF «

aka cheap labor unnamed in papers

https://awards.acm.org/about/2024-turing

2/2