Can't Connect to WayDroid Containers in LXD Using ADB #android #docker #lxd #adb

#lxd

okay

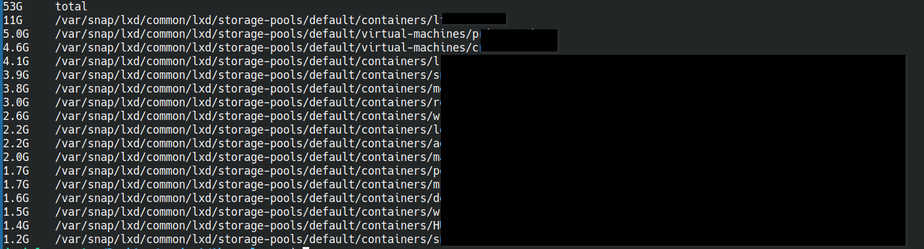

I installed Windows 11 today in 3 different VM systems (LXD, virt-manager, VirtualBox) and I am back in VirtualBox, the classic.

So, now exploring Win11 and... where the fuck is the X on the settings screen?!

Yes, I have installed all updates and restarted. Where's the X? How do I close this window?

Spoiler: I found out, can you guess?

#windows11 #windows #virtualisation #vm #virtualbox #lxd #virtmanager

New arrival at NaWik! David Lohner @davidlohner joins us as a didactics specialist and Learning Experience Designer with considerable experience in digital university teaching. He is doing his doctorate at KIT, is active on the dghd board, and brings a fondness for well-cultivated nerdiness. Welcome to the team! #NaWik #Wisskomm #Didaktik #LXD #Team

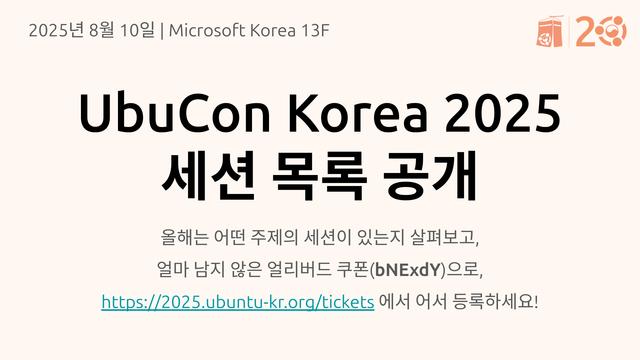

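The session list for #UbuCon #Korea, where we will celebrate the #20thAnniversary of the #UbuntuKoreaCommunity together on August 10, is already public! This year again, a wide range of interesting sessions is lined up, covering #Desktop #Cloud #HomeServer #AI #LXD #Kernel #Containers #Rockcraft #WSL and more.

If you are still wondering whether to sign up, take a look at the session list now and #register right away with the #EarlyBird coupon "bNExdY" while a few are still left!

See you on August 10 at Microsoft Korea 13F in #Gwanghwamun!

Session list announcement: https://discourse.ubuntu-kr.org/t/ubucon-korea-2025/50018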

Just saw the new Incus release announcement and took the chance to donate to @stgraber for his excellent container management software (which also runs metalhead.club and trashserver.net!)

👏

Here's a screencast of the gemma3 1b model running in Ollama in an LXD container on Ubuntu Touch on my Pixel 3a device.

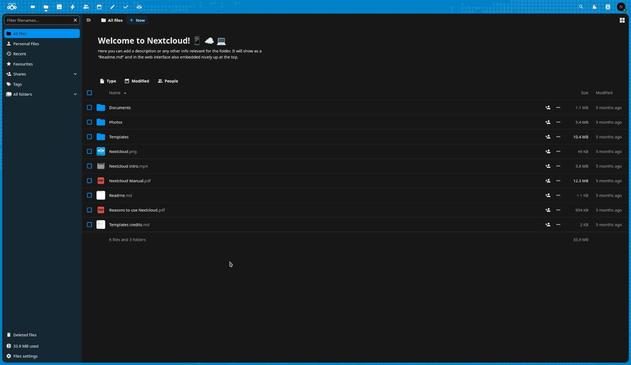

New blog post!

In which I describe in detail how I deployed Nextcloud locally in my homelab using LXD and NextcloudPi.

I go through the whole process of setting up LXD, importing the NCP image, installing, configuring, and scheduling backups for my own personal cloud.

https://stfn.pl/blog/67-deploying-nextcloud-locally-with-lxd/

:ubuntu: I really like #LXD I can spin up an environment using a custom profile and then begin installing dependencies etc within seconds. Then I can clone, modify, build and test changes without polluting my system.
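A rough sketch of that workflow, driven from Python through the `lxc` command-line client. The image `ubuntu:24.04`, the profile name `dev`, and the instance names are placeholders rather than details from the post. The helper functions only build argument lists, so nothing touches LXD until a list is passed to `run`.

```python
import subprocess


def launch_cmd(image: str, name: str, profile: str) -> list[str]:
    """Build an `lxc launch` command that stacks a custom profile on `default`."""
    return ["lxc", "launch", image, name, "--profile", "default", "--profile", profile]


def clone_cmd(source: str, target: str) -> list[str]:
    """Build an `lxc copy` command that clones an existing instance."""
    return ["lxc", "copy", source, target]


def run(cmd: list[str]) -> None:
    """Run a command and raise CalledProcessError if it exits non-zero."""
    subprocess.run(cmd, check=True)


# Example (placeholder names; needs a working LXD installation):
# run(launch_cmd("ubuntu:24.04", "build-env", "dev"))
# run(clone_cmd("build-env", "build-env-test"))
```

Cloning before experimenting keeps the original environment untouched, so a failed build only costs the throwaway copy.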

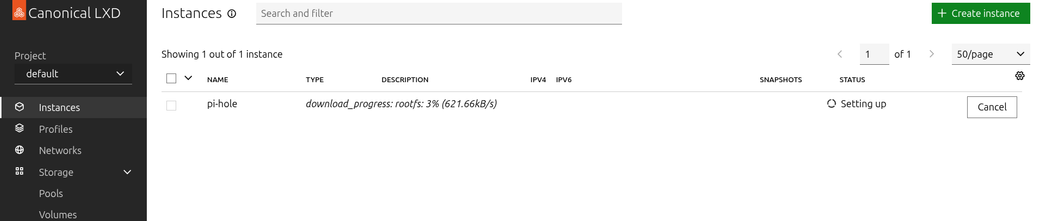

Now I'm trying out the #Canonical #lxd UI 🙈

It is supposed to be an alternative to something like Proxmox.

LXD 6.3 system container manager brings Pure Storage support, Ubuntu Pro auto-attachment, NVIDIA GPU pass-through, and more.

https://linuxiac.com/lxd-6-3-brings-pure-storage-driver-improved-gpu-pass-through/

Setting up Windows on #LXD is just enough more complicated than on libvirt to be annoying. I apparently have to rebuild the ISO to include some drivers.

So I have this idea to move at least some of my self-hosted stuff from Docker to LXC.

Correct me if I'm wrong, dear Fedizens, but I feel that LXC is better than Docker for services that are long-running and keep internal state, like Jellyfin or Forgejo or Immich? Docker containers are ephemeral in nature, whereas LXC containers are, from what I understand, somewhere between Docker containers and VMs.

#Incus is a next-generation #system #container, application container, and virtual machine manager. It provides a user experience similar to that of a public #cloud. With it, you can easily mix and match both containers and virtual machines, sharing the same underlying #storage and #network. The Incus project was created by Aleksa Sarai as a community-driven alternative to Canonical's #LXD. https://linuxcontainers.org/incus/

It's amazing how useful having a break can be.

Last night my brain had completely ground to a halt working on my #incus / #lxd #API client.

I could not, for the life of me, find the search terms to convey my question "how do I verify the remote LXD server's certificate in my #python code".

Today I have the answer and pushed updated code (https://github.com/medeopolis/container_client/).

Long story short:

- Use the supplied tools to create server and client certificates (e.g. using https://linuxcontainers.org/incus/docs/main/howto/server_expose/). You could do it with openssl commands if you really have to.

- The resulting .config/{incus,lxc}/client.{crt,key} are for client authentication.

- The resulting .config/{incus,lxc}/servercerts/servername.crt can be used for HTTPS verification/validation. *To actually use this meaningfully, the server cert has to be regenerated, because the only addresses covered out of the box are loopback IPs.*
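The three points above could be wired together with nothing but the Python standard library, roughly like this. It is a sketch and not the code from the linked repository: the function names, the port `8443`, and the `/1.0` path are assumptions for illustration, and it has not been run against a real server. Hostname checking stays on, which is why the server certificate must cover the address you connect to.

```python
import ssl
import urllib.request
from pathlib import Path


def cert_paths(config_dir: Path, server_name: str) -> dict[str, Path]:
    """Locate the client key pair and the stored server certificate."""
    return {
        "client_cert": config_dir / "client.crt",
        "client_key": config_dir / "client.key",
        "server_cert": config_dir / "servercerts" / f"{server_name}.crt",
    }


def build_tls_context(paths: dict[str, Path]) -> ssl.SSLContext:
    """Trust only the stored server cert and present the client cert."""
    # Passing cafile means the system CA bundle is not loaded, so the
    # stored server certificate is the only trust anchor.
    context = ssl.create_default_context(
        ssl.Purpose.SERVER_AUTH, cafile=str(paths["server_cert"])
    )
    # The client certificate and key authenticate us to the server.
    context.load_cert_chain(
        certfile=str(paths["client_cert"]), keyfile=str(paths["client_key"])
    )
    return context


def fetch_api_root(host: str, context: ssl.SSLContext, port: int = 8443) -> bytes:
    """GET the API root over verified HTTPS (port and path are assumptions)."""
    url = f"https://{host}:{port}/1.0"
    with urllib.request.urlopen(url, context=context) as response:
        return response.read()


# Example (hypothetical server name and config directory):
# paths = cert_paths(Path.home() / ".config" / "incus", "servername")
# print(fetch_api_root("servername.example", build_tls_context(paths)))
```

If verification fails with a hostname mismatch, that is the loopback-only certificate problem described in the last bullet, not a bug in the client code.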

I don't know if there is one place I can point to and thank for helping me figure my way through, but https://discuss.linuxcontainers.org/ had several useful posts 🙏 , and perhaps a good night's sleep helped too.

So I managed to get #Forgejo jobs running in a way that I'm happy enough with. The main concern is that they're as isolated as possible. The current runner has a scary warning on it that it's in alpha and shouldn't be considered secure.

The runner instance is Debian running in an #lxd --vm on my laptop (I didn't want to consume resources on the server since it's small). In this instance I have #k0s running, which is a slimmed-down #Kubernetes, which is running #Codeberg's runner pod. This has three containers: one to register the runner, then two others that are always running, the runner itself and #Docker-in-Docker. You can replicate this entire setup so that each runner gets its own DinD which is completely separated from the others.

Each runner checks for a single job in a loop, and once a job is completed then the docker server is pruned, and the runner shuts down. Then k0s will automatically restart it. This isn't perfect but it's better than runner processes which never shut down, because the docker cache will linger and others can look at it to spy on your jobs.
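The one-job-then-exit lifecycle can be sketched as below. This is not the Forgejo runner's code: `fetch_job`, `execute`, and `prune` are hypothetical callables standing in for "ask the server for work", "run the job", and "prune the Docker daemon". The point is only the ordering, with the prune guaranteed before the process exits.

```python
import time
from typing import Callable, Optional


def run_single_job(
    fetch_job: Callable[[], Optional[str]],
    execute: Callable[[str], None],
    prune: Callable[[], None],
    poll_seconds: float = 5.0,
) -> str:
    """Poll until one job arrives, run it, prune Docker state, then return."""
    while True:
        job = fetch_job()
        if job is not None:
            break
        time.sleep(poll_seconds)  # nothing to do yet, ask again later
    try:
        execute(job)
    finally:
        # Prune even when the job fails, so no cache survives for the next job.
        prune()
    return job
```

Once the function returns, the process would simply exit and leave the restart to the supervisor (k0s in the setup described above).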

What could be better on a Sunday than throwing more incomplete #sourcecode over the wall and hoping no one throws it back?

This time it's a paper-thin wrapper around the #LXD / #Incus REST #API written in #python .

(I'll be trying to use that instead of #pylxd until their websockets bug fixes are in place. Hopefully then I can swap back to pylxd)

After taking the nickel tour of #Qubes, my hasty conclusion is that it is anti-#KISS; there are seemingly many moving parts under the surface, and many scripts to grok to comprehend what is going on.

I plan to give it some more time, if only to unwrap how it launches programs in a VM and shares them with dom0's X server and audio and all that; perhaps it's easier than I think.

I also think #Xen is a bit overkill, as the claim is that it has a smaller kernel and therefore a smaller attack surface than the seemingly superior alternative, #KVM. From some rudimentary searching for identified / known VM escapes, there seem to be many more that affect Xen than KVM in the first place.

Sure, the #Linux kernel may be considerably larger than the Xen kernel, but it does not need to be (a lot can be trimmed from the Linux kernel if you want a more secure hypervisor), and the Linux kernel is arguably more heavily audited than the Xen kernel.

My primary concern is compartmentalization of 'the web', which is the single greatest threat to my system's security, and while #firejail is a great solution, I have run into issues keeping my qutebrowser.local and firefox.local files tuned to work well, and it's not the simplest of solutions.

Qubes offers great solutions to the compartmentalization of data and so on, and for that, I really like it, but I think it's overkill, even for people that desire and benefit from its potential security model, given what the threats are against modern workstations, regardless of threat actor -- most people (I HOPE) don't have numerous vulnerable services listening on random ports waiting to be compromised by a remote threat.

So I am working to refine my own security model, with the lessons I'm learning from Qubes.

Up to this point, my way of using a system is a bit different than most. I have 2 non-root users, neither has sudo access, so I do the criminal thing and use root directly in a virtual terminal.

One user is my admin user that has ssh keys to various other systems, and on those systems, that user has sudo access. My normal user has access to some hosts, but not all, and has no elevated privileges at all.

Both users occasionally need to use the web. When I first learned about javascript, years and years ago, it was a very benevolent tool. It could alter the web page a bit, and make popups and other "useful" things.

At some point, #javascript became a beast, a monster, something that was capable of scooping up your password database and your ssh keys, and probing your local networks with port scans.

In the name of convenience.

As a result, we have to take browser security more seriously, if we want to avoid compromise.

The path I'm exploring at the moment is to run a VM or two as a normal user, using KVM, and then use SSH X forwarding to run firefox from the VM, which I can more easily firewall. That ensures that if someone escapes my browser or abuses JS in a new and unique way, no credentials are accessible unless they are also capable of breaking out of the VM.

What else might I want to consider? I 'like' the concept of dom0 having zero network access, but I don't really see the threat actor that is stopping. Sure, if someone breaks from my VM, they can then call out to the internet, get a reverse shell, download some payloads or build tools, etc.

But if someone breaks out of a Qubes VM, they can basically do the same thing, right? Because they theoretically 'own' the hypervisor, and can restore network access to dom0 trivially, or otherwise get data onto it. Or am I mistaken?

Also, what would the #LXC / #LXD approach look like for something like this? What's its security record like, and would it provide an equivalent challenge to someone breaking out of a web browser (or other program I might use but am not thinking of at the moment)?

Hey everyone, you've been meaning to do it, but now is the time to 'circle the wagons' and get your systems moved from Docker to something else.

Time for me to 'restart' the migration.

Remember: never Oracle